At the end of The Social Network, there’s a scene where Mark Zuckerberg, sitting alone in a boardroom, sends a friend request to a girl on Facebook from his laptop. Then he sits back in his chair and keeps refreshing the page again and again, waiting for his request to be accepted.

Let’s admit it: we’ve all been in a situation like this. It could be for any number of things, like checking whether a gaming console is back in stock or whether the price of a flight has dropped. The website is open in front of us, and we keep staring at it, eyes peeled for a change.

Of course, you probably know that this is the least productive way to spend your time. Fortunately, there is a solution: have your computer do it for you automatically. 🤖️

In this article, you will learn how to monitor website changes.

If you want to get started quickly, Bardeen lets you build workflows to monitor any webpage, no code required.

We are going to use no-code website scraper tools that track a specific field on a website and notify you when it changes.

Excited? Let’s dive in!

Before we get to setting up an automation for monitoring website changes, let’s talk about the magic that happens behind the scenes.

If you want to monitor a website, there are two ways to go about it: APIs and data scrapers. Let’s talk about both options briefly.

API stands for “Application Programming Interface,” and it acts as a middleman between two apps that want to communicate with each other and exchange data.

Let's use an example to visualize this. Say you want to book a flight. You may go to a site like Google Flights. After entering search criteria like the destination and date, you press Enter, and voilà, you have a list of flights right in front of you.

In the background, Google searched its database and returned the results. What if you could ask Google to tell you automatically whenever these results change?

In theory, you could set up a workflow in which the API of the website you want to monitor pings your server and sends you nicely formatted data the instant something changes.
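Not every API pushes updates to you. A common fallback is to poll the API on a schedule and compare each response with the previous one. Here is a minimal Python sketch of that comparison step, assuming a hypothetical endpoint that returns a flat JSON object; real APIs usually require an API key and have their own response formats.

```python
import json
import urllib.request
from typing import Any, Dict, Tuple


def fetch_json(url: str) -> Dict[str, Any]:
    """Download and decode a JSON response from a (hypothetical) API endpoint."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def changed_fields(old: Dict[str, Any], new: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Map each top-level field whose value differs to its (old, new) pair."""
    return {
        key: (old.get(key), new.get(key))
        for key in sorted(old.keys() | new.keys())
        if old.get(key) != new.get(key)
    }
```

For example, comparing `{"flight": "LH400", "price": 512}` with `{"flight": "LH400", "price": 468}` returns `{"price": (512, 468)}`, which is exactly the kind of payload you would turn into a notification.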

But the problem is twofold.

First, not all websites have a public API that you can use.

And second, monitoring websites using APIs is time-consuming to set up, and you need to know how to code. So, let’s look at the next option.

If APIs seem way out of your ballpark, web scrapers are your best option.

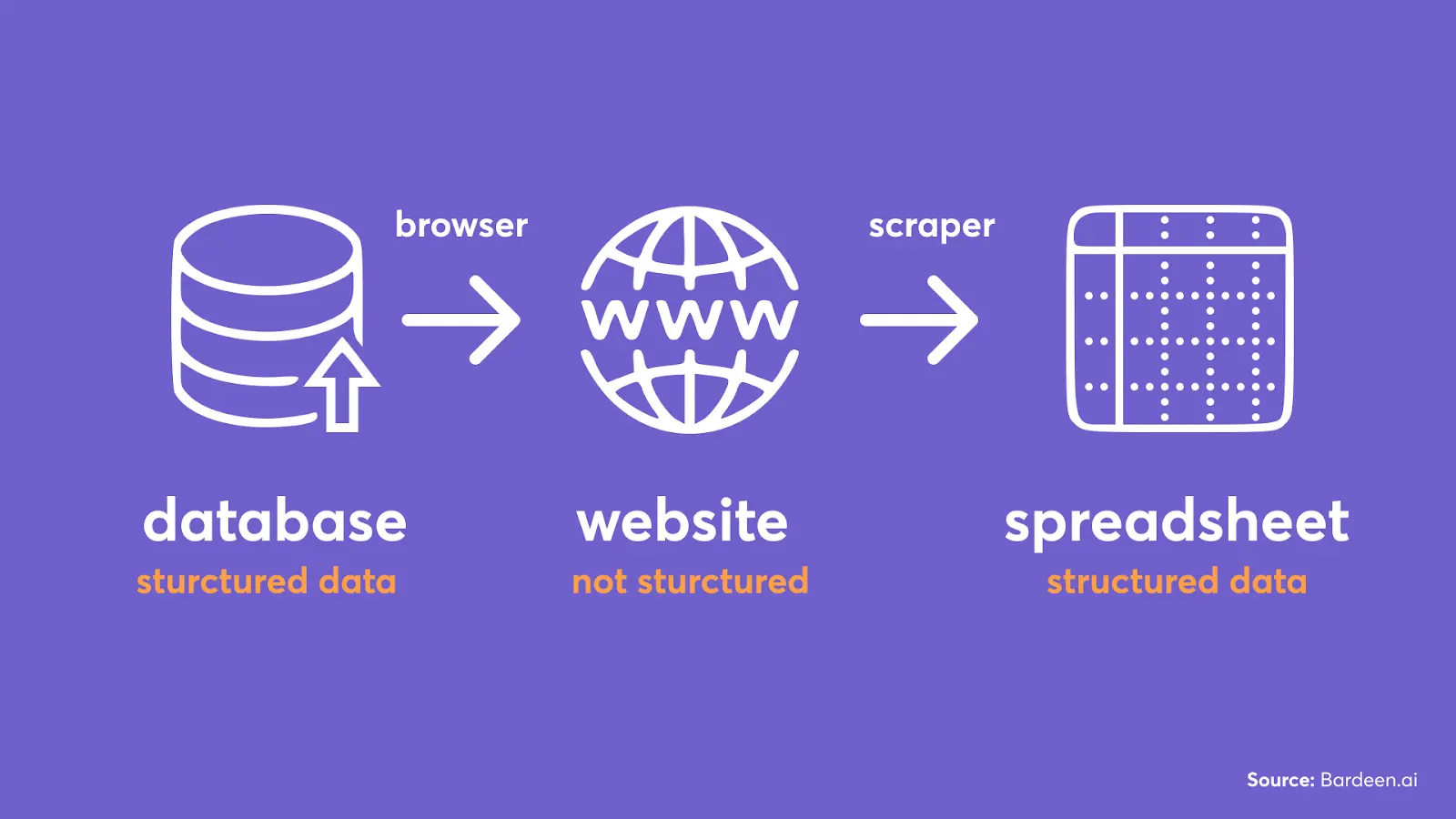

Scrapers are software tools that extract data from almost any website in a structured format (like a table).

Once you can extract data from a particular place on a website, you can monitor it for changes.

Website monitoring tools built on web scrapers visit the website at regular intervals and extract the data you are monitoring. Then they compare this data with the original. As soon as the data differs from the original, you get a notification. Pretty simple, right?
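To make the visit, extract, compare loop concrete, here is a minimal Python sketch using only the standard library. It assumes the value you care about sits inside an element with a known `id` (the `stock` id used in testing is hypothetical) and that the HTML is reasonably well-formed; it is an illustration of the idea, not how any of the tools below work internally. In practice, you would download each snapshot first, for example with `urllib.request.urlopen(url).read().decode()`.

```python
from html.parser import HTMLParser
from typing import List, Optional

# Void elements never get a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "param", "source", "track", "wbr"}


class ElementTextExtractor(HTMLParser):
    """Collects the text inside the first element whose id matches target_id."""

    def __init__(self, target_id: str):
        super().__init__()
        self.target_id = target_id
        self.depth = 0          # > 0 while the parser is inside the target element
        self.found = False
        self.done = False
        self.parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1     # a nested element inside the target
        elif dict(attrs).get("id") == self.target_id:
            self.found = True
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            if self.depth == 0:
                self.done = True  # stop after the first matching element closes

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def extract_text(html: str, element_id: str) -> Optional[str]:
    """Return the whitespace-normalized text of the element, or None if it is missing."""
    parser = ElementTextExtractor(element_id)
    parser.feed(html)
    parser.close()
    if not parser.found:
        return None
    return " ".join("".join(parser.parts).split())


def has_changed(baseline_html: str, current_html: str, element_id: str) -> bool:
    """Compare the tracked element's text in the original snapshot and a fresh one."""
    return extract_text(baseline_html, element_id) != extract_text(current_html, element_id)
```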

But what if the data isn't publicly accessible and you need to be logged in to view it?

In this case, traditional cloud-based tools can't access the information because the pages are password-protected. Fortunately, browser-based tools (like Bardeen) can get around this limitation by monitoring websites in the background, right in your browser!

Overall, web scrapers are much more user-friendly than the API approach, and you can set them up in minutes.

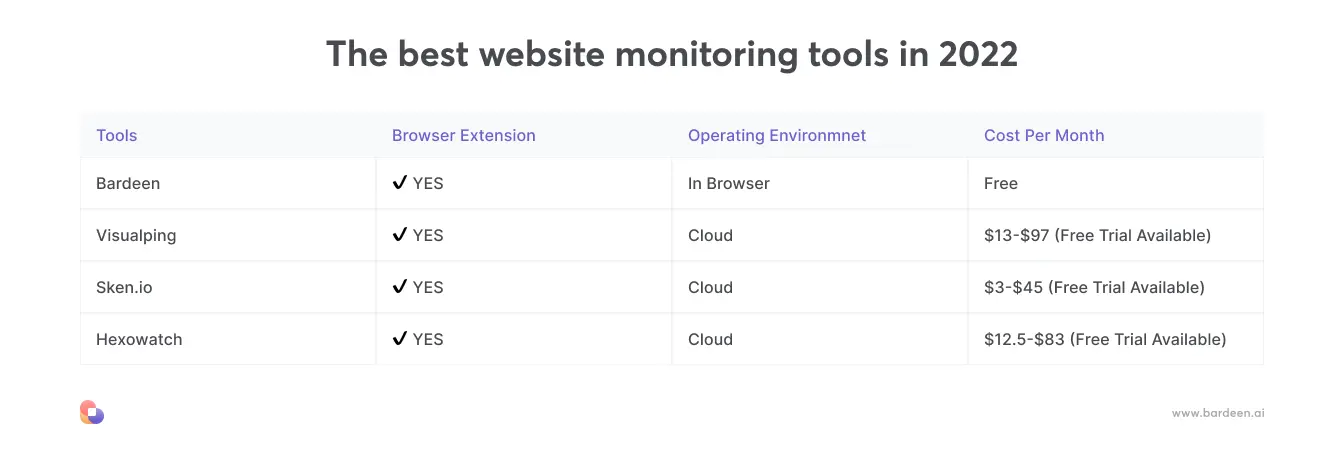

Now, let’s explore the best website monitoring tools and get you set up.

Bardeen is a no-code workflow automation tool that integrates with many of your favorite web apps, like Gmail, Slack, and Google Sheets. It also has a very powerful scraper feature.

You can create automations using its web scraper and then pick a follow-up action that runs when something on the website changes. For example, you can have an SMS, a Slack message, an email, or even a tweet sent automatically.

Because Bardeen works from the browser, it is great for websites that require you to be logged in to view the information you want to track. It’s also more secure than many other tools, since you don't need to share your password or cookies.

Finally, its workflow automation engine unlocks more complex workflows, like automatically adding scraped information to Google Sheets when a website changes.

Here is how to start monitoring websites with Bardeen.

In this example, we will monitor a company variable on LinkedIn.

To create an automation, click on “new playbook” and add the following trigger:

When website data changes.

A scraper template points at the data fields you’d like to track.

The “When website data changes” trigger from the previous step requires you to provide a scraper template.

Click on “create new scraper template” and point at the data that you want to monitor. In our case, that'll be the company name element.

Save the scraper template.

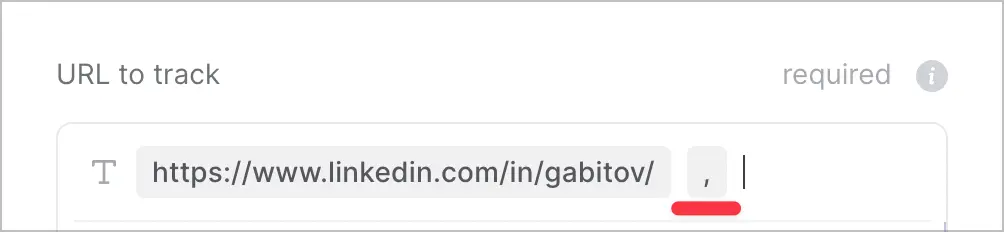

You will then be asked for the URL of the web page that you want to monitor. You can monitor multiple pages with the same scraper template.

To do so, separate the URLs with commas.

Now that you’ve successfully set up a scraper for monitoring, Bardeen will remember the initial data for all the variables you are monitoring. It will check the website in the background every ten minutes and rescrape the data. When something changes, the automation will be triggered.
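The behavior described above (remember the initial values, recheck on a ten-minute schedule, trigger on change, accept several comma-separated URLs) can be mimicked in a few lines of Python. This is a hypothetical sketch of the idea, not Bardeen's implementation; `scrape` stands for any function you supply that returns the tracked value for a URL, and `on_change` for whatever follow-up action you want.

```python
import time
from typing import Callable, Dict, List


def parse_urls(text: str) -> List[str]:
    """Split a comma-separated list of URLs, ignoring blanks and stray spaces."""
    return [u.strip() for u in text.split(",") if u.strip()]


def check_once(urls: List[str], scrape: Callable[[str], str],
               baselines: Dict[str, str]) -> List[str]:
    """Scrape every URL once; return the URLs whose value differs from its baseline."""
    changed = []
    for url in urls:
        value = scrape(url)
        if url not in baselines:
            baselines[url] = value      # first run: remember the initial data
        elif value != baselines[url]:
            baselines[url] = value      # new baseline, so each change alerts only once
            changed.append(url)
    return changed


def monitor(urls: List[str], scrape: Callable[[str], str],
            on_change: Callable[[str, str], None], interval_seconds: int = 600) -> None:
    """Poll forever (600 s = ten minutes between rounds), calling on_change for each change."""
    baselines: Dict[str, str] = {}
    while True:
        for url in check_once(urls, scrape, baselines):
            on_change(url, baselines[url])
        time.sleep(interval_seconds)
```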

Click on the + icon in the builder to add the follow-up action. You can send an email, take a website screenshot, shoot a new message in Slack, and even add new data to a Google Sheet!
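If you ever build your own monitor rather than relying on Bardeen's built-in actions, a Slack alert can go through a Slack incoming webhook, which accepts an HTTP POST with a JSON body containing a `text` field. The sketch below assumes you have already created such a webhook in your workspace; any webhook URL you see in an example is a placeholder, and the message wording is made up for illustration.

```python
import json
import urllib.request


def build_slack_alert(webhook_url: str, page_url: str, old: str, new: str) -> urllib.request.Request:
    """Build a POST request for a Slack incoming webhook describing one change."""
    payload = {"text": f"Change detected on {page_url}: {old!r} -> {new!r}"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_slack_alert(webhook_url: str, page_url: str, old: str, new: str) -> int:
    """Send the alert and return the HTTP status code of Slack's response."""
    request = build_slack_alert(webhook_url, page_url, old, new)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Splitting request construction from sending keeps the message format easy to check without touching the network.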

In this example, we monitored the company field on a LinkedIn profile page. If you’re looking for your next team member, you can set up an automation like this and get notified when a killer candidate changes jobs or updates their profile description.

You can use the same logic for many other use cases.


Visualping is a cloud-based website monitoring tool that will send you an email or a Slack message when a website changes. If you’re looking to track public websites, Visualping is right up your alley.

Just like Bardeen, it is simple to set up and is customizable. You can also specify the scraping frequency.

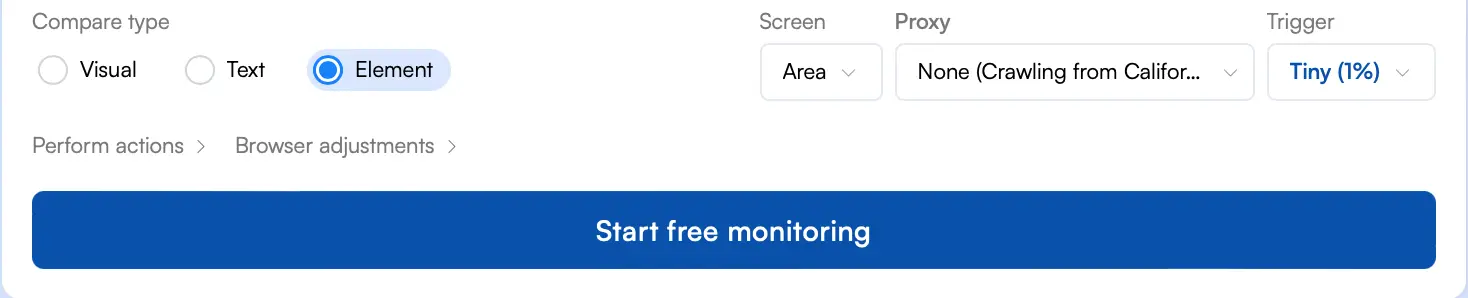

Enter the URL of the page you want to track, then select the element you want to watch.

If Visualping detects a change, it’ll email you. A live demo is available on their homepage!

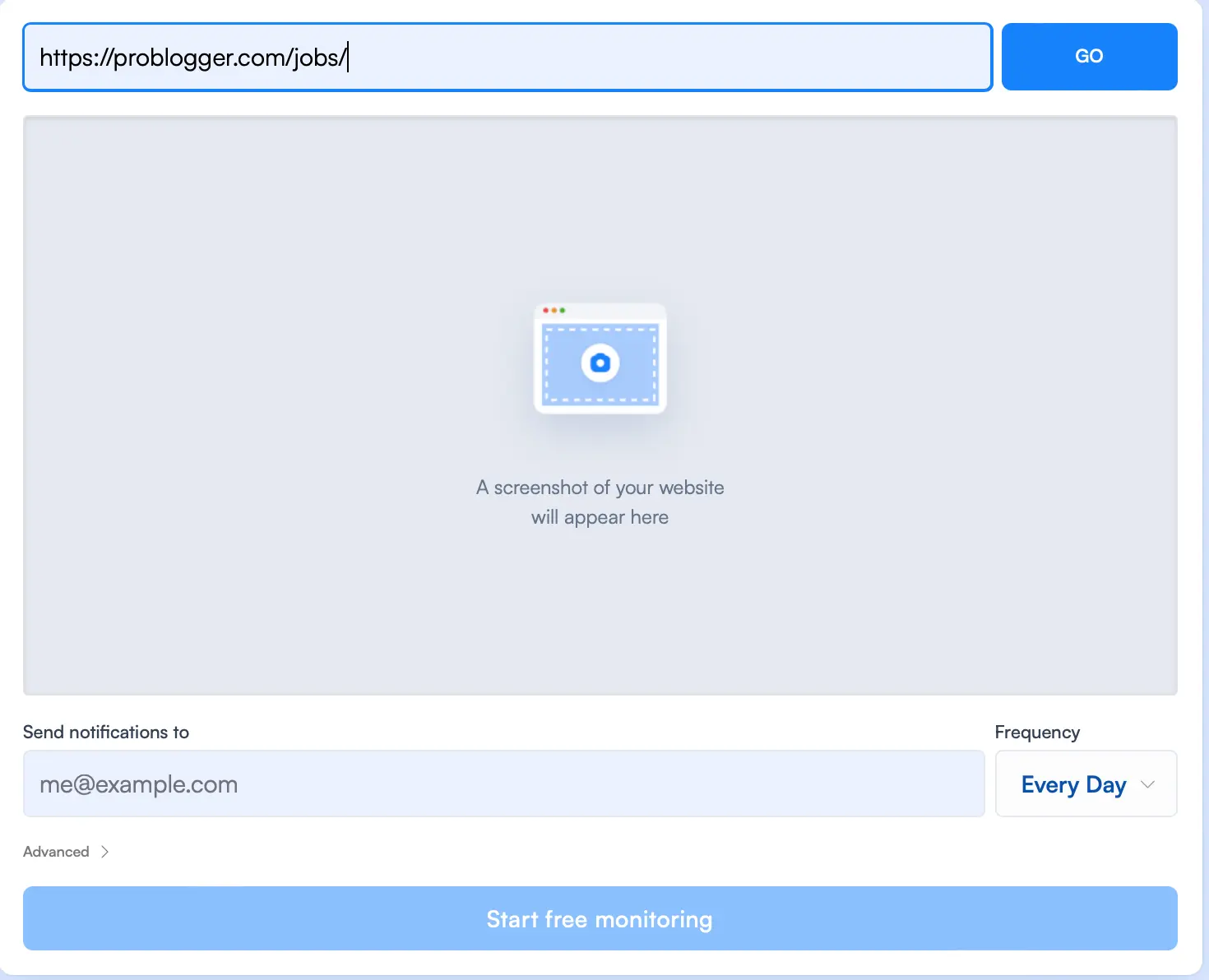

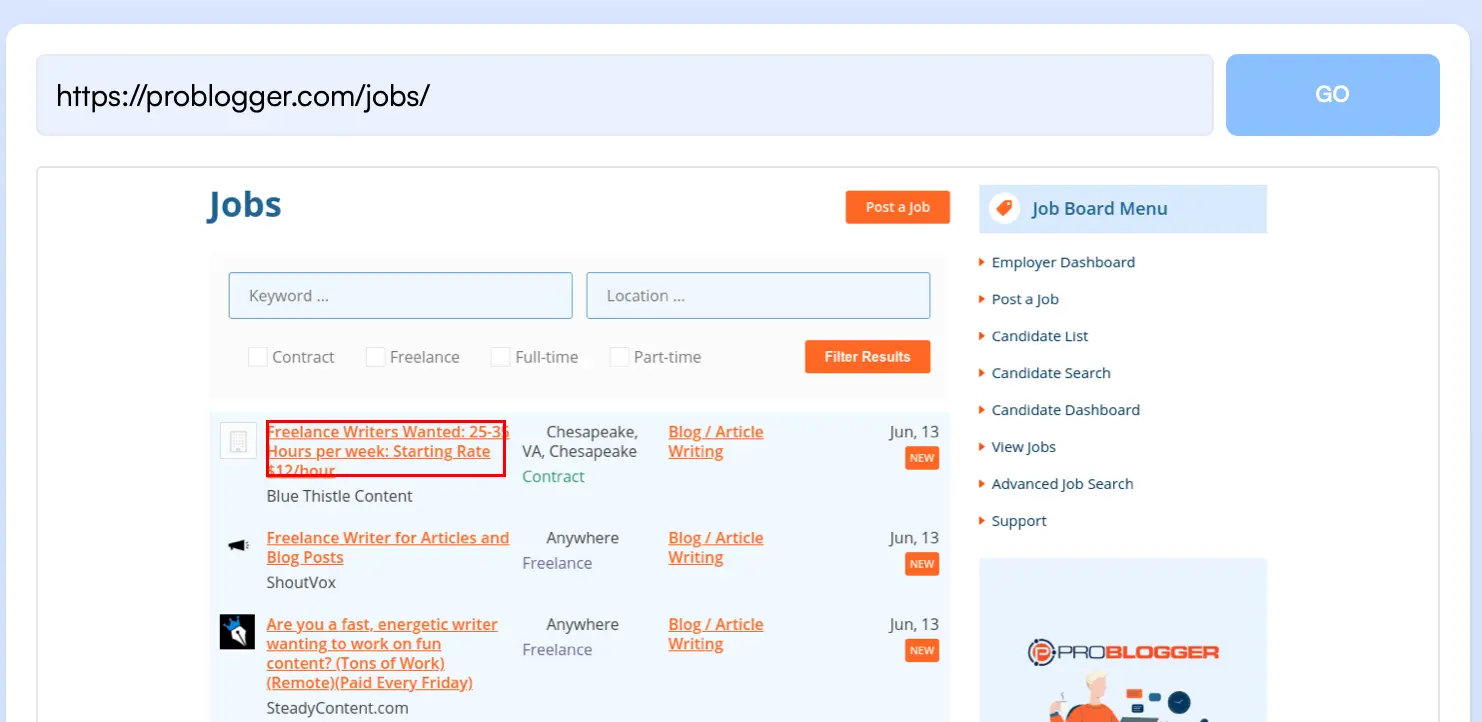

For this example, I’m going to track when a new job is posted on the ProBlogger job board. After you’ve added the URL, click “Go,” and Visualping will retrieve the page.

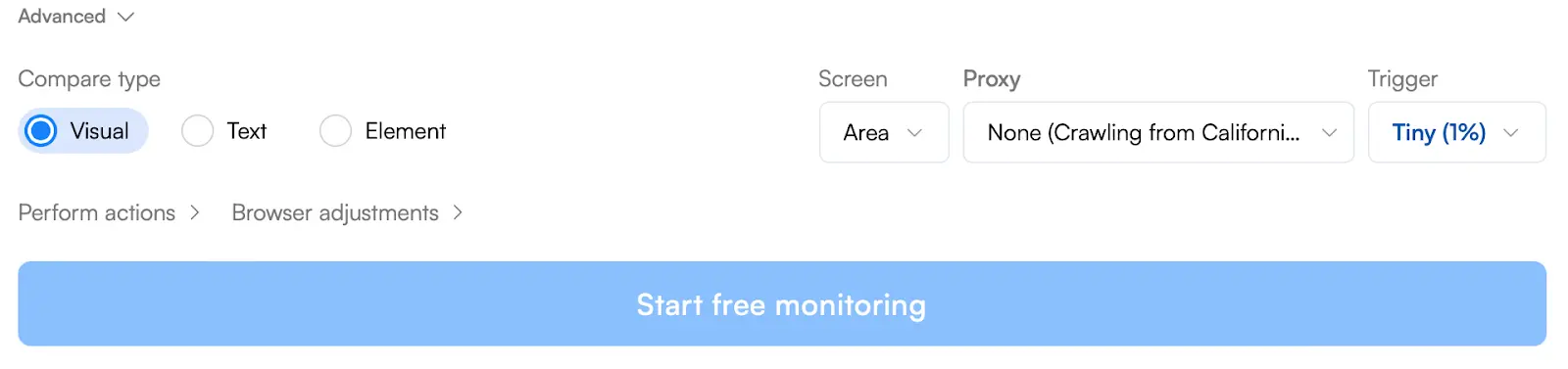

Now, choose the type of monitoring you want: visual, text, or element. You can also set advanced options, like choosing the web crawler's location or adding actions such as scrolling, waiting, typing, or setting cookies.

In this example, I chose the “Element” type. A red outline will highlight the selected elements.

For visual tracking, you can choose a specific part of the website you'd like to screenshot. For text, you can monitor the entire website.

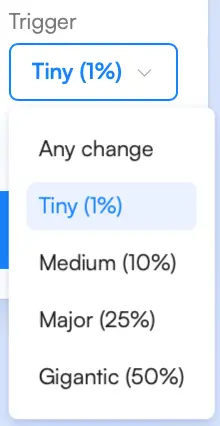

After you’ve chosen what to monitor and customized the scraper, there is one last thing to decide: how big a change should trigger a notification.

Depending on your use case, you may want to be notified about every little change or only about large ones.

Pretty neat, right?
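Visualping doesn't say exactly how it measures the size of a change, so the following is only one plausible way to express a text-change threshold: use Python's `difflib.SequenceMatcher` to estimate what percentage of the text differs, and notify only at or above a chosen threshold. The percentage definition here is an assumption for illustration.

```python
from difflib import SequenceMatcher


def change_percent(old: str, new: str) -> float:
    """Percentage of the text that differs (0 = identical, 100 = nothing in common)."""
    return (1.0 - SequenceMatcher(None, old, new).ratio()) * 100


def should_notify(old: str, new: str, threshold_percent: float) -> bool:
    """Notify only for real changes at least as large as the threshold."""
    percent = change_percent(old, new)
    return percent > 0 and percent >= threshold_percent
```

A threshold of 0 behaves like "notify me about every little change," while something like 25 ignores minor edits such as a single changed character in a long paragraph.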

After that, click on the “Start free monitoring” button. If you haven’t created an account yet, you’ll be asked to create one.

Once done, the monitoring will begin.

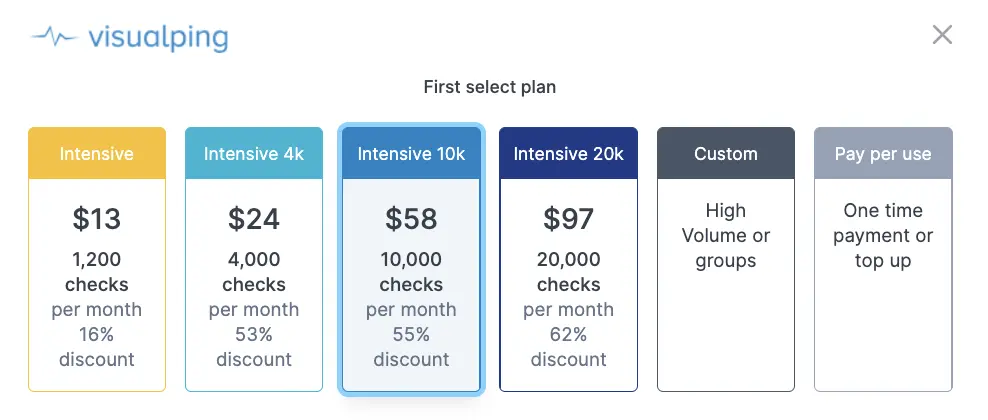

The main downside of Visualping is the price. Here are the pricing options:

Signing up for the free trial gives you 65 credits. That doesn't go far: monitoring a single website every 10 minutes for a 30-day month takes 4,320 checks (6 per hour × 24 hours × 30 days), which adds up to quite a lot of money. If you want a free alternative, you can use the Visualping Chrome extension instead.

It's free forever and well suited to high-frequency checks on websites that require a login. However, it still has a few downsides.

First, because it runs in your browser, your computer needs to stay on all the time. And because it runs locally, Visualping doesn't alert you by email or any other channel. You only get a browser notification, which is easy to miss.

If you want email alerts without keeping your computer on all day, you can opt for server checks instead, but only two server checks per day are free. Beyond that, you'll need a paid plan.
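The check counts above come from simple arithmetic: a 10-minute interval means 6 checks an hour, 144 a day, and 4,320 in a 30-day month. The small helper below reproduces that calculation for any interval. It also estimates how long a credit balance lasts, under the assumption (not confirmed here) that one check costs one credit; adjust `credits_per_check` if your plan counts differently.

```python
MINUTES_PER_DAY = 24 * 60


def checks_per_month(interval_minutes: int, days: int = 30) -> int:
    """Number of checks a monitor runs in `days` days at a fixed interval."""
    return days * MINUTES_PER_DAY // interval_minutes


def hours_covered(credits: int, interval_minutes: int, credits_per_check: int = 1) -> float:
    """How many hours a credit balance lasts (credits_per_check=1 is an assumption)."""
    return credits / credits_per_check * interval_minutes / 60
```

Under that assumption, 65 credits at a 10-minute interval last a little under 11 hours.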

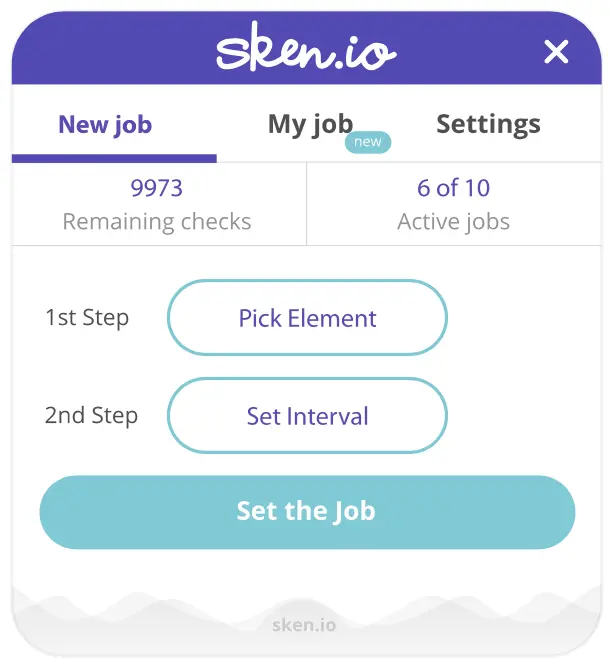

Sken.io is another great option for website monitoring, and it also offers an Android app and a Chrome extension.

The process isn't much different from Bardeen or Visualping: enter the URL of the webpage you want to monitor, select the element you want the tool to latch onto, and get a notification by email or on your phone whenever it changes.

As with Visualping, you can configure the checking frequency and make it as frequent or infrequent as you need, depending on what you are tracking.

Sken.io also comes packed with smart features like CSS change detection and ignoring pop-up windows. These could be useful if the site's design changes after you set up the automation and the element you chose is no longer there.

This could save you the headache of going back and editing the template again.
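Sken.io doesn't document how its CSS change detection works, so the following is a hypothetical approach to the same problem: before reporting a content change, compare the ids and class names used on the old and new versions of the page. If the tracked element's id has vanished, the template needs attention; if the set of ids or classes shifted, the site was probably redesigned.

```python
from html.parser import HTMLParser
from typing import Set


class SelectorCollector(HTMLParser):
    """Records every id and class name used on a page."""

    def __init__(self):
        super().__init__()
        self.ids: Set[str] = set()
        self.classes: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:
                continue                    # boolean attributes carry no value
            if name == "id":
                self.ids.add(value)
            elif name == "class":
                self.classes.update(value.split())


def collect(html: str) -> SelectorCollector:
    collector = SelectorCollector()
    collector.feed(html)
    collector.close()
    return collector


def classify_layout(old_html: str, new_html: str, tracked_id: str) -> str:
    """Tell a redesign apart from a content change before alerting."""
    old, new = collect(old_html), collect(new_html)
    if tracked_id not in new.ids:
        return "element-missing"    # the scraper template probably needs updating
    if old.ids != new.ids or old.classes != new.classes:
        return "layout-changed"
    return "layout-unchanged"
```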

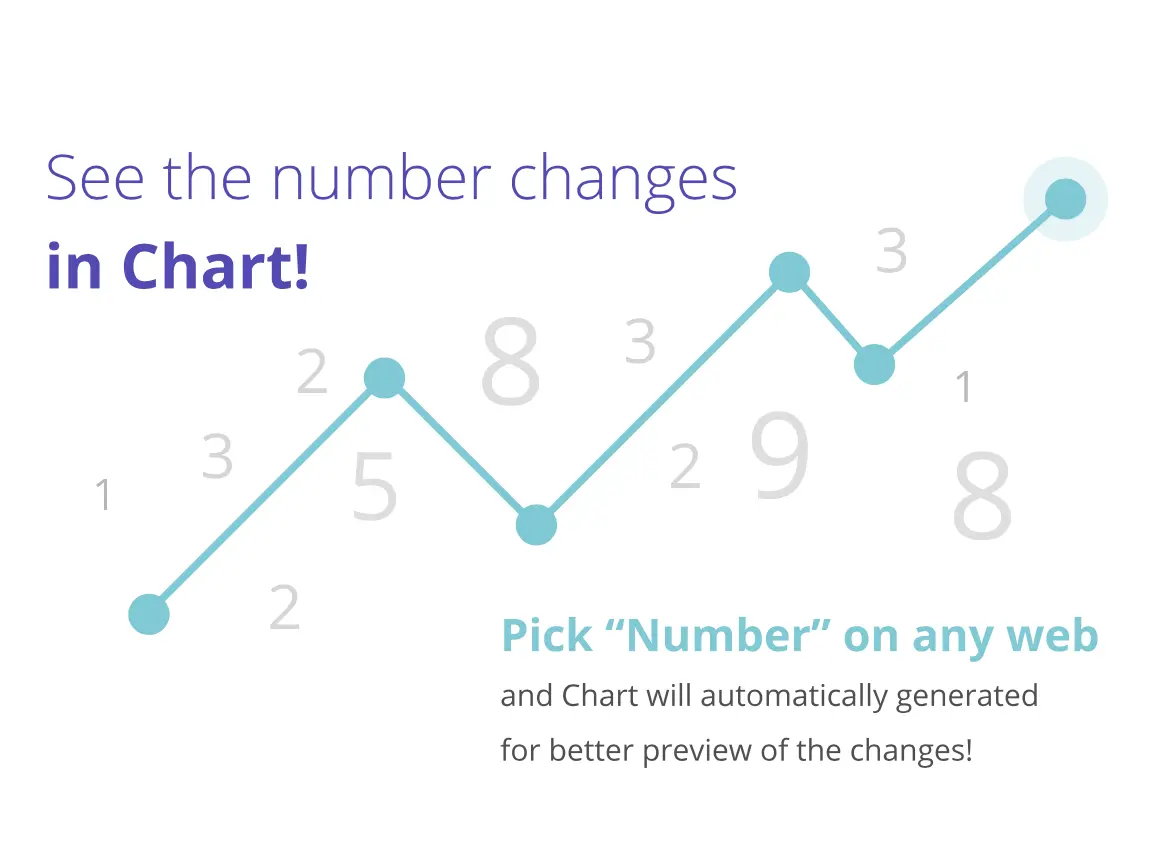

Plus, if you're tracking numerical values, like price changes and the number of sales or downloads, Sken.io also has a chart preview feature which breaks down your data in an easy-to-understand format.
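Charting starts with turning scraped text into numbers. Here is a minimal Python sketch of that step, assuming US/UK-style formatting where commas group thousands and a period marks decimals (European prices like €1.299,00 would need different parsing). It is not Sken.io's implementation.

```python
import re
from typing import List, Optional

# Assumes "," groups thousands and "." marks decimals.
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def parse_number(text: str) -> Optional[float]:
    """Pull the first number out of scraped text such as "$1,299.00" or "12 downloads"."""
    match = NUMBER_RE.search(text)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def deltas(history: List[float]) -> List[float]:
    """Change between consecutive readings, ready to plot as a chart."""
    return [current - previous for previous, current in zip(history, history[1:])]
```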

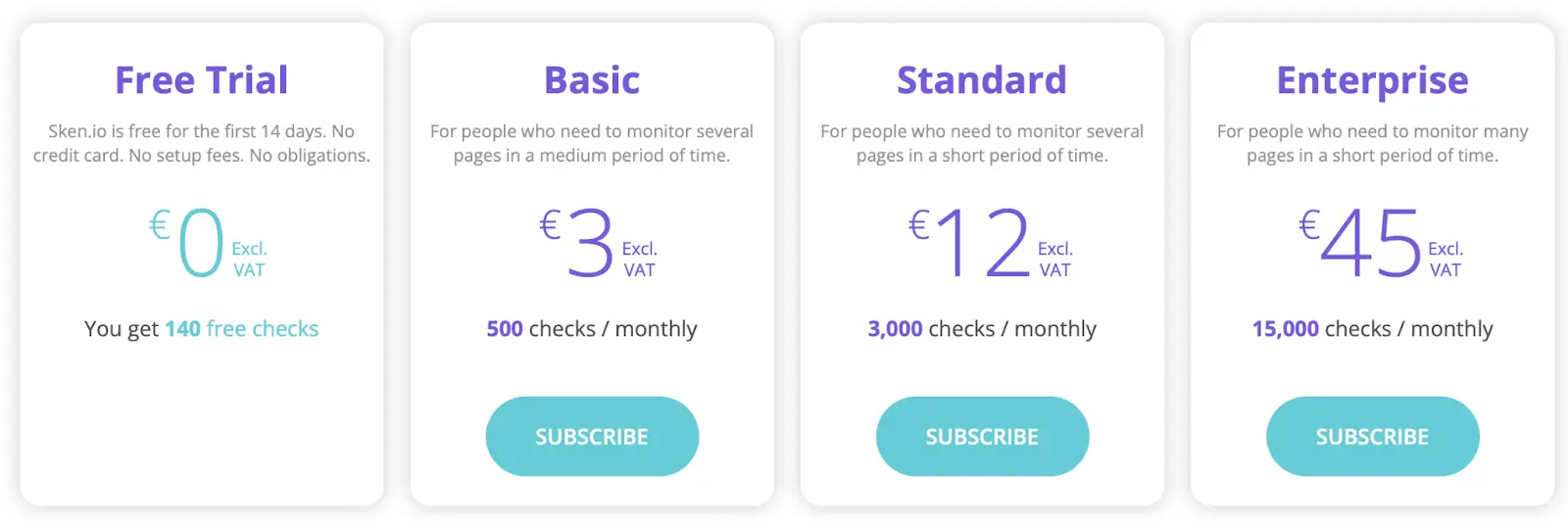

Sken.io offers a 14-day free trial with 140 free checks. After that, you can choose from plans ranging from €3 to €45 per month.

Overall, this is a great option for monitoring website changes.

If you need a more heavyweight option for website monitoring that goes below the surface, Hexowatch might be your pick.

Hexowatch is the most in-depth and sophisticated tool we’re going to review. It allows you to track websites from multiple locations and on different types of devices, and even to leverage APIs and AI.

Here is how to set it up.

First, log in and go to your main dashboard. Then click on “Add URL.”

You’ll be shown a variety of monitoring types to choose from: visual, HTML element, HTML page, keyword, technology, content, availability, Domain WHOIS, sitemap, API, backlink, RSS feed, and even AI monitoring.

Choose the one that suits your use case the best.

Now, you can set up the automation.

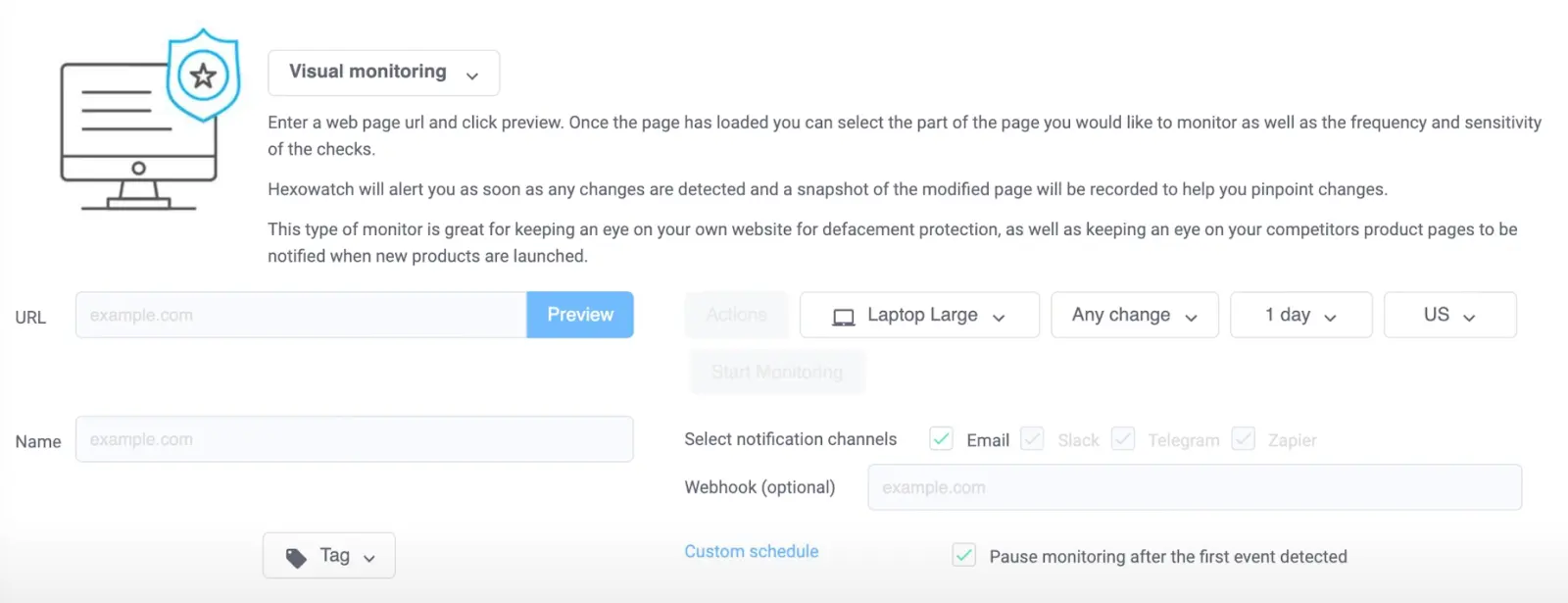

In this example, I’ll use the most common type - visual monitoring.

As you can see, there are many customization options.

You can preview the website on different devices (including mobile, tablet, and laptop), choose the sensitivity, configure the checking interval, and define the location.

Finally, you will need to specify how you want to be alerted: email, Slack, or Telegram.
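Visual monitoring with a sensitivity setting boils down to comparing two screenshots pixel by pixel. The sketch below represents screenshots as plain lists of RGB tuples so it runs without dependencies; a real tool would load images with a library such as Pillow. The `tolerance` and `sensitivity_percent` parameters are illustrative assumptions, not Hexowatch's actual settings.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]        # (red, green, blue), each 0-255
Screenshot = List[List[Pixel]]      # rows of pixels


def changed_pixel_ratio(before: Screenshot, after: Screenshot, tolerance: int = 16) -> float:
    """Fraction of pixels whose color moved by more than `tolerance` in any channel."""
    if len(before) != len(after) or any(len(a) != len(b) for a, b in zip(before, after)):
        raise ValueError("screenshots must have the same dimensions")
    total = changed = 0
    for row_before, row_after in zip(before, after):
        for p, q in zip(row_before, row_after):
            total += 1
            if max(abs(c1 - c2) for c1, c2 in zip(p, q)) > tolerance:
                changed += 1
    return changed / total if total else 0.0


def visual_alert(before: Screenshot, after: Screenshot, sensitivity_percent: float = 1.0) -> bool:
    """Alert when at least `sensitivity_percent` of the pixels changed."""
    ratio = changed_pixel_ratio(before, after)
    return ratio > 0 and ratio * 100 >= sensitivity_percent
```

The per-channel tolerance keeps tiny rendering differences (anti-aliasing, compression noise) from counting as changes, while the sensitivity decides how much of the page must change before you hear about it.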

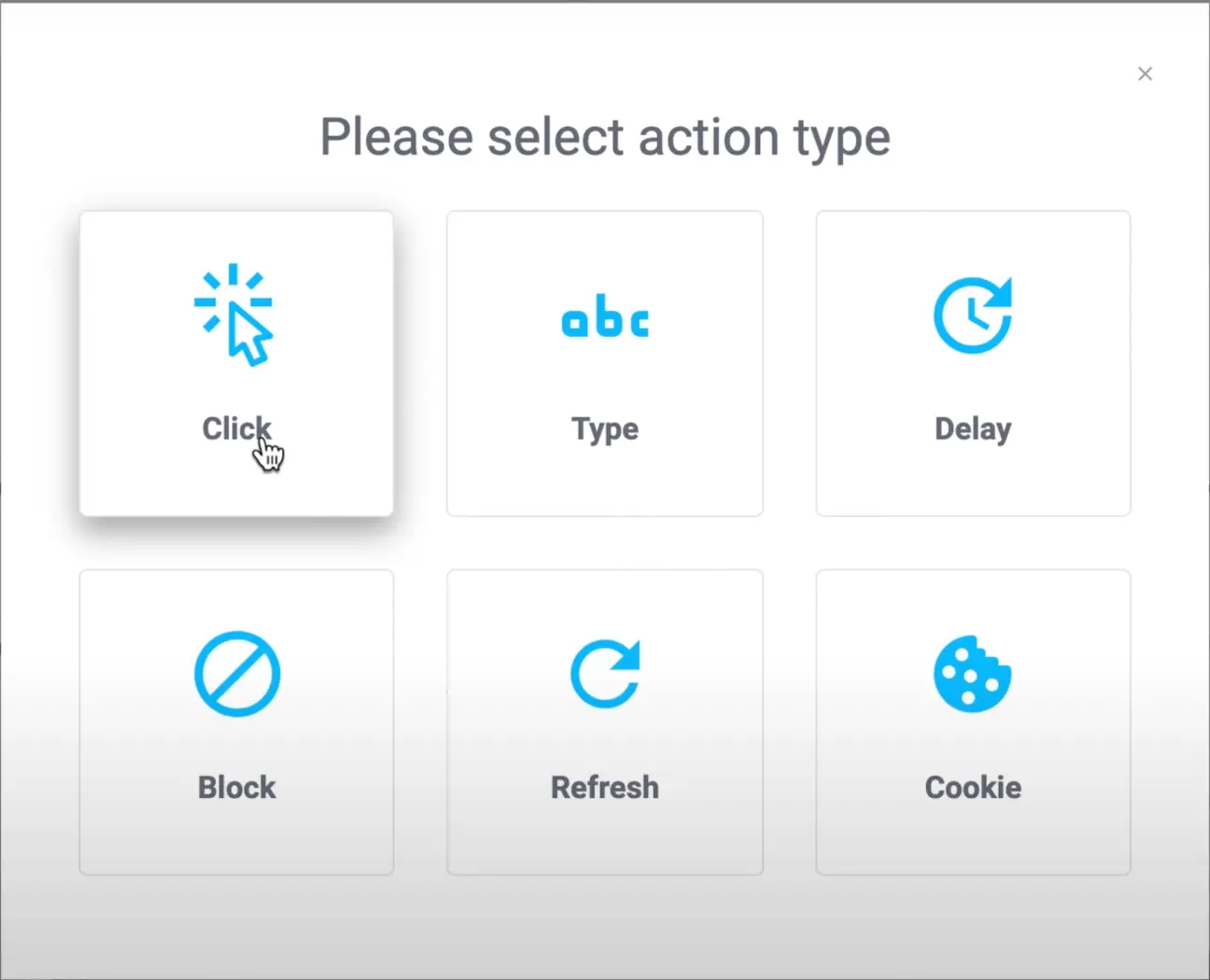

After setting up the trigger, you’ll be asked to select an action type.

And, yeah, that’s pretty much it! Now, you can monitor all your links from your dashboard.

You’ll also get a neat breakdown of your data in charts, which makes it easier to read.

Remember we talked about APIs and about how they could be useful for website monitoring?

Well, Hexowatch has an API too. You can go through the documentation and use website monitoring (scraping) as a trigger in automation tools or integrate it directly with your software.

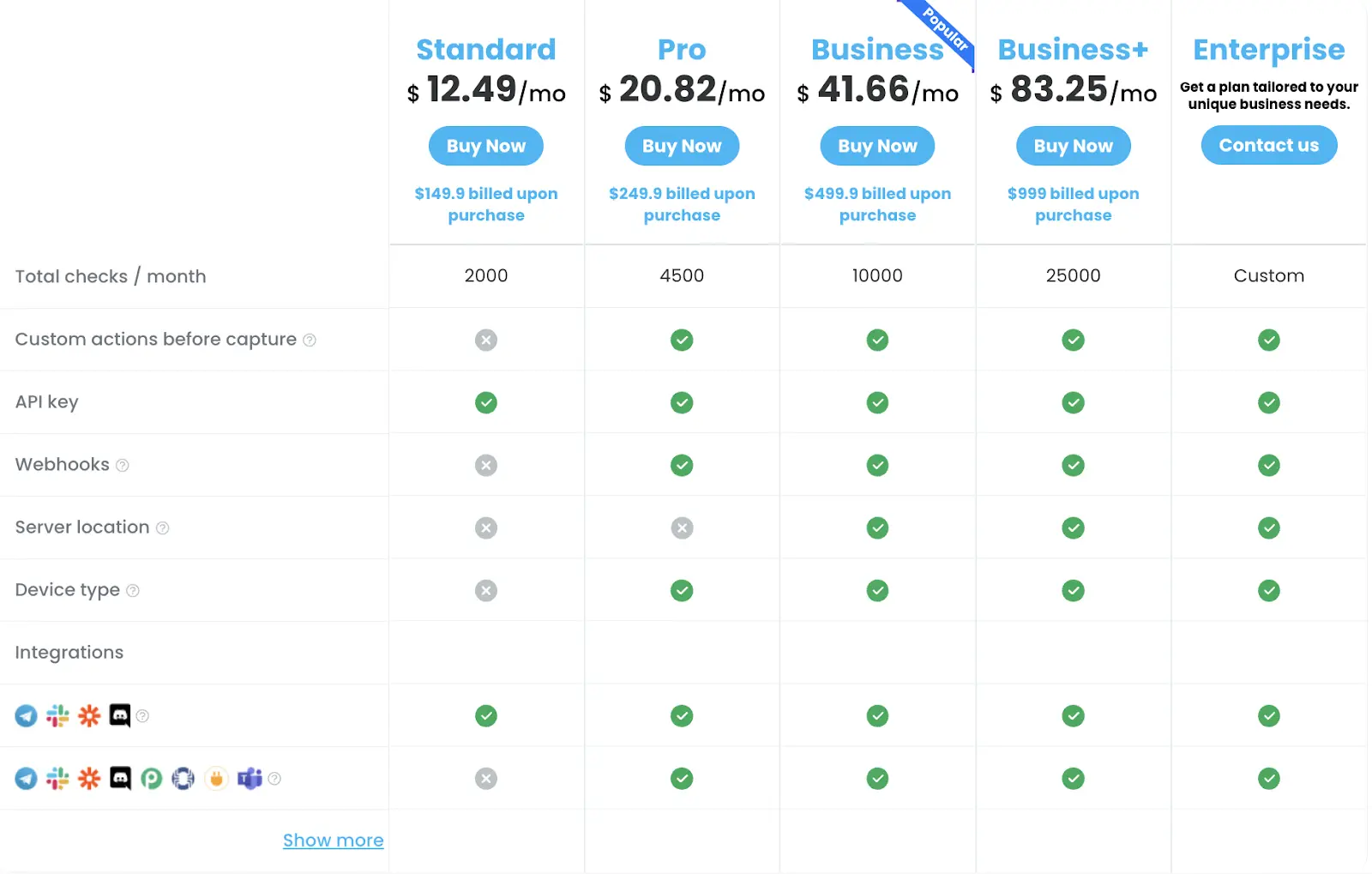

If you’re curious, above are all the paid plans they offer.

Overall, Hexowatch is a great option to look into. It’s designed in such a way that even if you only want to use the basic features, you can do that easily. But then, if you want to go into the deep end and mess around with code, you can go for it!

OK, now you’ve got the hang of these web monitoring tools. You know how to set up a trigger for when a website changes, connect it to an action, and everything in between.

But how can you leverage this new know-how to save you time and gain a competitive edge?

Knowledge without action is useless. And in this section, you will learn about the most useful ways to leverage website monitoring tools to your advantage.

Website monitoring tools may have their limitations, but the ways you can use them are practically unlimited. In that respect, they remind me of LEGO bricks. Did you know that LEGO once calculated 102,981,500 ways to combine six eight-stud bricks, and that a later computer count put the figure at 915,103,765?

It’s your turn to imagine creative ways to use website monitoring to your advantage.

Hopefully, this list of examples helped you wrap your head around what you can do with these tools. Share your use case with us in our automation community.

If you were barely familiar with web scraping tools before reading this article, what you’ve just read probably covers most of what you need to know. However, there may still be some gaps in your knowledge, so here are answers to a few additional questions you may be curious about.

Yes, scraping can get you blocked. As it turns out, companies often don’t want users to scrape data from their websites, so some have put measures in place to prevent it.

The most obvious way they do this is by limiting requests and connections. Visit too many pages at once and, BOOM, your account is disabled.

Fortunately, the risk is lower with a tool like Bardeen, since it runs directly in your browser from your local IP address. Still, I’d recommend using all of these tools with caution.
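If you build your own monitor, two simple habits lower the risk of being blocked: honor the site's robots.txt and space out your requests. The sketch below uses Python's built-in `urllib.robotparser` plus a small throttle; the user agent name and intervals you pass in are your own choice, and staying within robots.txt doesn't guarantee a site won't rate-limit you.

```python
import time
import urllib.robotparser
from typing import List, Optional


def load_robots(lines: List[str]) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt content (in practice, fetched from https://<site>/robots.txt)."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(lines)
    return parser


def seconds_to_wait(last_request: Optional[float], now: float, min_interval: float) -> float:
    """How long to pause so requests to one site are at least min_interval seconds apart."""
    if last_request is None:
        return 0.0
    return max(0.0, min_interval - (now - last_request))


class Throttle:
    """Blocks just long enough to keep a polite gap between requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self.last_request: Optional[float] = None

    def wait(self) -> None:
        delay = seconds_to_wait(self.last_request, time.monotonic(), self.min_interval)
        if delay:
            time.sleep(delay)
        self.last_request = time.monotonic()
```

A natural choice is to check `can_fetch` before every request and build the throttle from the site's `crawl_delay` when it declares one.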

I wrote this article with simplicity as my goal, focusing on the easiest ways to monitor website changes without working with complicated code. However, if you know how to code, great!

Using open-source frameworks like Frontera, PySpider, and Scrapy provides extra flexibility and opens up many more options. I won’t go into the technical details here, but you can pretty much do anything you want as long as you’re willing to put in the time and effort necessary.

Web scraping and SEO are a match made in heaven. Although this article covers web scrapers in the context of website monitoring and saving time, tools like Screaming Frog and ScrapeBox are designed to help you boost your site’s ranking.

Screaming Frog can help you with everything from collecting keyword results and finding duplicate content to extracting data for guest post opportunities. ScrapeBox, on the other hand, markets itself as “The Swiss Army Knife of SEO” and packs a wide array of features too. Overall, the repetitive nature of web scraping can be an asset in SEO, so don’t be afraid to try these tools in your SEO workflows.
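As a small illustration of the duplicate-content idea, the sketch below groups pages whose normalized text is identical by hashing it. It only catches exact duplicates after whitespace and case normalization, it assumes the page texts have already been scraped, and it isn't necessarily how Screaming Frog or ScrapeBox do it.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: Dict[str, str]) -> List[List[str]]:
    """Return groups of URLs whose normalized text is identical."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```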

Setting up your first web scraping automation can seem overwhelming, but once you follow through with it, it can save you a ton of time in the long run. It takes just a few minutes if you follow the instructions in this article. And once you’re familiar with the process, you’re free to create as many website trackers as you want!

That said, it’s best to start with just one, and I hope you’ve created your first web scraper automation today.

Next, check out how you can scrape website data without code directly to Google Sheets, Notion, Airtable, and other tools.

