Whether browser-based or cloud-based, web scraping tools can be useful for everyone, from small businesses to large organizations.

AI web scraping tools can support a wide variety of business functions, including sales prospecting, price monitoring, market research, LinkedIn automation, and candidate sourcing. But manual data scraping eats up a lot of time and is prone to inaccuracies.

If you’ve ever spent hours on a large-scale data scraping project, you know what I mean. Luckily, we have Artificial Intelligence (AI).

If you’re new to the whole AI web scraping game, it can be a pain to find the tool that fits your requirements. Cloud? Browser? API? Technical jargon like this can send your head spinning.

Data collected by PricewaterhouseCoopers (PwC) shows us that even basic AI capabilities can give teams 30-40% of their time back.

So, are you ready to harness the awesome power of AI? In this article, we’ll clear up any confusion about AI data scraping tools, walk through eight of the top AI web scraping tools with the pros and cons of each, and show how to leverage them in your workflow.

A web scraper is a software tool or script that automatically extracts data from websites by fetching and parsing their HTML content. Businesses use techniques such as LinkedIn scraping to gather and analyze large amounts of publicly available data.

But it doesn’t stop there: almost any web data can be scraped, including product prices, job listings, or contact details, which can then be stored and analyzed.
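If you’re curious what “fetching and parsing” looks like in practice, here’s a minimal sketch in Python using only the standard library (`urllib.request` to download a page, `html.parser` to read it). The `class="price"` markup and the sample HTML are hypothetical; every real site has its own structure, and this approach only sees the raw HTML, not content that JavaScript adds later.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class PriceParser(HTMLParser):
    """Collects the text of every element whose class list includes "price".

    Simplification: any closing tag ends the current price, so this assumes
    price elements hold plain text with no nested tags (hypothetical markup).
    """

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def fetch_html(url: str) -> str:
    """Download a page's raw HTML (no JavaScript is executed)."""
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def extract_prices(html: str) -> list[str]:
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


sample = ('<ul><li class="item"><span class="price">$19.99</span></li>'
          '<li><span class="price sale">$5.00</span></li></ul>')
print(extract_prices(sample))  # ['$19.99', '$5.00']
```

To scrape a live page, you’d call `extract_prices(fetch_html(url))` on a URL you’re permitted to scrape.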

Web scrapers work by…

When using a web scraper, it’s important to comply with legal and ethical guidelines to avoid violating website terms of service or data privacy laws.

AI web scraping is the automated extraction of data from websites using AI-based methods and tools, whether for sales prospecting or other data-intensive tasks.

Unlike traditional web scraping, which relies on predefined selectors that isolate the data you want to extract, AI web scraping uses artificial intelligence algorithms that can adjust themselves to handle dynamic websites.

This approach addresses the limitations of manual or purely no-code scraping techniques, which is why an AI web scraping tool is often much more effective.

AI scraping tools are designed to navigate through web pages, identify and extract data, and adapt to changes in website layouts without human intervention.
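To see why fixed selectors are brittle, consider the sketch below. It is not machine learning, just a hypothetical fallback heuristic: one function looks for a hardcoded class name, the other looks for the text that follows a visible “Price” label. When the site’s markup changes, the first breaks and the second keeps working. The HTML snippets, class names, and label are made up for illustration; AI-based tools rely on far richer signals than this.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not touch the stack.
VOID_TAGS = {"br", "img", "input", "meta", "link", "hr"}


class TextNodes(HTMLParser):
    """Flattens HTML into (class attribute of the enclosing tag, text) pairs."""

    def __init__(self):
        super().__init__()
        self.nodes = []
        self._classes = []  # stack of class attributes for currently open tags

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self._classes.append(dict(attrs).get("class") or "")

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._classes:
            self._classes.pop()

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.nodes.append((self._classes[-1] if self._classes else "", text))


def parse(html):
    parser = TextNodes()
    parser.feed(html)
    return parser.nodes


def price_by_selector(nodes, css_class="product-price"):
    """Traditional approach: depends on one exact, hardcoded class name."""
    for classes, text in nodes:
        if css_class in classes.split():
            return text
    return None


def price_by_label(nodes, label="Price"):
    """Fallback heuristic: return the text that follows a visible label."""
    for (_, text), (_, following) in zip(nodes, nodes[1:]):
        if text.rstrip(":").strip().lower() == label.lower():
            return following
    return None


old_layout = '<div><span>Price:</span><span class="product-price">$20</span></div>'
new_layout = '<div><b>Price</b><em class="pdp__amount">$20</em></div>'

for html in (old_layout, new_layout):
    nodes = parse(html)
    print(price_by_selector(nodes), price_by_label(nodes))
# $20 $20
# None $20
```

The label heuristic has failure modes of its own (for example, if the label is renamed), which is the kind of gap ML-based extraction aims to close.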

There are several types of website scrapers available, each designed for specific needs and levels of complexity, from simple one-time extractions to large-scale, automated operations.

Top web scraping tools will typically belong to one of the following three categories:

Browser-based web scrapers operate from browsers like Chrome, Firefox, Edge, and Safari. They run locally, which means your data stays with you for better privacy.

However, because they operate from your own local (residential) IP address, they are better suited to less intensive scraping operations. These tools are typically also the most user-friendly.

Cloud-based scrapers run on a separate cloud server, which keeps your local IP from getting blocked, and they typically use IP rotation to spread requests across many addresses.

They’re usually more expensive but provide a good option if you require high-volume scraping operations. Cloud-based AI web scraping tools are available as web-based apps or downloadable local software on your desktop.
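IP rotation usually just means sending successive requests through different proxy servers. Here is a minimal round-robin sketch using `itertools.cycle` and `urllib.request.ProxyHandler`. The proxy addresses are placeholders from a documentation-only IP range, not real servers, and `RotatingOpener` is a hypothetical helper; commercial cloud scrapers typically handle rotation for you.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Placeholder proxy endpoints (RFC 5737 documentation addresses), not real servers.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class RotatingOpener:
    """Hands out a urllib opener bound to the next proxy, in round-robin order."""

    def __init__(self, proxies):
        self._pool = cycle(proxies)

    def next_opener(self):
        proxy = next(self._pool)
        handler = ProxyHandler({"http": proxy, "https": proxy})
        return proxy, build_opener(handler)


rotator = RotatingOpener(PROXIES)
for _ in range(4):
    proxy, opener = rotator.next_opener()
    print("next request would go through", proxy)
    # opener.open("https://example.com/page", timeout=10) would actually send it
```

The fourth request wraps back around to the first proxy; real setups often add health checks so a blocked proxy is skipped.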

If you can’t decide between browser or cloud-based web scraping tools, why not go for a hybrid scraper? These offer varied scraping features and are often considered the best screen scraping tools due to their flexibility.

Your choice depends on the complexity of the website you are scraping, data volume, and level of customization needed. You can create your own browser-based scraping extension if you know how to code, but it’s much easier to just use Bardeen’s AI scraping agents!

Most web scrapers today use AI to enhance and improve the web scraping process. Unlike traditional web scraping, which relies on hardcoded rules, AI web scraping tools use machine learning and natural language processing (NLP) to interpret, clean, and analyze the data after it is collected.

AI web scraping tools are also more efficient and more adaptable to dynamic websites. They can learn and adjust when website structures change, reducing the need for frequent reconfiguration.

Now, let’s talk about what makes AI web scraping a great solution for your business.

Want to know what AI web scrapers do? You can use an AI data scraper tool to gain the following benefits:

AI web scraper tools automatically collect web data with just a few clicks from you, eliminating the need for extensive manual input.

An AI web scraping tool is ideal for modern sites because it can adjust to changes in website structure, helping ensure consistent data collection.

These tools are capable of extracting various types of data, including text, images, and videos. For instance, you can use price scraping tools to gather data on product costs and perform market research.

Let’s also consider one of the key RevOps and sales use cases: lead generation. With an AI scraper, you can quickly build sales prospect lists and start outreach in minutes instead of days.

You can then export the collected information in multiple formats, such as JSON, Excel, and CSV, which makes it easier to access and analyze.
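Exporting is usually the easy part. As a minimal sketch with Python’s standard `json` and `csv` modules, the same scraped rows can be written to both formats, and Excel can open the CSV directly. The record fields below are hypothetical.

```python
import csv
import io
import json

# Hypothetical scraped lead records.
rows = [
    {"company": "Acme Corp", "contact": "Jane Doe", "title": "VP Sales"},
    {"company": "Globex", "contact": "John Roe", "title": "Head of RevOps"},
]


def to_json(records) -> str:
    """Serialize records as a pretty-printed JSON array."""
    return json.dumps(records, indent=2, ensure_ascii=False)


def to_csv(records) -> str:
    """Serialize records as CSV; column order comes from the first record's keys."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


print(to_csv(rows))

# Writing to disk works the same way; newline="" keeps Python from translating
# the \r\n line endings the csv module already wrote:
# with open("leads.csv", "w", newline="", encoding="utf-8") as f:
#     f.write(to_csv(rows))
```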

AI web scraping tools are simply more efficient and accurate than traditional web scrapers. Studies suggest that AI-powered web scraping tools can be 30% to 40% faster and reach accuracy rates of up to 99.5%.

What is the best tool to scrape a website? That depends on your needs, technical skills, and the complexity of the operation.

For example, using AI to scrape complex websites or large volumes of data requires a more sophisticated tool.

Here are our recommended steps for choosing the best scraping tools:

Different AI scraping tools are designed for different levels of complexity, scalability, and automation.

Always assess your requirements first, or you may end up with a tool that is either too basic or too advanced for what you need.

For example, static HTML pages can be scraped with a lightweight tool like BeautifulSoup. But for JavaScript-heavy content, you will need a browser automation tool like Selenium or Playwright.
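Not sure which camp a page falls into? A quick check is whether the data you can see in your browser is actually present in the page source you download. The function below is a rough, hypothetical heuristic (it is not part of BeautifulSoup, Selenium, or Playwright), and the 50-word threshold is an arbitrary assumption: if the text is there, a lightweight parser will do; if it’s missing and the page is mostly an empty shell with scripts, you likely need a browser automation tool.

```python
import re


def suggest_tool(raw_html: str, expected_text: str) -> str:
    """Guess whether a page needs a headless browser, given its downloaded source.

    expected_text is something you can see on the rendered page, such as a price.
    """
    if expected_text in raw_html:
        return "static"  # already in the source: a lightweight HTML parser is enough
    scripts = len(re.findall(r"<script\b", raw_html, flags=re.IGNORECASE))
    # Strip scripts and styles, then all remaining tags, and count visible words.
    no_code = re.sub(r"<(script|style)\b.*?</\1\s*>", " ", raw_html,
                     flags=re.IGNORECASE | re.DOTALL)
    words = re.sub(r"<[^>]+>", " ", no_code).split()
    if scripts and len(words) < 50:
        return "browser"  # near-empty shell plus scripts: likely rendered by JavaScript
    return "unclear"  # missing for another reason (login wall, blocking, page variant)


static_page = '<html><body><h1>Widget</h1><p class="price">$19.99</p></body></html>'
app_shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

print(suggest_tool(static_page, "$19.99"))  # static
print(suggest_tool(app_shell, "$19.99"))    # browser
```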

Additionally, factors like data volume, frequency of scraping, and anti-scraping measures play a key role.

If you plan to scrape large amounts of data frequently, look for a scalable solution that supports proxy rotation and automation, such as the open-source Scrapy framework or a cloud platform like Apify.

Different websites have different structures, technologies, and anti-scraping measures that can make it difficult to extract data.

Some sites implement CAPTCHAs, IP blocking, or dynamic loading, making it necessary to use tools with proxy rotation, headless browsing, and anti-detection mechanisms.

Understanding these complexities ensures you select the most effective and efficient scraping solution, saving time and resources while maintaining compliance with ethical and legal standards.

The best website scraping software will depend on the type of scraper you need:

Browser-based AI web scraping tools are best suited for simple, HTML-based websites where the content is directly available in the page source. Use these for small-scale projects or if your team lacks coding experience.

Cloud-based AI web scraping tools are needed for websites that load content using JavaScript or AJAX. These are also better if you need frequent data updates or a tool with automation and cloud-based capabilities.

Without native integrations, scraped data may require manual handling, slowing down workflows and reducing efficiency.

Tools that do not integrate easily will also require input from IT or development teams, which can slow down your implementation roadmap.

The web scrapers on this list were chosen because they cover a wide range of skill levels and budgets. It doesn’t matter if this is your first data scraping job or if you are looking for a more professional solution. There is something here for everybody.

But this isn’t just my opinion. I scoured several review sites, including G2 and Capterra, to find out what real users think about these tools. So, without further ado, these are my top 8 AI web scraping tools:

| Tool | Type of scraper | Available on |
|---|---|---|
| Bardeen Scraper | Browser | Chrome |
| Webscraper.io | Hybrid | Chrome, Firefox |
| Instant Data Scraper | Browser | Chrome, Edge |
| ParseHub | Cloud | Windows, Mac, Linux |
| Octoparse | Hybrid | Windows, Mac |
| Byteline | Cloud | Chrome |
| Grepsr | Cloud | Chrome |
| ScrapeStorm | Cloud | Windows, Mac, Linux |

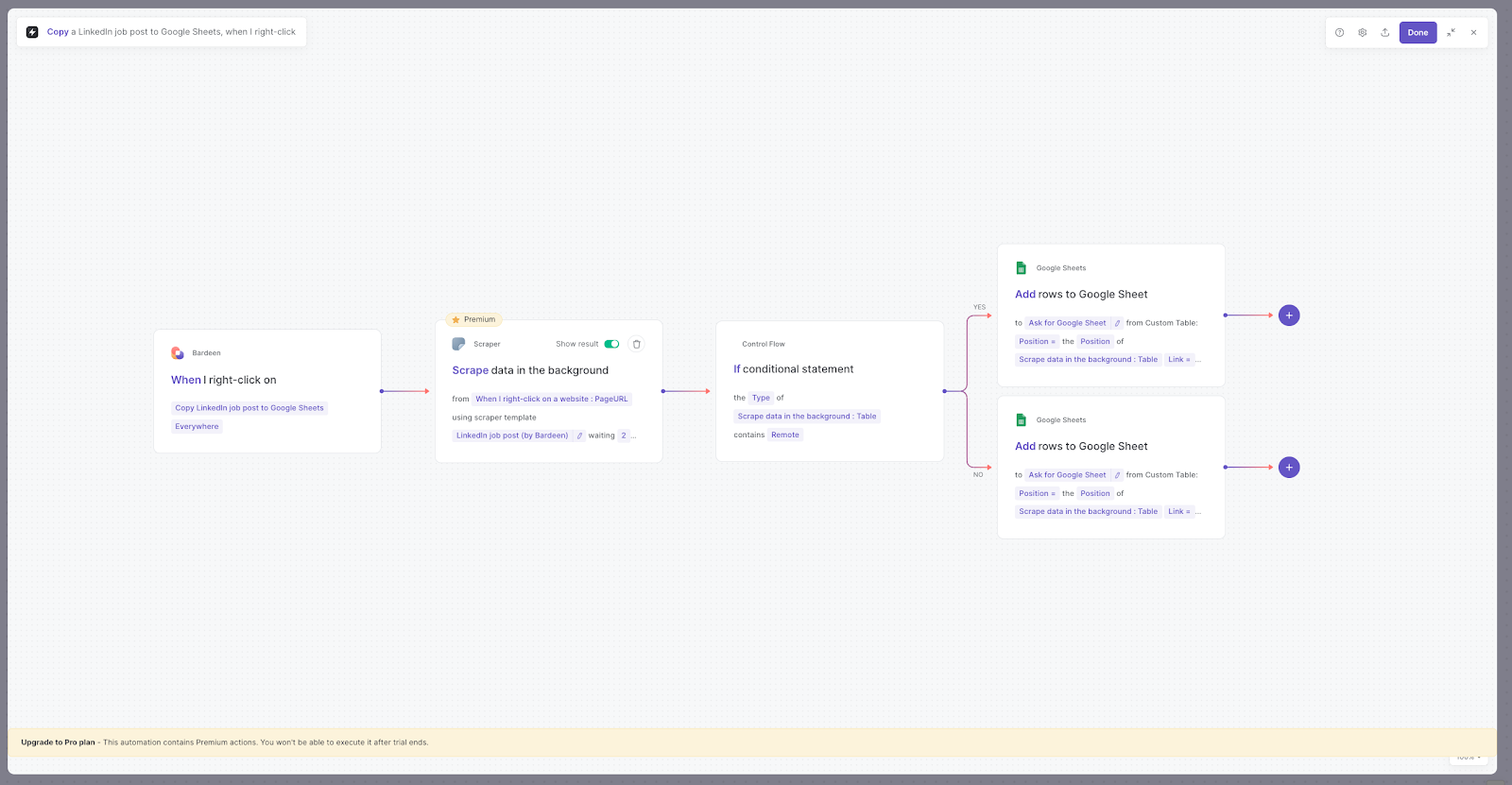

With Bardeen’s web scraping software, you can retrieve the structured data you want and then send it to various web apps and integrations automatically without adding code.

Here are a few examples of what you can do with Bardeen:


Finding relevant leads on LinkedIn Sales Navigator takes time. This playbook extracts people search results from the current page into a structured format you can use for outreach.


Finding and tracking relevant Facebook pages is time-consuming. This playbook scrapes Facebook search results, extracts key business page data, and adds it to a Google Sheet for easy analysis and outreach.


Prospecting on LinkedIn is time-consuming. This playbook extracts company data from a LinkedIn page and automatically creates a new organization in Pipedrive, complete with a note containing the scraped data.

Most AI web scraping tools are designed solely to scrape data from LinkedIn or other specific platforms. This is where Bardeen stands out from the rest.

With Bardeen, you can connect the scraped data to your sales workflows and GTM motion. Plus, you can connect to third-party apps like LinkedIn.

The Bardeen scraper tool is capable of performing tasks beyond simple web scraping. Its pagination, deep scraping, automation, and click actions features let you enrich data from a list of links, create your own scraper templates, and send data to integrated apps.

G2 users appreciate Bardeen for its user-friendly interface, highlighting its simplicity and accessibility for individuals with varying technical backgrounds. Another aspect that users value is Bardeen's versatility in automating data collection. It's particularly praised for its effectiveness in gathering account information, making it a valuable tool for sales professionals.

Users on Capterra highlight its user-friendly interface and effective automation capabilities. Some users praise its no-code interface while others mention using Bardeen to connect applications like Notion and Slack.

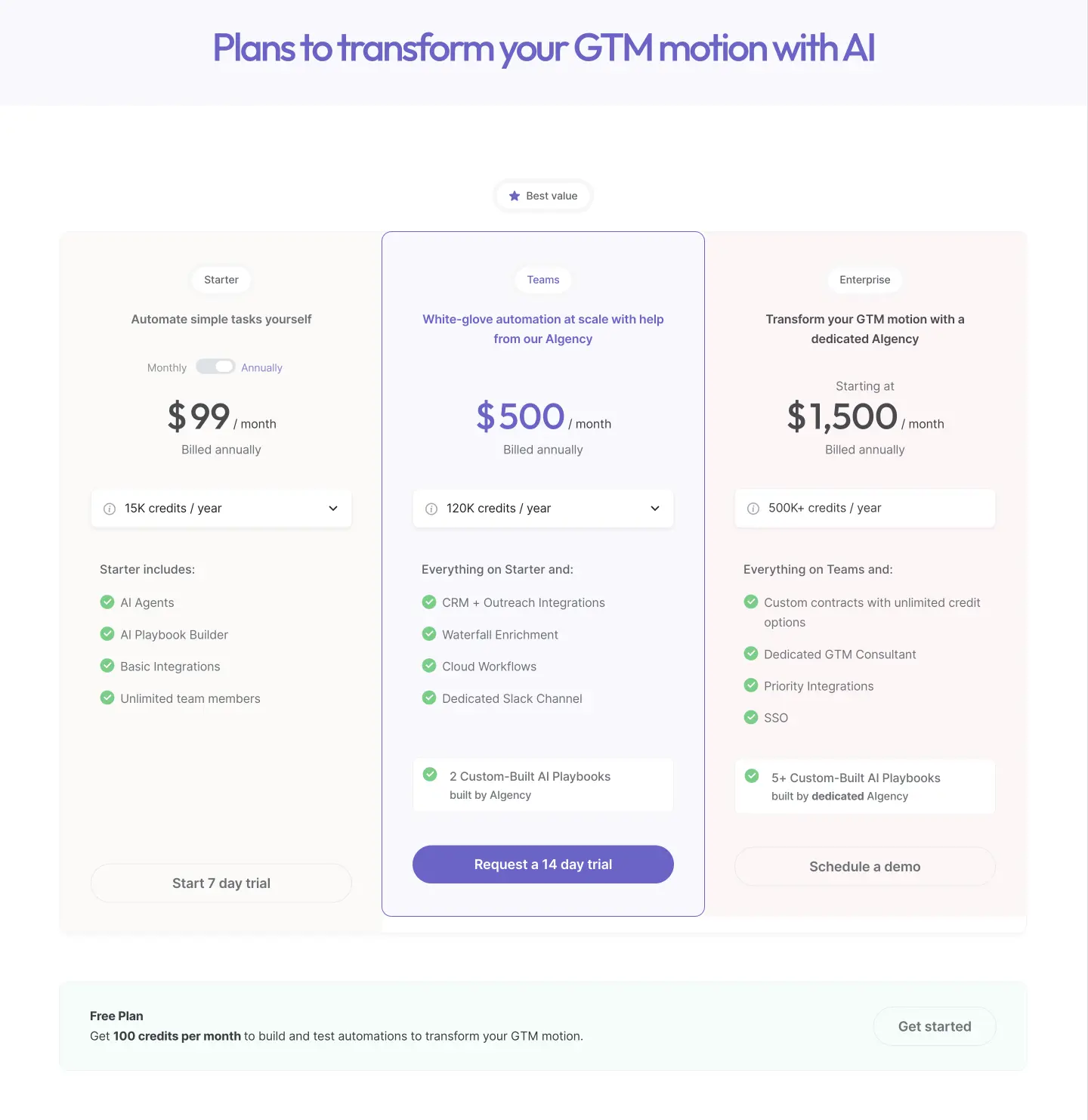

Bardeen’s pricing has been optimized for 2025 and includes the following plans:

Read why Bardeen is doubling down on AI + humans and how it can accelerate your sales cycle.

Ready to automate your workflows and maximize sales productivity? Sign up for Bardeen's free plan and experience the power of AI sales automation firsthand.

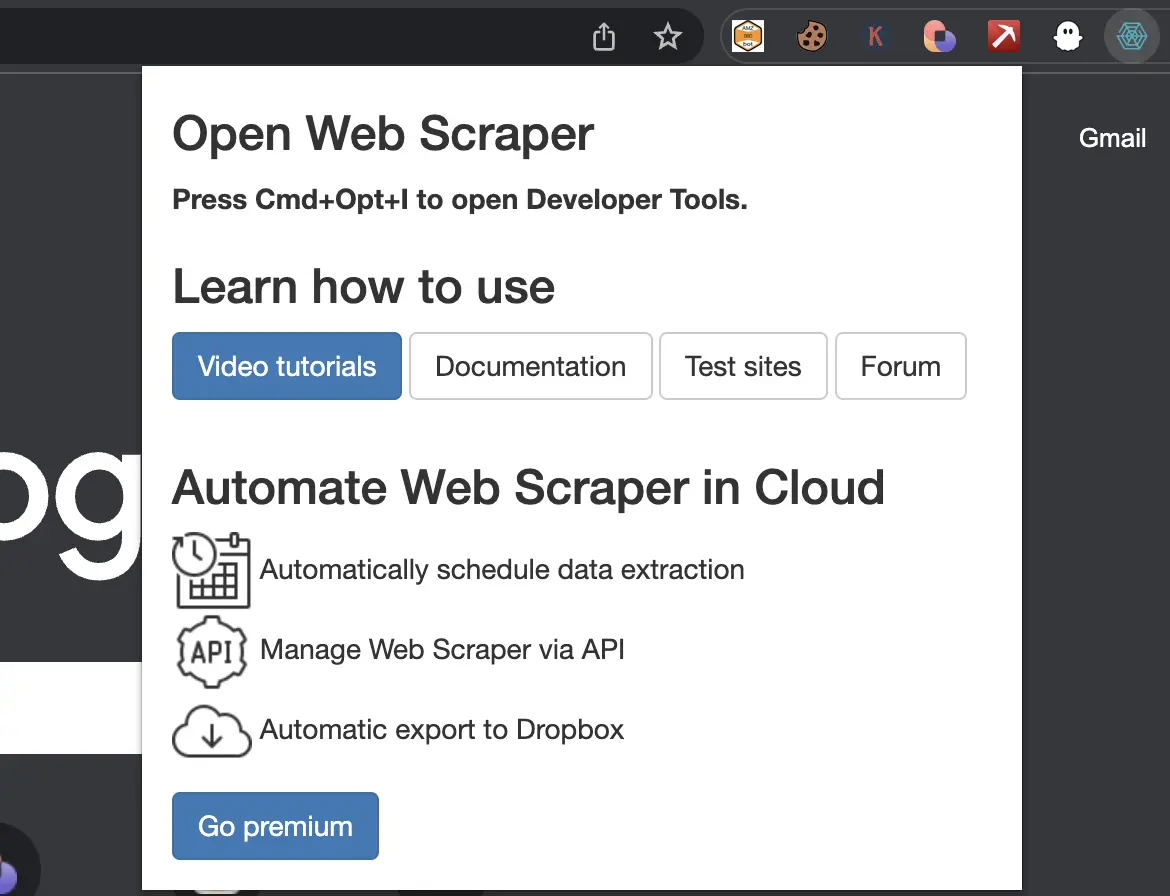

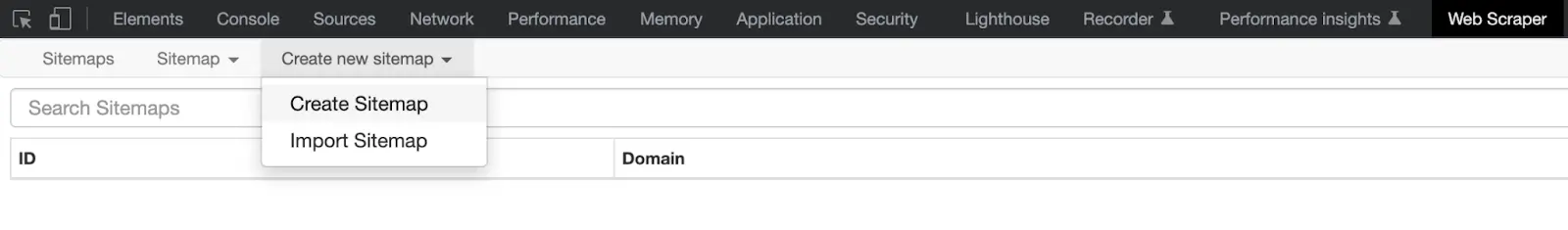

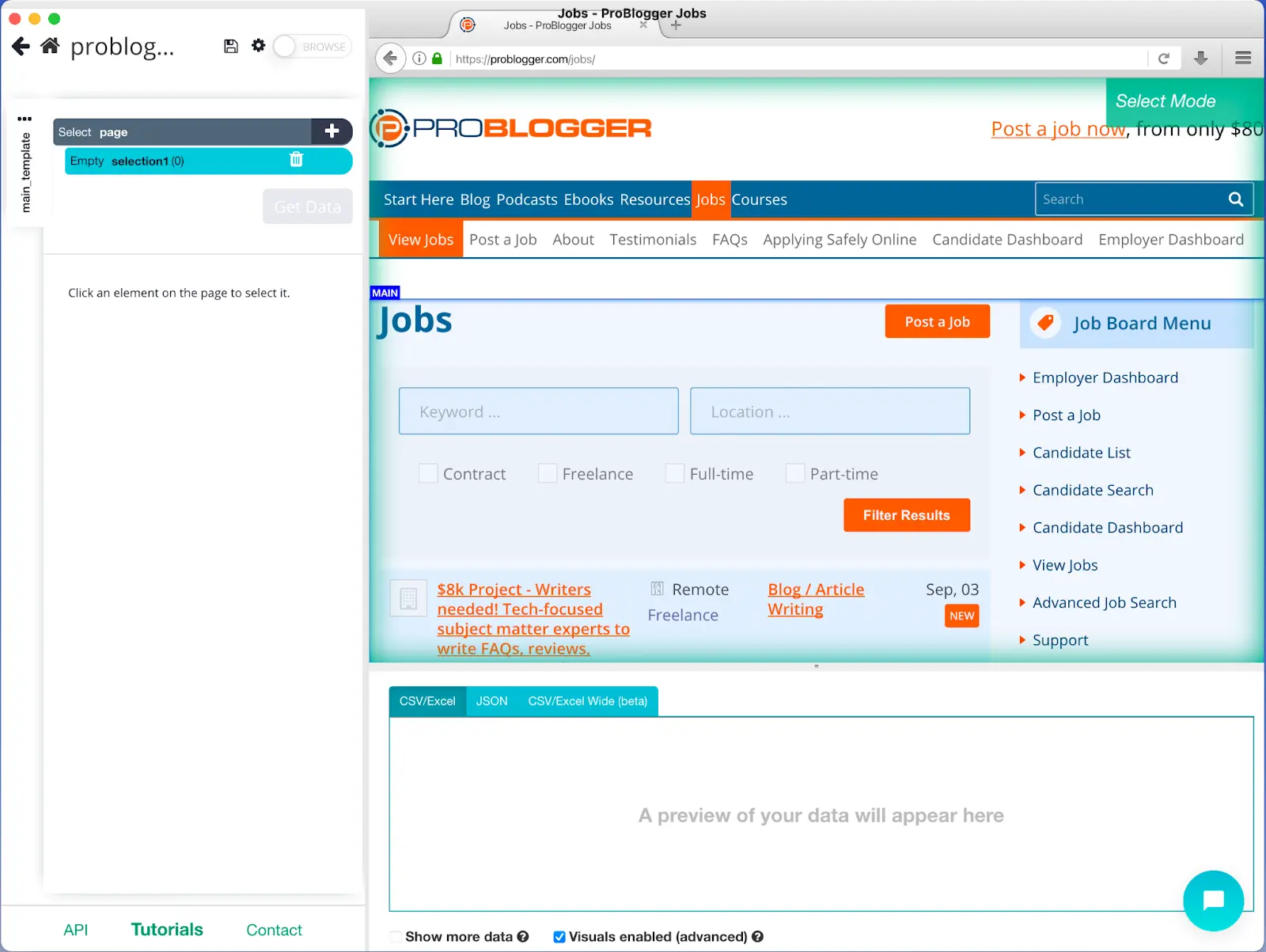

Do you have experience with web development or coding? If so, you’ll like Webscraper.io. It’s one of the best website scraping tools out there. Once installed, it appears as a panel in your browser’s Developer Tools. When you click on the extension icon, you’ll be shown this:

As you might expect, once you open this free AI web scraper in Developer Tools, you’ll find the design very no-frills. Its point-and-click interface can be a plus for some users. You can create or import a sitemap to scrape data from any website.

After specifying a name and a URL, you can add selectors to extract data. It supports text, links, images, and many more data types and will create a comprehensive web scraping library for you if you choose to scrape multiple pages for data.

Keep in mind that this is a hybrid web scraper, so it can scrape a website either from your local IP or from a server. This means it’s flexible based on your needs.

Are you just scraping a list of groups from Facebook? A browser-based scraper will do. Are you planning on scraping data from LinkedIn profiles? In that case, you’d better use the cloud version!

Besides having a scheduler and IP rotation like any other cloud scraper, Webscraper.io comes with many other options to simplify the process. For example, you can automatically export the scraped data to Dropbox, Google Sheets, or Amazon S3. You can also control the scraper programmatically through its API!

Webscraper.io may have a basic design and a learning curve for non-developers, but it’s really nifty in the long term. You can get it on Chrome and Firefox or check out the cloud version.

Users on G2 and Capterra appreciate Webscraper.io for its user-friendly design, noting that it simplifies the data extraction process without the need for coding. Reviewers on both sites also praise its ability to navigate between pages and efficiently download tables and lists in CSV format.

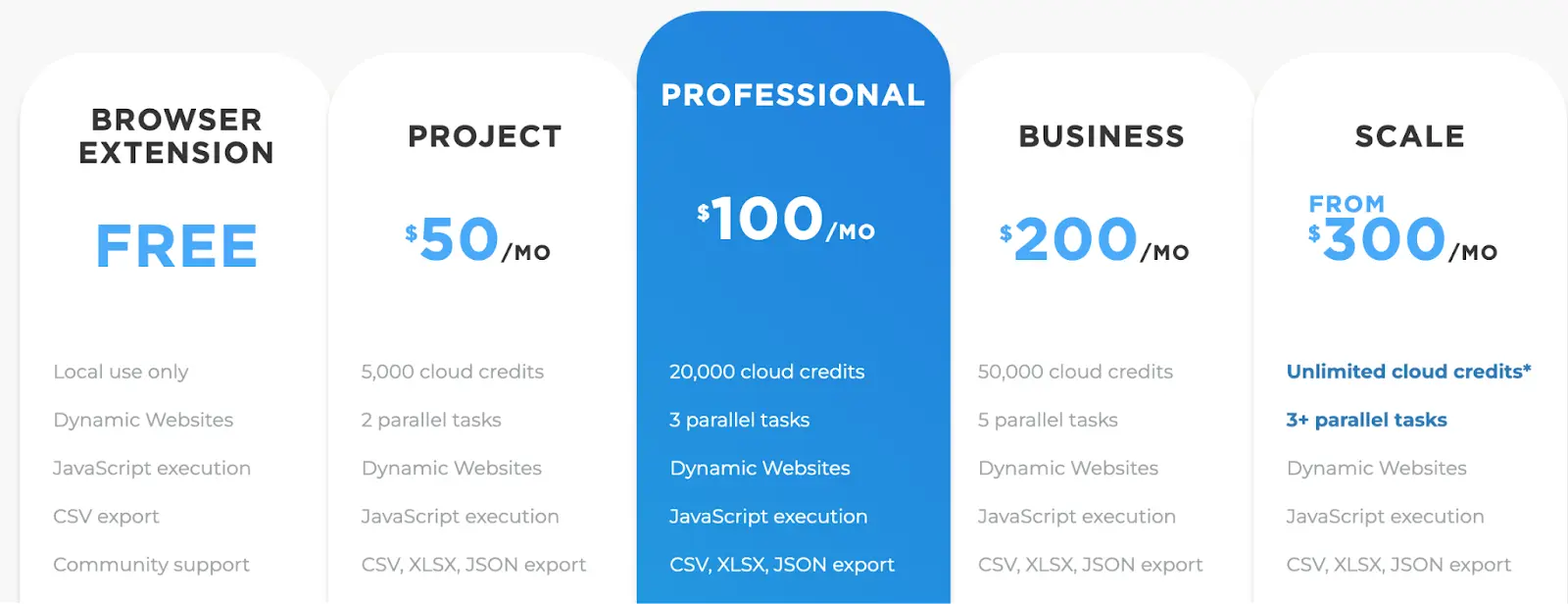

Of course, only the browser extension is free. If you want to access their servers, you’ll need to choose from their various packages. Webscraper.io plans range from $50 to $300 per month:

Most of the AI web scraping tools we’ve talked about have powerful bonus features on top of scraping. That can be useful, but it also adds complexity to the platform. If you only want to get the data from a web page, Instant Data Scraper is the way to go.

The tool is easy to use. Activate the scraper, and it’ll try to detect what you want to scrape. You can edit the scrape template if necessary.

Available for both Chrome and Edge, it’s fully browser-based and lets you download scraped data as CSV or XLSX files.

It’s completely free and takes up less than a megabyte of space. However, it has limited functionality, so it may not be the best choice for more complex scraping jobs.

Overall, Instant Data Scraper is praised for its ease of use and efficiency in handling basic web scraping tasks. It's particularly suitable for users seeking a straightforward solution for simple data extraction needs.

However, those requiring more advanced features or dealing with complex scraping scenarios may find it less accommodating.

If you want a more dedicated, professional data scraping and list crawling app, browser-based options may not be enough, and ParseHub might be the way to go. It has no browser extension, only desktop clients for Windows, Mac, and Linux.

When you open it on your computer, you’ll see a built-in browser from which you can do your AI web scraping operations.

Enter the URL of the website from which you want to extract data. After it loads, on the left side you’ll see various commands and settings. In the middle will be an interactive view of the website which you can click on to select elements.

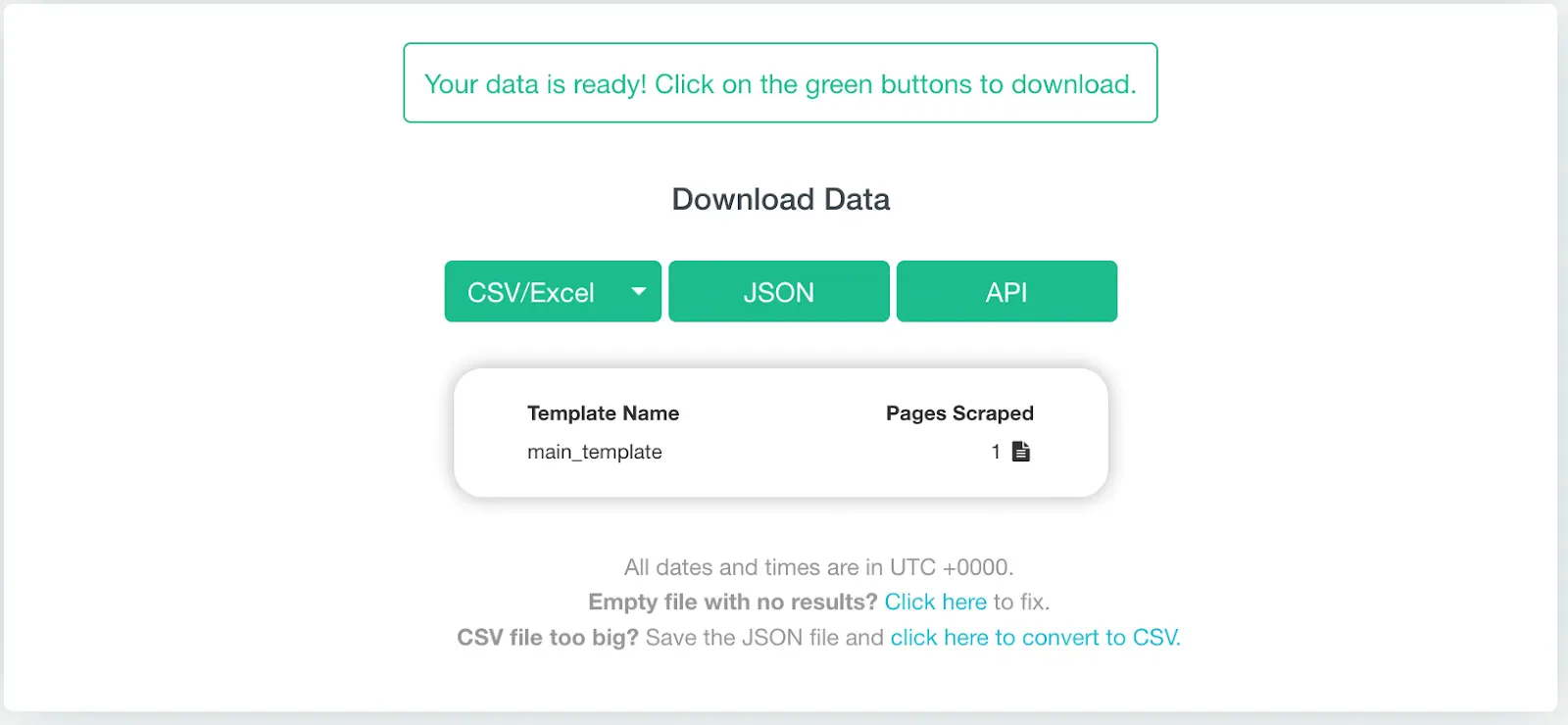

You can preview the selected data at the bottom in CSV or JSON format. Once everything is set up, you can ‘Run’ the scraping operation on ParseHub’s servers.

When the data has been scraped, you can download it as CSV/Excel or JSON, access it through an API, or import it into Google Sheets or Tableau.

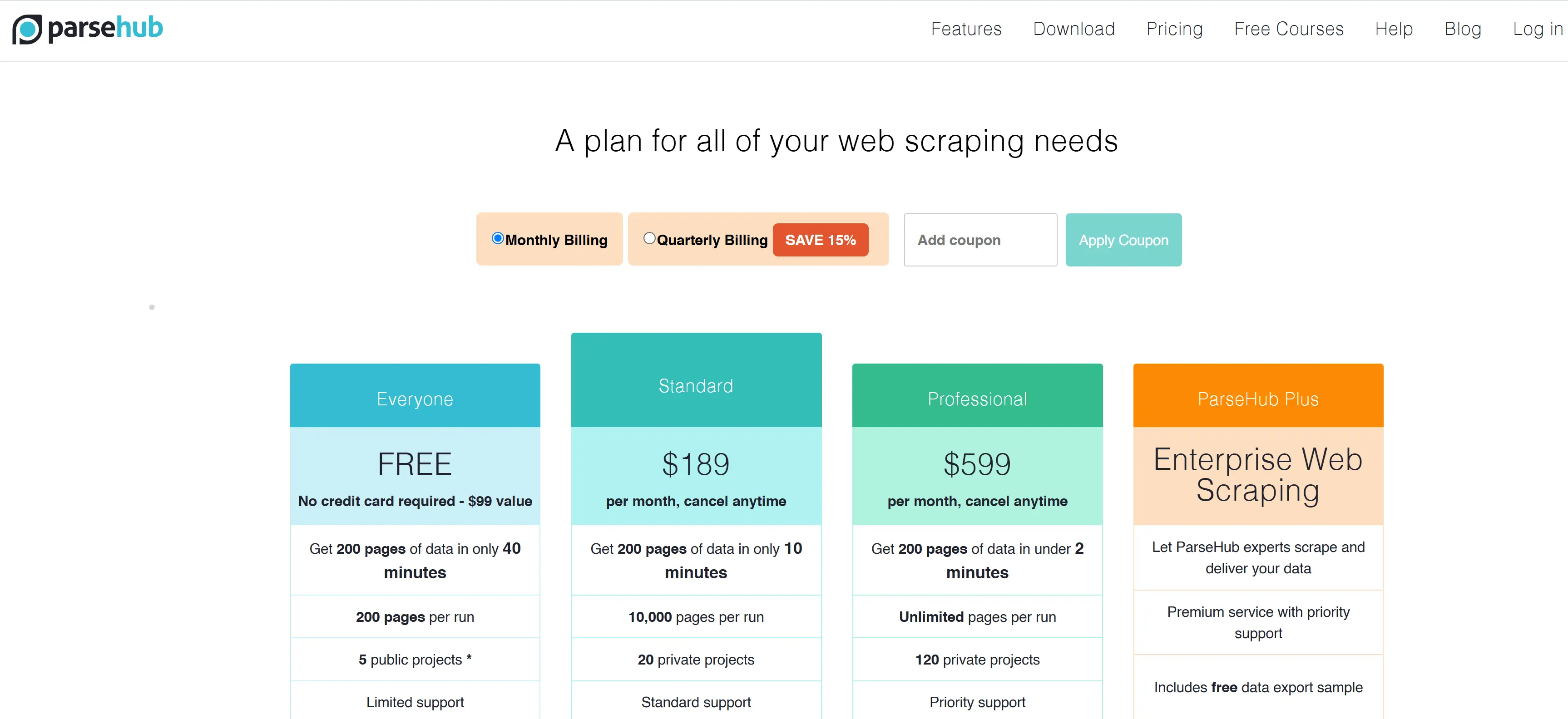

Operating exclusively from the cloud presents many benefits like IP rotation, scheduled collection, and more. Unfortunately, that extra functionality is reflected in the costs.

Users on G2 highlight its ease of use and effective data scraping capabilities. Some note ParseHub's efficiency in handling complex data extraction tasks, while others call its no-code capabilities the best part.

Users on Capterra appreciate its user-friendly interface and powerful data extraction capabilities. The tool's cross-platform availability and useful features have also been praised, as well as the excellent onboarding experience.

With the free plan, you get 200 pages per run and 5 public projects. You can opt for the Standard or Professional plan to raise those limits, at $189 and $599 per month, respectively. So, it’s definitely expensive but might be worth it for your use case.

Free trial? Free version available with 14-day data retention

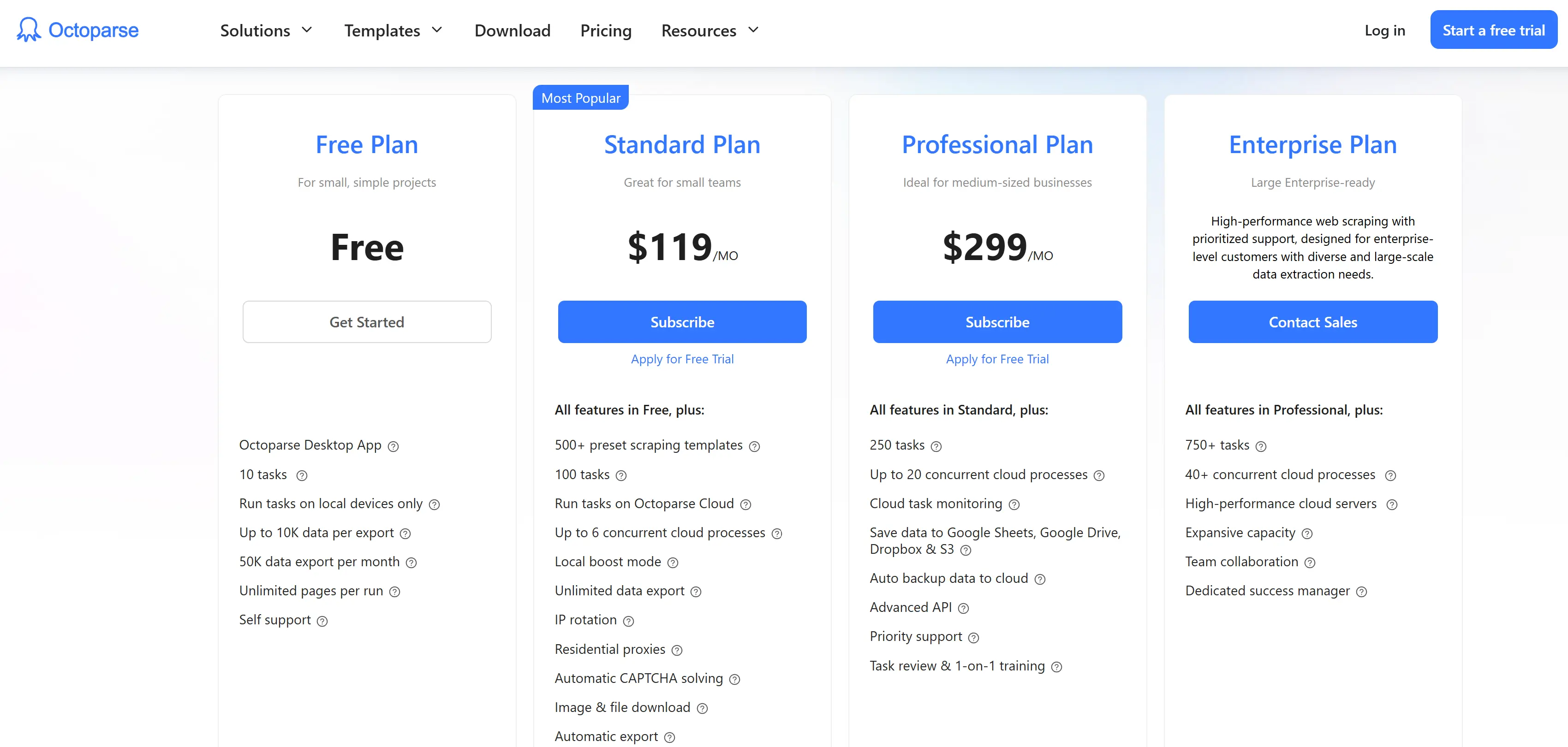

If you want something similar to ParseHub but cheaper, you’ll like Octoparse. It doesn’t have a browser extension, only desktop clients for Windows and Mac, but you can simply visit the website you want to scrape data from in the built-in browser and get started.

Cloud-based web scraping tools have many benefits, like IP rotation and scheduling, but in some cases, it also makes sense to scrape locally.

Since Octoparse is a hybrid scraper tool (it can operate both from your local IP and the cloud), you can choose to run the scraping operations from your computer itself too!

As your business grows and your requirements increase, you can also look into Octoparse’s professional data scraping service. For now, you can download the app to your computer from their website.

Users on G2 praise Octoparse for its user-friendly interface and powerful features. Many appreciate the tool's accessibility, noting that it simplifies data extraction tasks without requiring programming skills. The availability of pre-designed templates is another aspect that users find beneficial, as it streamlines the scraping process.

Users on Capterra appreciate its intuitive user interface, which allows both beginners and advanced users to quickly set up and execute scraping tasks.

Additionally, Octoparse’s customer support receives high praise, with users noting that the team is responsive and helpful when any issues arise. While users are generally satisfied, some do mention that Octoparse's pricing structure could be more affordable, particularly for those just starting out with web scraping.

Free trial? Free version available

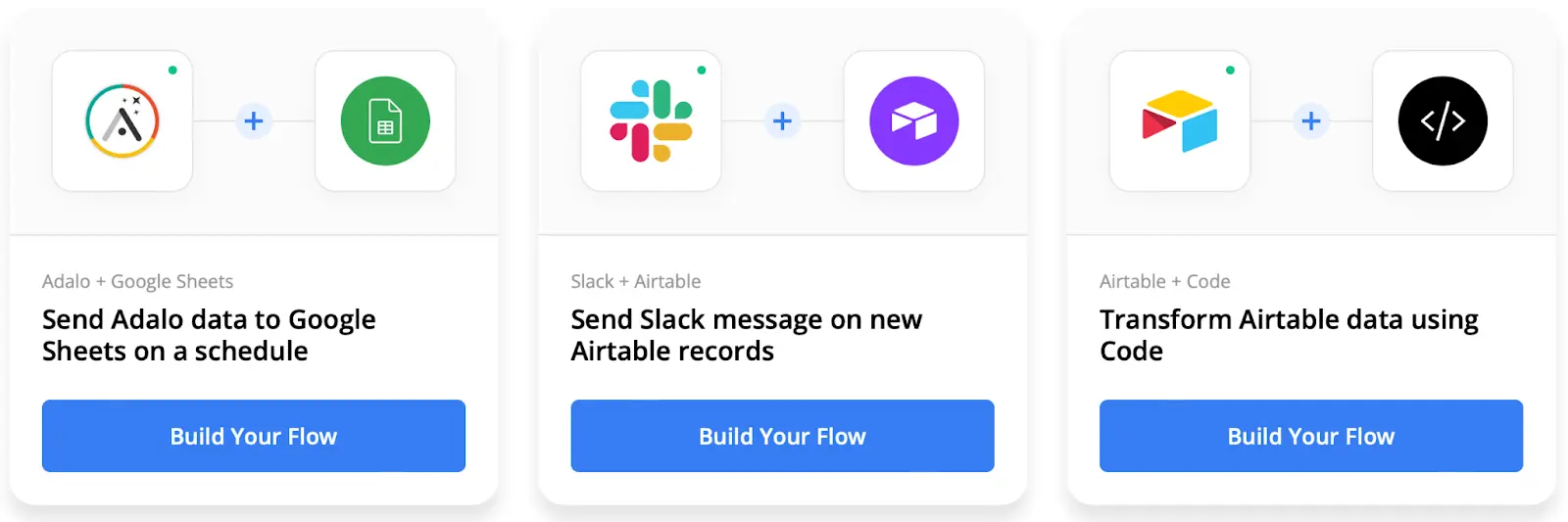

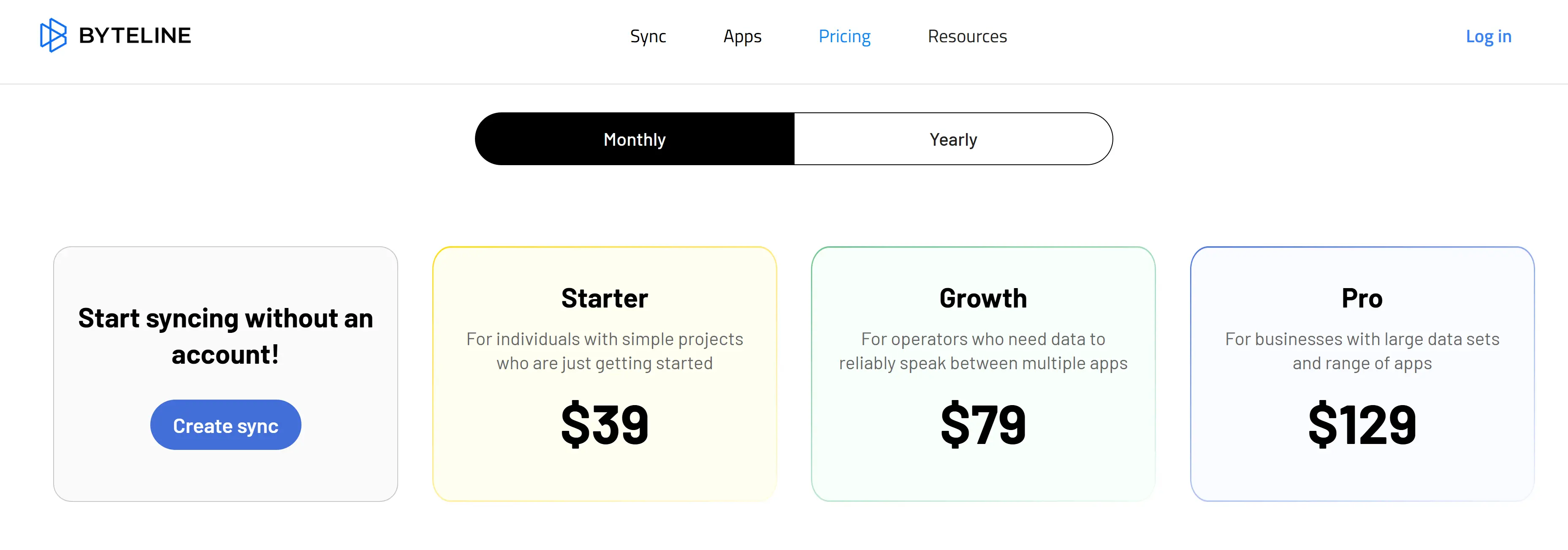

Do you want a web scraper with a stronger focus on automation rather than just plain data? Byteline operates on ‘Flows’ where you can connect various web apps. These can be triggered by an HTTP API, a scheduler, or an in-app update.

For data scraping, it lets you pick elements with the Chrome extension, but the scraping itself runs on Byteline’s servers. They also auto-rotate between residential proxies to improve reliability.

Notice how a link is copied when you select the elements? You can paste that link into the Byteline console and configure the selection further. Once that’s done, you can export the data to Airtable, Google Sheets, or any other app Byteline integrates with.

Users on Capterra appreciate Byteline for its user-friendly interface and robust features. Others enjoy the ease of use and exceptional customer support, noting that the support team is quick to assist or resolve issues directly.

Overall, user reviews highlight the platform's ability to connect seamlessly with various data sources and automate data extraction processes.

Additionally, Byteline's integration with applications like Airtable and Webflow CMS is also praised. However, some users have noted that while the platform offers powerful features, there may be a learning curve.

Love it already? Time to talk about pricing.

If you’re new to this whole data web scraping thing and need a tool that can guide you through the process, you’ll love Grepsr! It works similarly to all the other web scraper tools we’ve looked at so far.

Go to the website you want to scrape data from and start clicking on elements. When you’re doing it for the first time, Grepsr will define the steps for you and make sure you understand the process.

Because Grepsr is a cloud-based scraper, you can save the data you’ve collected to storage platforms like Dropbox, Google Drive, Amazon S3, and even FTP.

Tools like this are ideal if you only want to set up a scraper once and then automate it: you can use the built-in scheduler and define an extraction timeline to get the most up-to-date data.

Unfortunately, the scheduler is only available with the Basic and Advanced plans. The Free plan is fairly generous by itself, but the paid plans are there if you have higher requirements.

Grepsr also saves your scraped data to its own servers. With the Free plan, your data is saved for 30 days, and that goes up to 60 and 90 days for the two paid plans. Similar to other cloud-based web scraping tools, they also offer a personalized data service, for both data acquisition and integration with third-party platforms.

All in all, Grepsr is a good cloud-based web scraping tool. It’s beginner-friendly but also has the high-tech features we’ve come to expect.

Users on G2 highlight Grepsr for its professionalism and attention to detail. The platform's user-friendly browser extension has also received praise. Overall, users commend Grepsr for its reliable data extraction services, efficient customer support, and tools that simplify the web scraping process.

Users on Capterra have shared positive experiences with Grepsr, particularly highlighting its user-friendly interface and responsive customer support. Additionally, the platform's efficiency in delivering data extraction services is appreciated, with users mentioning timely and high-quality outputs. However, some users have suggested improvements, such as enhancing the invoicing system for better clarity and addressing occasional data inconsistencies.

Free trial? No free trial

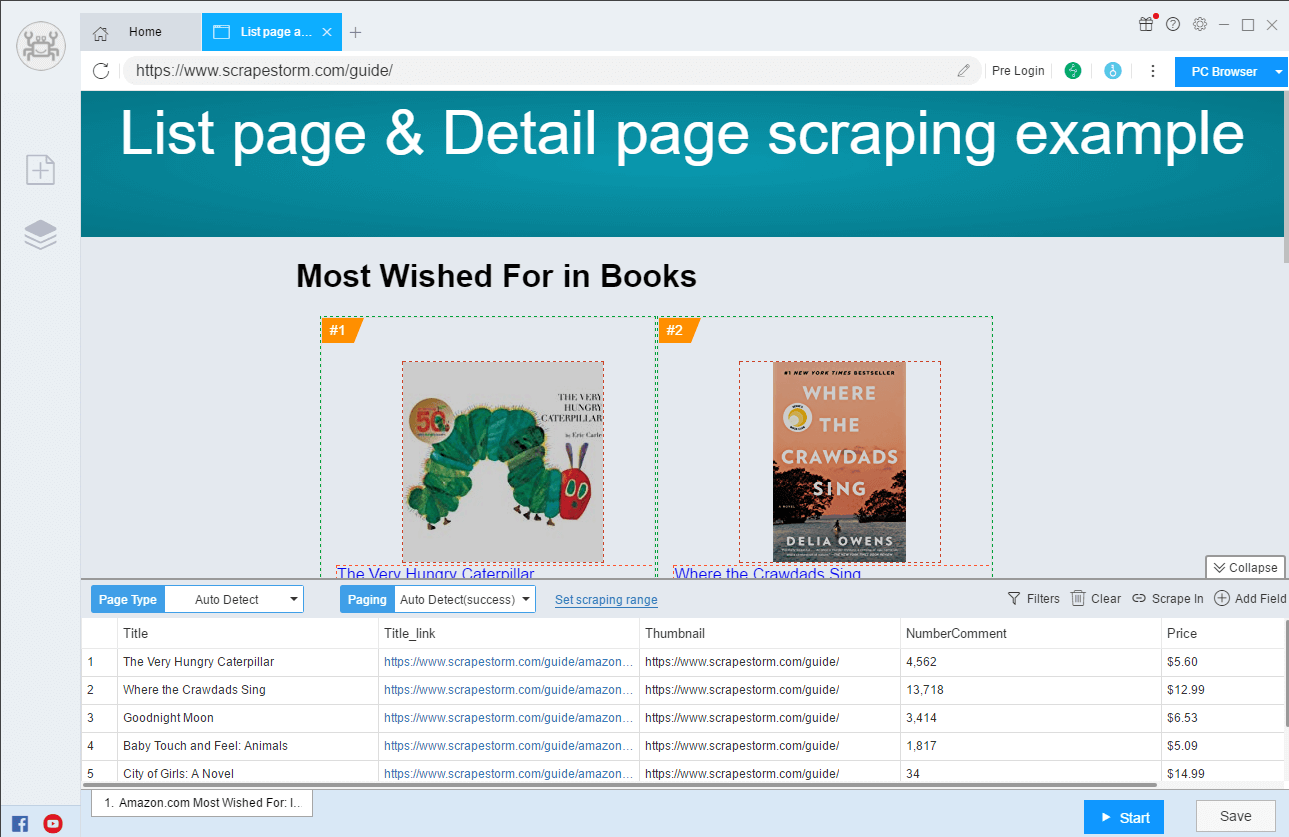

ScrapeStorm is an AI web scraping tool with a visual, no-code interface. It uses AI to identify lists, tables, and pagination buttons automatically.

You also get advanced features like an in-built scheduler, IP rotation, and automatic export.

ScrapeStorm can export data to local files in formats like CSV and HTML, or to the cloud and to destinations such as MySQL, MongoDB, WordPress, and Google Sheets. It is available on Windows, Mac, and Linux.

Users on Capterra appreciate its user-friendly interface and efficient data extraction capabilities. One user noted, "I like the user interface the most. In contrast to some other web scraping softwares that I've used in the past, ScrapeStorm is super intuitive and very easy to understand."

Users on G2 value ScrapeStorm for its powerful features and time-saving capabilities. However, some users have noted areas for improvement. A reviewer mentioned that when extracting data from certain websites, the software may not always recognize elements correctly, requiring manual re-selection.

Now, about pricing. There’s a free plan available, but it’s limited to 100 rows of export per day. If you have a higher requirement, you can choose between plans ranging from $49 to $199 per month.

Free trial? Free starter plan available

It’s important to comply with legal and ethical guidelines when using an AI website scraper. Here are some important things to consider before launching your next web scraping project:

Always consider scraping intervals to prevent overloading the website’s server and causing performance issues.

Scraping too frequently or making too many requests in a short period can overwhelm the server and potentially crash the site.

Additionally, websites often have anti-scraping measures in place, such as IP blocks or rate-limiting. By introducing appropriate scraping intervals, you can avoid being flagged as a bot and minimize the risk of getting blocked or banned.

Finally, proper scraping intervals help maintain data accuracy and consistency by ensuring that you’re collecting up-to-date information without duplication or missing data.
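If you write your own scripts, a simple way to build in scraping intervals is to pause between requests and back off when the server signals you’re going too fast (HTTP 429 “Too Many Requests” or 503 “Service Unavailable”). This is a sketch under stated assumptions: `polite_get`, its caller-supplied `fetch` interface, and the delay values are illustrative choices, not settings from any tool in this list.

```python
import random
import time


def polite_get(fetch, url, base_delay=2.0, max_retries=4, sleep=time.sleep):
    """Fetch url, pause afterwards, and back off exponentially on 429/503 responses.

    fetch(url) -> (status_code, body) is a caller-supplied function (a hypothetical
    interface), so this works with urllib, a third-party HTTP client, or a test fake.
    """
    for attempt in range(max_retries + 1):
        status, body = fetch(url)
        if status not in (429, 503):
            sleep(base_delay)  # fixed gap before the caller's next request
            return status, body
        if attempt < max_retries:
            # Wait 2s, 4s, 8s, ... (with base_delay=2) plus jitter so retries don't line up.
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.5))
    raise RuntimeError(f"Gave up on {url} after {max_retries} retries")


# Offline demo: the "server" rate-limits twice, then succeeds.
responses = iter([(429, ""), (429, ""), (200, "<html>ok</html>")])
waits = []
status, body = polite_get(lambda u: next(responses), "https://example.com/page",
                          sleep=waits.append)
print(status, len(waits))  # 200 3
```

A fuller version would also honor a `Retry-After` header when the server sends one.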

The structure and quality of the data you collect directly impact its usability and accuracy. Websites may present information in various formats, such as tables or lists, which need to be properly parsed and extracted in a structured way.

If the input data is not correctly identified or filtered, it can lead to incomplete, inconsistent, or irrelevant data being collected, making it difficult to analyze or use effectively.
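For example, an HTML table can be turned into structured rows keyed by its header cells. The sketch below uses Python’s standard `html.parser` and assumes a simple table: one header row, no `rowspan`/`colspan`, and one table per input. The sample HTML and its plans are hypothetical.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Converts a simple HTML table into a list of dicts keyed by the first row's cells."""

    def __init__(self):
        super().__init__()
        self.headers, self.rows = [], []
        self._row = None   # cells of the row being read, or None outside a row
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse internal whitespace so " $25 / mo " becomes "$25 / mo".
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if not self.headers:
                self.headers = self._row
            elif self._row:
                self.rows.append(dict(zip(self.headers, self._row)))
            self._row = None


html = """
<table>
  <tr><th>Plan</th><th>Price</th></tr>
  <tr><td>Starter</td><td>$10 / mo</td></tr>
  <tr><td>Team</td><td> $25 / mo </td></tr>
</table>
"""
parser = TableParser()
parser.feed(html)
print(parser.rows)
# [{'Plan': 'Starter', 'Price': '$10 / mo'}, {'Plan': 'Team', 'Price': '$25 / mo'}]
```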

Common export formats like CSV, JSON, or databases are often required to integrate your AI web scraping tools with data analysis tools, spreadsheets, or machine learning models.

Make sure to have a standardized format for data exports and ensure your web scraper can accommodate that.

Without a standardized data export format, you risk having raw data that’s difficult to process or analyze. By planning for export and data automation in advance, you can ensure that the collected data is organized, structured, and easy to access.

If you are scraping a large dataset, you need to ensure that your system has the capacity to manage and store the data, whether it’s in a database, cloud storage, or another format.

High data volume also requires more time and resources to process and store. If not managed properly, scraping large datasets can lead to data quality issues, such as incomplete records, duplicates, or inconsistencies.

By considering data volume in advance, you can plan for the appropriate infrastructure, implement strategies like data batching or pagination, and ensure a more efficient scraping process.
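Data batching can be as simple as grouping records into fixed-size chunks before writing them out, so memory use stays flat no matter how many pages you scrape. A minimal sketch, assuming records arrive as an iterable (for example, a generator yielding one row per scraped item); `save_batch` is a hypothetical stand-in for your database or file writer.

```python
from itertools import islice


def batched(records, size):
    """Yield lists of up to `size` records (like itertools.batched in 3.12+, but lists)."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk


def save_batch(chunk, store):
    """Hypothetical writer: replace with a bulk database insert or a file append."""
    store.extend(chunk)
    return len(chunk)


store = []
scraped = ({"id": i} for i in range(2500))  # stand-in for a large scrape
written = [save_batch(chunk, store) for chunk in batched(scraped, 1000)]
print(written)  # [1000, 1000, 500]
```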

Without clearly defining the scope, you risk collecting irrelevant or excessive data.

For example, scraping an entire website may result in massive volumes of data that include unnecessary information such as advertisements, unrelated content, or out-of-date entries.

Defining the scope allows you to focus on specific pages, sections, or data points, which improves both the accuracy and relevance of the data collected.

It also ensures that the scraping process is more efficient, reducing the time and resources needed to gather the data.

By setting clear parameters for what to scrape, you avoid overloading your system or the website's server, while also minimizing the chances of running into legal or ethical issues related to unauthorized data extraction.
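In code, a scope often comes down to a rule for which URLs the scraper may follow. Here is a minimal sketch with `urllib.parse`; the host name and path prefixes are hypothetical examples of “only product and category pages on one site.”

```python
from urllib.parse import urljoin, urlparse

ALLOWED_HOST = "shop.example.com"
ALLOWED_PREFIXES = ("/products/", "/category/")


def in_scope(url: str, base: str = "https://shop.example.com/") -> bool:
    """True if url (absolute or relative to base) stays on the allowed host and paths."""
    parts = urlparse(urljoin(base, url))
    return (
        parts.scheme in ("http", "https")
        and parts.hostname == ALLOWED_HOST
        and parts.path.startswith(ALLOWED_PREFIXES)
    )


links = [
    "/products/blue-widget",
    "https://shop.example.com/category/tools?page=2",
    "/blog/company-news",
    "https://ads.example.net/banner",
    "mailto:sales@example.com",
]
print([link for link in links if in_scope(link)])
# ['/products/blue-widget', 'https://shop.example.com/category/tools?page=2']
```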

Crawling authority refers to the legal and ethical consideration of how scraping may impact the website’s server load and user experience.

Websites often publish a robots.txt file or set specific terms of service that dictate how crawlers and scrapers may access their content.

By respecting these rules, you avoid violating the website’s policies, which could result in being blocked or facing legal consequences.

Ultimately, considering crawling authority helps to ensure you are collecting data in a way that aligns with fair and responsible data usage.
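If you’re scripting your own scraper, Python’s standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`. The sketch below parses an inline example file so it runs offline; against a live site you would call `set_url(...)` with the site’s robots.txt address and then `read()`. The rules and the user-agent name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (hypothetical rules).
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())
# For a live site: rp.set_url("https://example.com/robots.txt"); rp.read()

agent = "example-scraper"
for path in ("/products/widget", "/checkout/cart"):
    print(path, rp.can_fetch(agent, "https://example.com" + path))
print("crawl delay:", rp.crawl_delay(agent))
# /products/widget True
# /checkout/cart False
# crawl delay: 5
```

The crawl delay, when a site sets one, is a natural value to feed into the pause between your requests.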

Always assess the site’s complexity to ensure you choose an appropriate scraping tool, thereby avoiding incomplete or failed data extraction.

Some websites rely on static HTML for content, while others use dynamic elements like JavaScript or AJAX to load data.

For instance, scraping a dynamic website with a basic tool like Instant Data Scraper—which is designed for static HTML—won't work, as it doesn't render JavaScript.

More advanced tools like Selenium, Playwright, or Puppeteer are needed for such websites to simulate a real browser and load content before extraction.

Finally, understanding the site's complexity helps you plan for challenges like CAPTCHAs, infinite scrolling, or paginated content, which require special handling.
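Paginated content is commonly handled by following the “next page” link until there isn’t one. Here is a minimal sketch, assuming a `fetch(url)` function you supply and pages that mark the link with `rel="next"`; both are assumptions, and infinite-scroll pages would instead need a browser automation tool like Playwright to scroll and wait. A visited set and a page cap guard against loops.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a> or <link> tag whose rel includes "next"."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rel = (a.get("rel") or "").lower().split()
        if self.href is None and tag in ("a", "link") and "next" in rel and a.get("href"):
            self.href = a["href"]


def next_page_url(html, current_url):
    finder = NextLinkFinder()
    finder.feed(html)
    return urljoin(current_url, finder.href) if finder.href else None


def crawl_pages(fetch, start_url, max_pages=50):
    """Yield (url, html) for each page, following rel="next" links.

    fetch(url) -> html is supplied by the caller; the visited set stops loops
    and max_pages caps the crawl.
    """
    seen = set()
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        url = next_page_url(html, url)


# A fake three-page site so the example runs offline.
site = {
    "https://example.com/list?page=1": '<p>A</p><a rel="next" href="?page=2">Next</a>',
    "https://example.com/list?page=2": '<p>B</p><a href="?page=3" rel="next">Next</a>',
    "https://example.com/list?page=3": "<p>C</p>",
}
visited = [url for url, _ in crawl_pages(site.__getitem__, "https://example.com/list?page=1")]
print(visited)
```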

Web scrapers are more popular now than ever before. But it’s important not to get carried away by the hype. Instead, choose the scraper tool that best fits your desired purposes.

Bardeen is undoubtedly the best web scraper. Although it’s just for Chrome users, it has a wide range of integrations, and you can do a lot with the free plan. Here are some examples of how Bardeen can help you:

Download Bardeen today or book a demo to see for yourself how you can benefit from Bardeen.

Web scrapers can often be hard to wrap your head around. They can scrape data from the web, which brings to mind some obvious use cases, like product listings from Amazon, followers from Instagram, or job postings from LinkedIn. But, what else? Can they also be leveraged to save time in everyday life?

Most web scrapers are designed to scrape data and not much else. This is where Bardeen stands out from the rest: you can feed the scraped data into different automations and connect to third-party apps like Zillow and LinkedIn.

Here are some noteworthy pre-built automations.


Capturing property data from Zillow is time-consuming. This playbook extracts key details from any Zillow listing and adds them to a Google Sheet with a single shortcut.


Manually extracting property data from Redfin is time-consuming. This playbook scrapes search results from your active Redfin tab, giving you a structured list in seconds.


Researching products on ProductHunt is time-consuming. This playbook extracts a list of products from any ProductHunt topic page with a single click, giving you a handy data file for analysis.

As you might’ve picked up by now, browser-based scrapers are usually the best bet for most users, since they are easier to get started with and can be more powerful, especially when scraping data is only part of your workflow. If you know how to code, you can create your own, but most people don't.

You’ve already got the hang of Bardeen, but there are many other tools worth checking out for specific use cases.

Some people may think that scraping data from websites is less than legal, but scraping publicly available information is generally permitted in many jurisdictions. It isn’t a free pass, though: a site’s terms of service, copyright, and data privacy laws can still limit what you collect and how you use it.

If you know how to code, you can scrape data from a website by writing your own code and creating a scraping tool. Alternatively, you can just download Bardeen for free and use it as a Chrome extension to collect the data you want.

You are spoilt for choice when it comes to free web scraping tools. Pick the one that best suits your needs and intentions. For example, if you use Google Chrome as your browser, pick a browser-based extension like Bardeen. If you want to scrape data using different IPs, pick a cloud-based scraper tool.

AI web scraping tools use techniques like browser automation (headless browsers) and AJAX handling to simulate user interactions and capture data from dynamic websites. Some AI web scraping tools can utilize APIs to bypass the page rendering process.
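Here’s what that API shortcut can look like. Many dynamic pages load their data from a JSON endpoint you can spot in your browser’s developer tools (Network tab), and requesting it directly skips rendering. This is a sketch: the endpoint, its `{"items": [...]}` response shape, and the field names are hypothetical, and the demo parses an inline sample so it runs offline.

```python
import json
from urllib.request import Request, urlopen


def fetch_json(url: str) -> dict:
    """GET a JSON endpoint (hypothetical) and decode the response body."""
    req = Request(url, headers={"Accept": "application/json",
                                "User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=10) as resp:
        return json.load(resp)


def extract_listings(payload: dict) -> list[dict]:
    """Keep only the needed fields from each item in the assumed {"items": [...]} shape."""
    return [
        {"title": item.get("title"), "price": item.get("price")}
        for item in payload.get("items", [])
    ]


# Offline example of what such an endpoint might return:
sample = json.loads('{"items": [{"id": 7, "title": "Desk lamp", "price": 24.5, '
                    '"sku": "DL-7"}], "next_cursor": null}')
print(extract_listings(sample))  # [{'title': 'Desk lamp', 'price': 24.5}]
```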

To scrape ethically, make sure to review the website’s terms and conditions and respect robots.txt, which outlines web scraping rules for the site. Limit the frequency of scraping to avoid overloading servers and always make sure to use the data responsibly and avoid violating privacy or copyright laws.

AI tools use machine learning models and NLP to clean, categorize, and structure raw data, eliminating duplicates, correcting errors, and enriching data with additional context or metadata.
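ML-based cleaning is more than a short example can show, but the rule-based groundwork most pipelines start with (normalizing text, parsing prices, removing duplicates) looks roughly like this. It uses no machine learning or NLP; the field names, the US-style price format, and the “same email means same person” rule are all assumptions for illustration.

```python
import re


def clean_record(raw: dict) -> dict:
    """Normalize one scraped record with hypothetical 'name', 'email', 'price' fields."""
    name = " ".join((raw.get("name") or "").split())  # collapse stray whitespace
    email = (raw.get("email") or "").strip().lower()   # treated as case-insensitive here
    # Assumes US-style prices like "$1,299.00": drop thousands separators, keep the number.
    match = re.search(r"\d+(?:\.\d+)?", (raw.get("price") or "").replace(",", ""))
    return {"name": name, "email": email, "price": float(match.group()) if match else None}


def dedupe(records):
    """Keep the first record for each email (or, if there is no email, each name)."""
    seen, unique = set(), []
    for rec in records:
        key = rec["email"] or rec["name"].casefold()
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


raw_rows = [
    {"name": "  Jane   Doe ", "email": "Jane@Example.com", "price": "$1,299.00"},
    {"name": "Jane Doe", "email": "jane@example.com ", "price": "1299"},
    {"name": "John Roe", "email": "", "price": "N/A"},
]
cleaned = dedupe([clean_record(r) for r in raw_rows])
print(cleaned)
# [{'name': 'Jane Doe', 'email': 'jane@example.com', 'price': 1299.0}, {'name': 'John Roe', 'email': '', 'price': None}]
```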

SOC 2 Type II, GDPR and CASA Tier 2 and 3 certified — so you can automate with confidence at any scale.

Bardeen is an automation and workflow platform designed to help GTM teams eliminate manual tasks and streamline processes. It connects and integrates with your favorite tools, enabling you to automate repetitive workflows, manage data across systems, and enhance collaboration.

Bardeen acts as a bridge to enhance and automate workflows. It can reduce your reliance on tools focused on data entry and CRM updating, lead generation and outreach, reporting and analytics, and communication and follow-ups.

Bardeen is ideal for GTM teams across various roles including Sales (SDRs, AEs), Customer Success (CSMs), Revenue Operations, Sales Engineering, and Sales Leadership.

Bardeen integrates broadly with CRMs, communication platforms, lead generation tools, project and task management tools, and customer success tools. These integrations connect workflows and ensure data flows smoothly across systems.

Bardeen supports a wide variety of use cases across different teams, such as:

Sales: Automating lead discovery, enrichment and outreach sequences. Tracking account activity and nurturing target accounts.

Customer Success: Preparing for customer meetings, analyzing engagement metrics, and managing renewals.

Revenue Operations: Monitoring lead status, ensuring data accuracy, and generating detailed activity summaries.

Sales Leadership: Creating competitive analysis reports, monitoring pipeline health, and generating daily/weekly team performance summaries.