Use Python to scrape LinkedIn jobs with Beautiful Soup and Selenium.

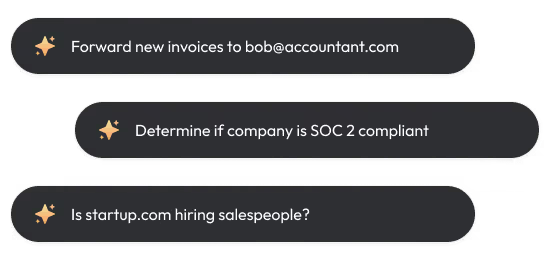

By the way, we're Bardeen. We build a free AI Agent for doing repetitive tasks.

Since you're learning about LinkedIn job scraping, you might like our LinkedIn Data Scraper. It automates job data scraping and saves you hours.

Scraping LinkedIn jobs is a valuable skill for job seekers and recruiters alike. In this comprehensive guide, you'll learn how to set up your scraping environment, understand LinkedIn's job search URL structure, extract job details from LinkedIn pages, and store the scraped data effectively.

We'll cover the manual, code-based method and also introduce AI agents like Bardeen that automate repetitive tasks. By the end, you'll have the tools to save hours of job searching and gain a competitive edge in your career. Ready to become a LinkedIn job scraping pro?

Introduction

By extracting job data from LinkedIn, you can gain insights into the job market, identify hiring trends, and find openings that match your skills and interests. In this guide, we'll walk you through the process of scraping LinkedIn jobs step by step.

You'll learn how to:

- Set up your environment for scraping

- Navigate LinkedIn's job search pages

- Extract job data using Python and popular libraries

- Store and analyze the scraped data

Whether you're a beginner or an experienced programmer, this guide will provide you with the knowledge and tools to successfully scrape LinkedIn jobs. Let's get started!

Set Up Your Environment for Successful LinkedIn Job Scraping

Scraping LinkedIn jobs requires the right tools and setup. Python, with libraries like Beautiful Soup and Selenium, is well suited to the task: Beautiful Soup makes parsing HTML straightforward, while Selenium drives a real browser so you can load content that JavaScript renders after the page opens. A reliable proxy service also matters, because sending many requests from a single IP address is a quick way to get blocked.

Here's how to set up your environment for scraping LinkedIn jobs:

1. Choose Python as your scraping language

Its simplicity, extensive libraries, and community support make it perfect for both beginners and experienced developers.

2. Install Beautiful Soup and Selenium

Beautiful Soup simplifies HTML parsing, while Selenium interacts with web pages and simulates user actions. Together, they form a powerful combination for scraping LinkedIn job listings.

3. Set up a reliable proxy service

Rotating IP addresses through a proxy service reduces the risk of getting blocked by LinkedIn's anti-scraping measures. It also allows for geotargeting to scrape job listings from specific locations.
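As a minimal sketch of this setup, assuming Selenium 4 with Chrome installed (`pip install beautifulsoup4 selenium`), the code below builds Chrome flags that route traffic through a proxy and rotates through a small pool. The proxy addresses are hypothetical placeholders for whatever your provider gives you, and the helper names `chrome_args` and `make_driver` are illustrative. Chrome's `--proxy-server` flag does not accept a username and password, so this assumes IP-whitelisted proxies.

```python
import itertools

# Hypothetical placeholder proxies; replace with your provider's endpoints.
# Chrome's --proxy-server flag takes no credentials, so these are assumed
# to be IP-whitelisted (unauthenticated) proxies.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# Round-robin iterator: each call to next() yields the following proxy.
PROXY_POOL = itertools.cycle(PROXIES)


def chrome_args(proxy: str, headless: bool = True) -> list[str]:
    """Build the Chrome command-line flags for one scraping session."""
    args = [f"--proxy-server={proxy}", "--window-size=1920,1080"]
    if headless:
        # "--headless=new" selects Chrome's newer headless mode (Chrome 109+).
        args.append("--headless=new")
    return args


def make_driver(proxy: str, headless: bool = True):
    """Start a Chrome WebDriver that sends its traffic through `proxy`."""
    # Imported here so chrome_args() stays usable without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_args(proxy, headless):
        options.add_argument(arg)
    # Selenium 4.6+ locates a matching chromedriver automatically.
    return webdriver.Chrome(options=options)
```

To use it, call `driver = make_driver(next(PROXY_POOL))`, load a page with `driver.get(url)`, pass `driver.page_source` to Beautiful Soup, and call `driver.quit()` when finished. Starting a fresh driver with the next proxy every few pages is one simple way to rotate IPs.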

By setting up the right environment with Python, Beautiful Soup, Selenium, and a proxy service, you'll be well-equipped to scrape LinkedIn jobs effectively. Consider using an automated LinkedIn scraper to enhance your data collection process.

Save time with Bardeen's LinkedIn Profile Data Playbook. Automate data scraping directly into Google Sheets and simplify your lead generation efforts.

Decode LinkedIn's Job Search URL to Scrape Targeted Job Listings

To scrape LinkedIn jobs efficiently, you need to understand the structure of LinkedIn's job search URL. The URL contains parameters that define search criteria like keywords, location, and experience level. By manipulating these parameters, you can refine your scraping results and target specific job listings, saving time and effort in your job search process.

Here's how to decode LinkedIn's job search URL:

- The base URL, "https://www.linkedin.com/jobs/search/", is followed by query parameters that specify the search criteria.

- Key parameters include "keywords" for job title or skills, "location" for geographical area, "f_E" for experience level, and "f_JT" for job type (for example, F for full-time and P for part-time).

- By modifying the values of these parameters, you can create custom job search URLs tailored to your scraping requirements. Note that "f_E" filters by seniority level rather than years of experience. For example, to scrape entry-level ("f_E=2") software engineering jobs in San Francisco, you would construct a URL like: "https://www.linkedin.com/jobs/search/?keywords=software%20engineer&location=San%20Francisco%2C%20California&f_E=2".
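A small helper can assemble these URLs from keyword arguments. This is a sketch, not an official API: LinkedIn does not document these parameters, so the `f_E` level numbers and `f_JT` letter codes mentioned in the comments are commonly observed values you should confirm in your browser's address bar. `build_search_url` is a hypothetical name.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://www.linkedin.com/jobs/search/"


def build_search_url(keywords, location=None, experience=None, job_type=None):
    """Return a LinkedIn job search URL for the given filters.

    experience: f_E value, e.g. "2" (commonly observed as Entry level).
    job_type:   f_JT value, e.g. "F" (full-time) or "P" (part-time).
    """
    params = {"keywords": keywords}
    if location:
        params["location"] = location
    if experience:
        params["f_E"] = experience
    if job_type:
        params["f_JT"] = job_type
    # quote_via=quote encodes spaces as %20 (the default would use "+").
    return f"{BASE_URL}?{urlencode(params, quote_via=quote)}"


url = build_search_url("software engineer", "San Francisco, California", experience="2")
print(url)
```

Run as shown, this reproduces the example URL above, including the `%2C` encoding of the comma in the location.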

LinkedIn's job search results are paginated, with a default of 25 listings per page. The "start" parameter sets the offset of the first result on the page, so "start=0" returns the first page, "start=25" the second, and so on. To scrape all available listings, increment "start" by 25 and make one request per page. Be aware that LinkedIn exposes only around 1,000 results per search, so split broad searches into narrower ones (for example, by location or experience level) if you need more.
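The pagination logic reduces to a loop over `start` offsets. Here is a minimal sketch, assuming 25 results per page and a cap of roughly 1,000 as described above; `paginated_urls` is a hypothetical helper. In practice you would also pause between requests (for example with `time.sleep`) rather than fetch every page back to back.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://www.linkedin.com/jobs/search/"
PAGE_SIZE = 25      # listings per page, per the description above
RESULT_CAP = 1000   # approximate number of results LinkedIn exposes per search


def paginated_urls(params, total_results=RESULT_CAP):
    """Yield one search URL per results page by stepping the `start` offset."""
    limit = min(total_results, RESULT_CAP)
    for start in range(0, limit, PAGE_SIZE):
        query = urlencode({**params, "start": start}, quote_via=quote)
        yield f"{BASE_URL}?{query}"


# Prints three URLs, ending in start=0, start=25 and start=50.
for page_url in paginated_urls({"keywords": "data analyst", "location": "Remote"}, total_results=60):
    print(page_url)
```

Capping `limit` at `RESULT_CAP` means a broad search never requests offsets LinkedIn will not serve.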

By understanding LinkedIn's job search URL structure and manipulating its parameters, you can scrape targeted job listings and maximize the efficiency of your LinkedIn job scraping efforts. This granular control over search criteria enables focused scraping, filtering out irrelevant listings and saving valuable time in your job search process.

Extracting Job Details from LinkedIn Pages: Unlocking Valuable Insights

Extracting job details from LinkedIn pages is at the heart of scraping LinkedIn jobs. Each job listing holds crucial information such as the job title, company name, location, job description, and application URL. By parsing the HTML structure of the job listing page using Python and Beautiful Soup, you can accurately extract these details for further analysis or integration with your job search process.

Beautiful Soup is a powerful Python library that simplifies HTML parsing. It creates a parse tree from the HTML source, allowing you to navigate and search the tree using various methods. To extract job details, follow these steps:

1. Identify the HTML elements and attributes that contain the job information you want. LinkedIn job listings typically follow a consistent structure, with each detail enclosed in specific HTML tags and classes.

2. Use Beautiful Soup's find() or find_all() methods with the appropriate tag names and class attributes to locate and extract the job title, company name, and location.

3. Target the <div> element whose class marks the job description, and extract its full text.

4. Locate the <a> tag that links to each listing and read its href attribute to obtain the application URL.
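The steps above can be sketched with Beautiful Soup's CSS-selector methods `select()` and `select_one()`, which do the same job as `find_all()` and `find()` with class filters. The class names below, such as `base-search-card__title` and `show-more-less-html__markup`, are assumptions based on markup LinkedIn's public job pages have used; LinkedIn changes its HTML without notice, so inspect the live page in your browser's developer tools and update the selectors first. The function names are illustrative.

```python
from bs4 import BeautifulSoup

# Assumed selectors; verify against the current LinkedIn markup.
CARD = "div.base-card"
TITLE = "h3.base-search-card__title"
COMPANY = "h4.base-search-card__subtitle"
LOCATION = "span.job-search-card__location"
LINK = "a.base-card__full-link"
DESCRIPTION = "div.show-more-less-html__markup"


def text_of(parent, selector, separator=""):
    """Return the stripped text of the first match, or None if absent."""
    element = parent.select_one(selector)
    return element.get_text(separator, strip=True) if element else None


def parse_job_cards(html):
    """Extract one dict per job card from a search-results page."""
    soup = BeautifulSoup(html, "html.parser")
    jobs = []
    for card in soup.select(CARD):
        link = card.select_one(LINK)
        jobs.append({
            "title": text_of(card, TITLE),
            "company": text_of(card, COMPANY),
            "location": text_of(card, LOCATION),
            # .get() returns None instead of raising if href is missing.
            "url": link.get("href") if link else None,
        })
    return jobs


def parse_description(html):
    """Extract the job description text from a job detail page."""
    soup = BeautifulSoup(html, "html.parser")
    # Join paragraphs with newlines so they don't run together.
    return text_of(soup, DESCRIPTION, separator="\n")
```

Returning `None` for missing fields, rather than raising, keeps one malformed card from stopping the whole page.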

By leveraging Beautiful Soup and understanding the HTML structure of LinkedIn job pages, you can effectively scrape job details and unlock valuable insights for your job market analysis or recruitment efforts. If you're interested in other ways to integrate LinkedIn with your tools, consider exploring available integrations. Extracting job details is a crucial step in the LinkedIn job scraping process, enabling you to gather comprehensive data for further analysis and utilization.

Save time on your job data collection by using Bardeen's LinkedIn profile enrichment in Google Sheets. Automate your workflow with ease!

Storing and Exporting Scraped Job Data: Unlocking Insights for Analysis

Storing and exporting scraped job data is a crucial step in the LinkedIn job scraping process. The extracted job details need to be organized and saved in a structured format for easy access, manipulation, and further analysis. Common storage options include CSV files, databases, and JSON, each suited to different use cases.

Organizing the scraped data in a structured format is essential. One approach is to create a dictionary or named tuple for each job listing, where the keys represent the attributes (job title, company, location) and the values store the corresponding extracted information. These dictionaries or tuples can then be stored in a list, serving as a container for all the scraped job listings.
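For example, a `NamedTuple` gives each listing fixed, named fields while staying lightweight, and its `_asdict()` method converts a record to a dictionary when a storage library expects one. The `JobListing` class and the sample values are made up for illustration.

```python
from typing import NamedTuple, Optional


class JobListing(NamedTuple):
    """One scraped job listing; optional fields default to None."""
    title: str
    company: str
    location: Optional[str] = None
    url: Optional[str] = None


# A plain list serves as the container for every scraped listing.
jobs = [
    JobListing("Data Engineer", "Acme", "Berlin", "https://www.linkedin.com/jobs/view/123"),
    JobListing("Backend Developer", "Globex", "Remote"),
]

# Convert to dictionaries when a writer or database driver expects mappings.
rows = [job._asdict() for job in jobs]
print(rows[1])
```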

CSV files are a popular choice for storing and sharing tabular data. Python's built-in csv module makes it easy to save the scraped job data to a CSV file. By creating a csv.DictWriter object for your output file, you can iterate over the list of job dictionaries and write each job's details as a row. This approach provides a convenient way to store and share the data for further analysis or integration with other tools.
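A minimal sketch with `csv.DictWriter`, which maps each dictionary's keys to columns. The file name, field list, and sample rows are illustrative assumptions.

```python
import csv

FIELDS = ["title", "company", "location", "url"]


def save_jobs_csv(jobs, path):
    """Write a list of job dicts to `path`, one row per listing."""
    # newline="" stops the csv module from writing blank lines on Windows.
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        for job in jobs:
            writer.writerow(job)  # None values are written as empty cells


jobs = [
    {"title": "Data Engineer", "company": "Acme", "location": "Berlin",
     "url": "https://www.linkedin.com/jobs/view/123"},
    {"title": "Backend Developer", "company": "Globex", "location": "Remote", "url": None},
]
save_jobs_csv(jobs, "linkedin_jobs.csv")
```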

Databases offer advanced querying and indexing capabilities, making them suitable for larger datasets and complex analysis. Python libraries like SQLAlchemy (for SQL databases such as SQLite or MySQL) or PyMongo (for MongoDB) can be used to store the scraped data. Alternatively, JSON is a lightweight data interchange format that is easy to read and write. Python's built-in json module can serialize the scraped data to JSON and save it to a file or send it over an API.
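The sketch below shows both options using only the standard library: `json` for a file export and `sqlite3` as a lightweight stand-in for the SQLAlchemy or PyMongo setups mentioned above (a swapped-in choice, to keep the example dependency-free). The table schema, file names, and sample rows are assumptions. Making `url` the primary key and using `INSERT OR IGNORE` means re-running the scraper skips listings already stored.

```python
import json
import sqlite3

jobs = [
    {"title": "Data Engineer", "company": "Acme", "location": "Berlin",
     "url": "https://www.linkedin.com/jobs/view/123"},
    {"title": "Backend Developer", "company": "Globex", "location": "Remote",
     "url": "https://www.linkedin.com/jobs/view/456"},
]


def save_jobs_json(jobs, path):
    """Serialize the job list to a pretty-printed JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-English characters readable.
        json.dump(jobs, f, indent=2, ensure_ascii=False)


def save_jobs_sqlite(jobs, db_path):
    """Insert jobs into a SQLite table, skipping URLs already stored."""
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "CREATE TABLE IF NOT EXISTS jobs ("
                "url TEXT PRIMARY KEY, title TEXT, company TEXT, location TEXT)"
            )
            # Named placeholders read values straight from each dict.
            conn.executemany(
                "INSERT OR IGNORE INTO jobs (url, title, company, location) "
                "VALUES (:url, :title, :company, :location)",
                jobs,
            )
    finally:
        conn.close()


save_jobs_json(jobs, "linkedin_jobs.json")
save_jobs_sqlite(jobs, "linkedin_jobs.db")
```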

By storing and exporting the scraped job data in a structured format, you unlock valuable insights for analysis and utilization. Whether it's importing the data into an applicant tracking system, conducting market research, or integrating with other tools such as LinkedIn to Notion, having the data organized and accessible is essential for making the most out of your LinkedIn job scraping efforts.

Conclusions

Knowing how to scrape LinkedIn jobs is crucial for gathering valuable job market data and insights efficiently. Enhance your data collection process with Bardeen. Use AI web scraping tools to save time and focus on more important tasks.

In this comprehensive guide, you've learned:

- Setting up the right scraping environment with Python, Beautiful Soup, Selenium, and a reliable proxy service

- Understanding LinkedIn's job search URL structure and constructing custom search URLs for targeted scraping

- Extracting job details from LinkedIn pages by parsing HTML with Beautiful Soup and retrieving key information

- Storing and exporting scraped job data in structured formats like CSV files, databases, or JSON for further analysis

By mastering the art of LinkedIn job scraping, you unlock a wealth of opportunities for market research, job analysis, and candidate sourcing. Don't miss out on these valuable insights - become an expert at scraping LinkedIn jobs today, or risk being left behind in the competitive job market!
