In this guide, you can learn how to scrape LinkedIn data using Python, Selenium, and Beautiful Soup. Python and its linked libraries can be used to extract data from LinkedIn. But if you’re scraping LinkedIn, there are easier solutions.

Bardeen is a free AI Agent for doing repetitive tasks.

The LinkedIn Data Scraper. It automates data extraction from LinkedIn profiles, companies, and posts, saving you time and effort.

LinkedIn is the world's largest professional networking platform. It holds an enormous amount of valuable data, including industry trends, job listings, company information, and contact details. However, finding the exact information you want can be tricky and time-consuming.

In this step-by-step guide, we'll walk you through the process of scraping data from LinkedIn using Python, Selenium, and Beautiful Soup. We'll discuss how to set up your Python environment, understand LinkedIn's dynamic site structure, automate browser interactions, extract data, manage pagination, and save scraped data.

By the end of this guide, you'll have everything you need to responsibly and effectively gather data from LinkedIn. Whether you're a data analyst or a developer, we’ll show you how to confidently scrape data from the platform using your own LinkedIn scraper.

Why Scrape LinkedIn Data with Python?

LinkedIn is a goldmine of valuable data for businesses, researchers, and developers. From job listings and company profiles to user information and industry insights, LinkedIn holds a wealth of information that can be used for various purposes. However, manually extracting this data often requires a lot of time and effort.

That's where web scraping tools come in. By using Python, along with powerful libraries like Selenium and Beautiful Soup, you can automate the process of extracting data from LinkedIn. This lets you access valuable information in a matter of minutes.

Python LinkedIn scraping can help with:

- Sales Prospecting

- Lead Generation

- Automating Repetitive Tasks

- Email Outreach

- Recruitment

- Market Research

- Identifying Industry Trends

There’s a long list of benefits to scraping data from LinkedIn - you just need to know how to use automations to increase the magnitude of these advantages!

No Need for Coding: Let Bardeen Do It for You

Before we dive into the ins and outs of the LinkedIn scraper Python-style, we just want to take a moment to explain a far simpler way of web scraping LinkedIn. Scraping data from LinkedIn using Python requires a deep understanding of web scraping techniques and handling complex libraries, so many people are put off by this.

Automating data extraction from LinkedIn is seamless with the Bardeen AI web scraper. This approach not only saves time but also enables you to gather LinkedIn data effectively with minimal coding skills.

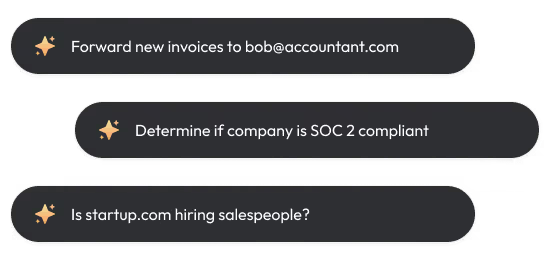

Here are examples of how Bardeen automates LinkedIn data extraction:

- Get Data from a Linkedin Profile Search: Perfect for market research or lead generation, this playbook automates the extraction of LinkedIn profile data based on your search criteria.

- Get Data from the Linkedin Job Page: Ideal for job market analysis or job search automation, this playbook extracts detailed job listing information from LinkedIn.

- Get Data from the Currently Opened Linkedin Post: Enhance your content strategy or competitor analysis by extracting data from LinkedIn posts efficiently.

Streamline your LinkedIn data-gathering process by downloading the Bardeen app today! The tutorial video below shows you how to scrape LinkedIn using Bardeen:

How to Scrape LinkedIn Data with Python, Selenium & Beautiful Soup

1. Set Up Your Python Environment for Scraping

Before diving into web scraping with Python, it's essential to set up your environment with the necessary tools and libraries. Here's a step-by-step guide to get you started:

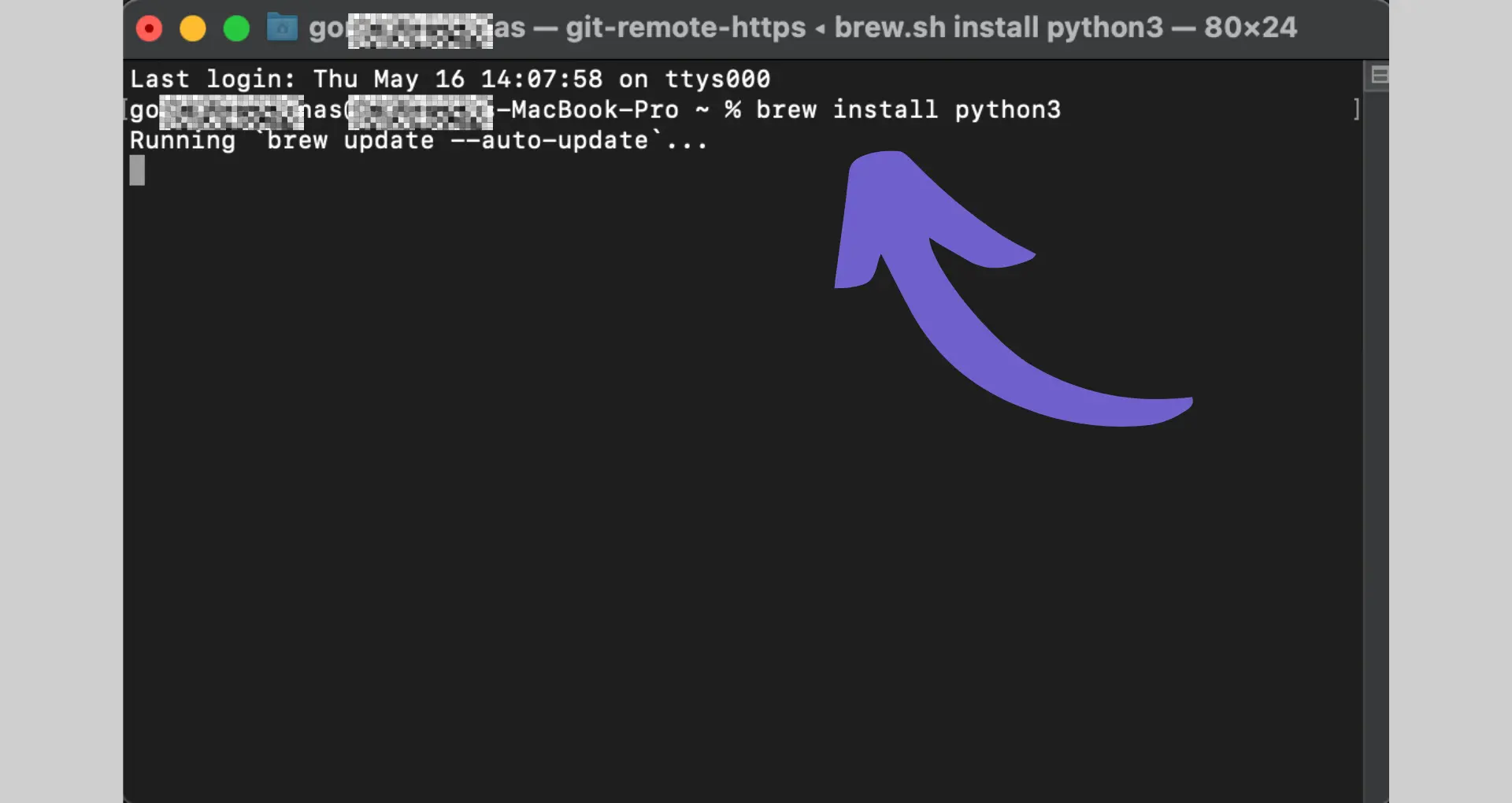

1. Install Python

Download and install the latest version of Python from the official website (python.org). Make sure to check the option to add Python to your system's PATH during the installation process.

2. Set Up a Virtual Environment (Optional but Recommended)

Create a virtual environment to keep your project's dependencies isolated. Open your terminal and run the following commands:

- python -m venv myenv

- source myenv/bin/activate

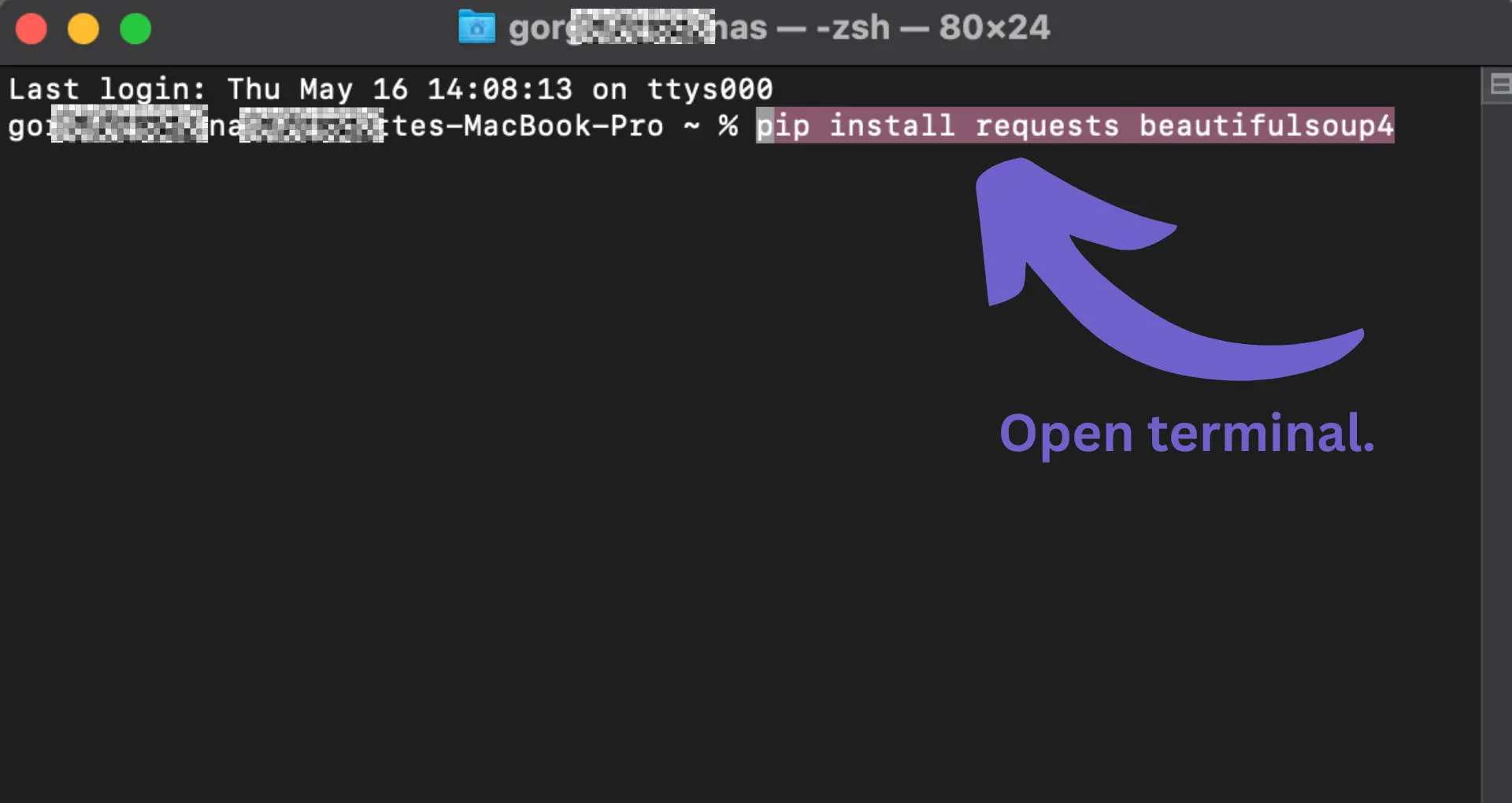

3. Install Required Libraries

With your virtual environment activated, install the essential libraries for web scraping:

- pip install requests beautifulsoup4 lxml selenium pandas

Here are some brief details on the terms we’ve used so far:

- Requests: A simple and elegant library for making HTTP requests.

- Beautiful Soup: A powerful library for parsing HTML and XML documents.

- lxml: A fast and feature-rich parser for processing XML and HTML.

- Selenium: A tool for automating web browsers, useful for scraping dynamic websites.

- Pandas: A data manipulation library that provides data structures for efficiently storing and analyzing scraped data.

By setting up a virtual environment, you can ensure that your project's dependencies are isolated from other Python projects on your system, avoiding potential conflicts.

With Python and the necessary libraries installed, you're now ready to start your web scraping journey using Python, Selenium, and Beautiful Soup.

2. Understand LinkedIn's Dynamic HTML Site Structure

To effectively scrape data from LinkedIn, it's crucial to understand the site's dynamic structure and the HTML elements on the pages. LinkedIn heavily relies on JavaScript to render content, making it challenging for traditional web scraping techniques. Here's how you can navigate LinkedIn's structure:

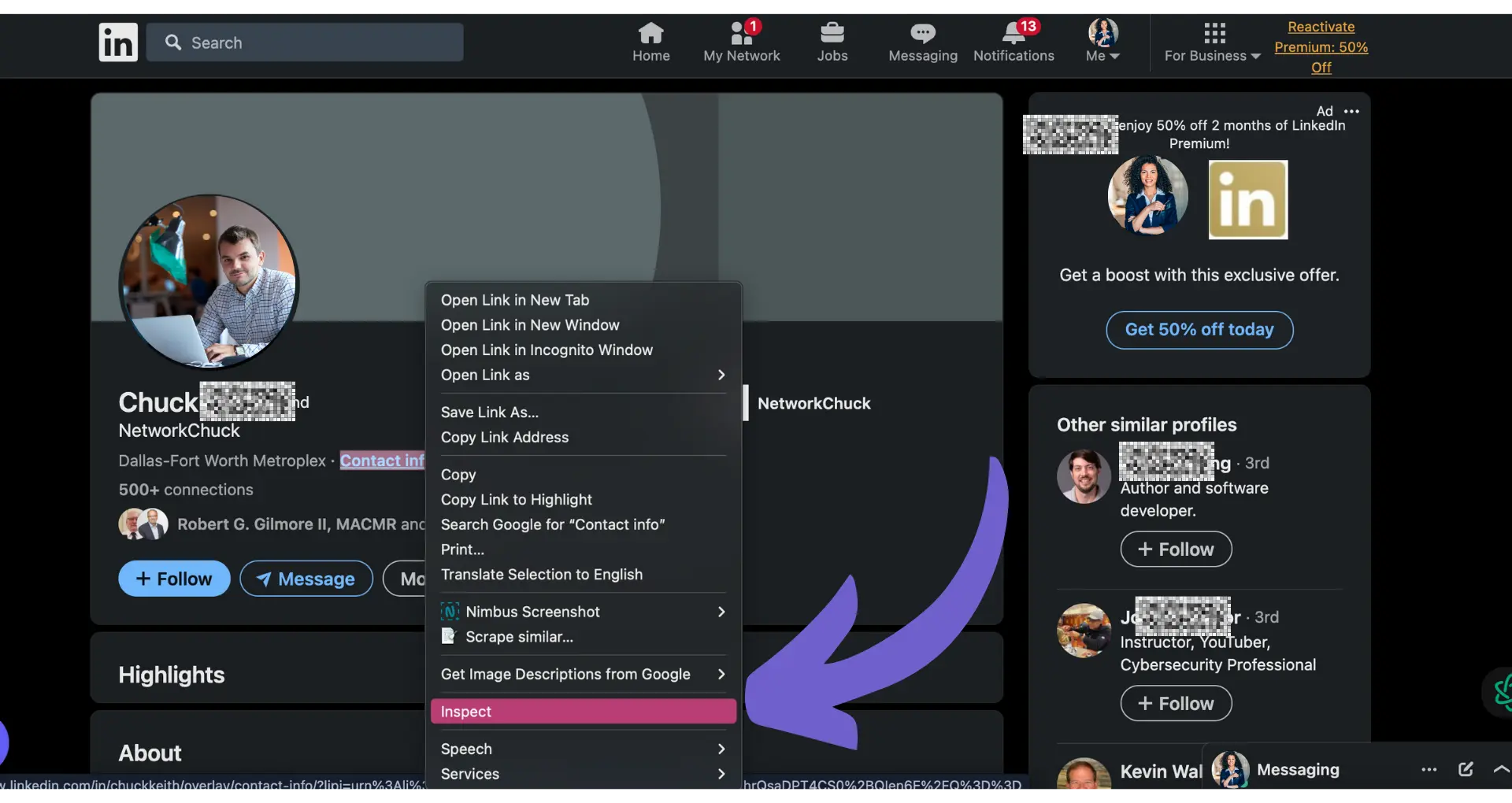

1. Open a LinkedIn profile in your web browser.

2. Open Chrome DevTools to examine LinkedIn's HTML structure. To do this, right-click on an element you want to scrape (e.g., name, title) and select "Inspect".

3. Identify key data points like job titles, company names, and other relevant information you want to extract.

4. Take note of the HTML tags, classes, and IDs that uniquely identify the desired elements. You'll use these to locate and extract the data with Beautiful Soup later.

5. You’ll notice that some content is loaded dynamically through AJAX calls and JavaScript rendering, which means the data might not be immediately available in the initial HTML source. For example, you might find that the name is wrapped in a <h1> tag with a specific class, while the title is in a <div> with its own unique class or ID.

To locate the desired data, you can:

- Use the "Network" tab in Chrome DevTools to monitor AJAX requests and identify the endpoints that return the required data.

- Analyze the JavaScript code responsible for rendering the content to understand how the data is being populated.

Examining LinkedIn's dynamic structure can help you determine the optimal approach for extracting data using Python or other tools like Selenium and Beautiful Soup. Selenium will help you interact with the dynamic elements, while Beautiful Soup will parse the rendered HTML to extract the desired information.Understanding the HTML structure also helps with precision when it comes to data extraction. It allows you to write targeted Beautiful Soup queries to fetch only the desired information from the LinkedIn profiles you're scraping.

3. Automate Browser Interactions with Selenium

Selenium is a powerful tool for automating browser interactions, making it ideal for scraping dynamic websites like LinkedIn. Here's a step-by-step guide on setting up Selenium with WebDriver to interact with LinkedIn pages:

1. Install Selenium: Use “pip install selenium” to install the Selenium library.

2. Download WebDriver: Selenium requires a WebDriver to communicate with your chosen browser. For example, if you’re using Google Chrome, download the Chrome web driver, ChromeDriver.

3. Set up Selenium: Import the necessary modules and initialize the WebDriver:

“from selenium import webdriver”“driver=webdriver.Chrome('/path/to/chromedriver')”

4. Navigate to LinkedIn: Use the “driver.get()” method to navigate to the LinkedIn login page: “driver.get('https://www.linkedin.com/login')”

5. Find the LinkedIn Login Page: Find the email and password input fields using their HTML IDs or by using “XPath:username = driver.find_element(By.XPATH, '//input[@name='session_key']")”

6. Log In to LinkedIn: Use the “send_keys()” method to enter your credentials: “email_field=driver.find_element_by_id('username')” “email_field.send_keys('your_email@example.com')” “password_field=driver.find_element_by_id('password')” “password_field.send_keys('your_password') password_field.submit()”

7. Click the Login Button: ”login_button = driver.find_element(By.XPATH, "//button[@type='submit']")”

“login_button.click()”Remember to handle any potential roadblocks, such as CAPTCHA or two-factor authentication, by incorporating appropriate waiting times or user input prompts.The steps above will automate logging into your LinkedIn account using Selenium and Python, helping you complete repetitive tasks. You can even send messages on LinkedIn via Selenium! Let’s explore how to navigate LinkedIn using Selenium in the next section.

5. Extract LinkedIn Data with Beautiful Soup

Beautiful Soup is a powerful Python library for parsing HTML and XML documents. It allows you to extract specific data from web pages by navigating the document tree and searching for elements based on their tags, attributes, or text content. Here's how you can use Beautiful Soup to parse the HTML obtained from Selenium and extract structured data from a LinkedIn job search:

1. Install Beautiful Soup: pip install beautifulsoup4

2. Import the Library in Your Python Script: from bs4 import BeautifulSoup

3. Create a Beautiful Soup Object: Pass the HTML content and the parser type: soup = BeautifulSoup(html_content, 'html.parser')

4. Locate Specific Elements: Use Beautiful Soup's methods to do this:

- find(): Finds the first occurrence of a tag with specified attributes.

- find_all(): Finds all occurrences of a tag with specified attributes.

- CSS Selectors: Use the “select()” method with a CSS selector to locate elements.

5. Extract Data: Retrieve the scraped data you need from the located elements:

- get_text(): Retrieves the text content of an element.

- get(): Retrieves the value of a specified attribute.

- Access tag attributes directly using square bracket notation.

Here's an example of how to extract job post details from the HTML:

soup = BeautifulSoup(html_content, 'html.parser')

job_listings = soup.find_all('div', class_='job-listing')

for job in job_listings:

title = job.find('h2', class_='job-title').get_text()

company = job.find('span', class_='company').get_text()

location = job.find('span', class_='location').get_text()

print(f"Title: {title}")

print(f"Company: {company}")

print(f"Location: {location}")

print("---")

In this example, we locate all the “div” elements with the class “job-listing” using “find_all()”. Then, for each job listing, we find the specific elements containing the job title, company, and location using “find()” with the appropriate CSS class names. Finally, we extract the text content of those elements using “get_text()”.

By leveraging Beautiful Soup's methods and CSS selectors, you can precisely pinpoint the data you need within the HTML structure and extract it to Excel, Google Sheets, and other applications.

You can further explore the HTML structure using browser developer tools to identify the specific elements and extract web data without coding.

Remember to handle exceptions and errors gracefully, as the HTML structure of LinkedIn pages may change over time. It's also important to respect LinkedIn's terms of service and avoid excessive or aggressive scraping that could result in your IP being blocked.

6. Manage Data Pagination and Scrape Multiple Pages

Pagination is a common challenge when you web scrape LinkedIn, especially when dealing with large datasets that span across multiple pages. LinkedIn's job search results page often employs pagination or infinite scrolling to load additional job listings or an extra job title as the user scrolls down.

To effectively scrape data from all available pages, you need to handle pagination programmatically. Here's how you can automate pagination handling and data collection across multiple pages:

1. Identify the Pagination Mechanism:

- Check if the page uses numbered pagination links or a "Load More" button.

- Inspect the URL pattern for different pages to see if it follows a consistent format (e.g., https://www.linkedin.com/jobs/search/?start=25).

2. Implement Pagination Handling:

If using numbered pagination links:

- Extract the total number of pages or the last page number.

- Iterate through the page range and construct the URL for each page.

- Send a request to each page URL and parse the response.

If using a "Load More" button or infinite scrolling:

- Identify the API endpoint that returns the additional job listings.

- Send requests to the API endpoint with increasing offset or page parameters until no more results are returned.

- Parse the JSON or HTML response to extract the job data.

3. Scrape and Store Data from Each Page:

- Use Beautiful Soup or a similar library to parse the HTML of each page.

- Extract the relevant job information, such as title, company, location, and description.

- Store the scraped data in a structured format (e.g., CSV, JSON) or database.

Here's a code snippet that demonstrates pagination handling using numbered pagination links:

import requests

from bs4 import BeautifulSoup

base_url = 'https://www.linkedin.com/jobs/search/?start={}'

start = 0

page_size = 25

while True:

url = base_url.format(start)

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

# Extract job data from the current page

job_listings = soup.find_all('div', class_='job-listing')

for job in job_listings:

# Extract and store job information

# ...

# Check if there are more pages

if not soup.find('a', class_='next-page'):

break

start += page_size

In this example, the script starts from the first page and iteratively sends requests to the subsequent pages by updating the “start” parameter in the URL. It extracts the job listings from each page using Beautiful Soup and stops when there are no more pages to scrape.

By automating pagination handling, you can ensure that your Python LinkedIn scraper and LinkedIn Python API capture data from all available job listings, providing a comprehensive dataset for further analysis and utilization.

Of course, you can always use Bardeen to make web scraping LinkedIn easier. You can use pre-built automations to extract LinkedIn company and job data to boost your efficiency without the need for elaborate coding or installations.

7. Save and Export Scraped LinkedIn Data

Once you have successfully scraped data from LinkedIn, the next step is to store and utilize the collected information effectively. There are several methods to save scraped data, depending on your specific requirements and the volume of data. Here are a few common approaches:

1. CSV Files:

- Write the scraped data to a CSV (Comma-Separated Values) file using Python's built-in CSV module.

- Each row in the CSV file represents a single record, with columns corresponding to different data fields (e.g., job title, company, location).

- CSV files are simple and portable, and they can be easily imported into spreadsheet applications or databases for further analysis.

2. Databases:

- Store the scraped data in a database management system like MySQL, PostgreSQL, or MongoDB.

- Use Python libraries such as SQLAlchemy or PyMongo to interact with the database and insert the scraped records.

- Databases provide structured storage, efficient querying, and the ability to handle large datasets.

3. JSON Files:

- If the scraped data has a hierarchical or nested structure, consider saving it in JSON (JavaScript Object Notation) format.

- Use Python's JSON module to serialize the data into JSON strings and write them to a file.

- JSON files are lightweight, human-readable, and commonly used for data interchange between systems.

Is It Legal to Web Scrape LinkedIn with Python?

When saving and utilizing scraped data from LinkedIn, it's crucial to consider the ethical and legal implications. LinkedIn's terms of service prohibit the use of scrapers or automated tools to extract data from their platform without explicit permission. Violating these terms can result in account suspension or legal consequences.

To ensure compliance with LinkedIn's policies, consider the following guidelines:

- Review and adhere to LinkedIn's terms of service and privacy policy.

- Obtain necessary permissions or licenses before scraping data from LinkedIn.

- Use the scraped data responsibly and in accordance with applicable data protection laws, such as GDPR or CCPA.

- Anonymize or aggregate sensitive personal information to protect user privacy.

- Avoid excessive or aggressive scraping that may strain LinkedIn's servers or disrupt the user experience.

- Use LinkedIn's official APIs whenever possible to access data in a sanctioned manner, such as the LinkedIn Sales Navigator API for Python.

- Limit your scraping activity to a reasonable volume, such as scraping no more than 50 profiles per day.

- Implement proxy servers or rotate IP addresses to distribute your requests and avoid detection.

- Regularly monitor LinkedIn's terms of service and adjust your scraping practices accordingly.

By following ethical practices and respecting LinkedIn's terms of service, you can leverage the scraped data for various purposes, such as market research, talent acquisition, or competitor analysis, while mitigating legal risks.

Remember, the scraped data is only as valuable as the insights you derive from it. Analyze the collected information, identify patterns or trends, and use data visualization techniques to communicate your findings effectively. Combining the scraped LinkedIn data with other relevant datasets can provide a more comprehensive understanding of your target market or industry.

Python vs Bardeen: Which LinkedIn Data Scraper is Best?

LinkedIn data scraping is an effective and cost-effective way to extract valuable data for companies, job listings, industry updates, and candidates. Rather than manually trawling through each page yourself, it’s far better to use a scraper to get the data for you.

While the Python LinkedIn scraper is robust and reliable, it requires a lot of coding knowledge and some extra software (Selenium and Beautiful Soup) to make it worthwhile.

Instead, we recommend Bardeen. It’s a free Chrome extension that requires absolutely no coding knowledge to work. Simply add prompts and create workflows using natural language, allowing you to build complex processes in a matter of minutes. Alternatively, you can just use our pre-built automations to extract LinkedIn data without even building the workflows.

Get started with Bardeen now to explore the wide variety of workflow automations you can use to scrape LinkedIn data. Download Bardeen today!

Automate LinkedIn Data Extraction with Bardeen

While scraping data from LinkedIn using Python requires a deep understanding of web scraping techniques and handling complex libraries, there's a simpler way. Automating data extraction from LinkedIn can be seamlessly achieved using Bardeen. This approach not only saves time but also enables even those with minimal coding skills to gather LinkedIn data effectively.

Here are examples of how Bardeen automates LinkedIn data extraction:

- Get data from a LinkedIn profile search: Perfect for market research or lead generation, this playbook automates the extraction of LinkedIn profile data based on your search criteria.

- Get data from the LinkedIn job page: Ideal for job market analysis or job search automation, this playbook extracts detailed job listing information from LinkedIn.

- Get data from the currently opened LinkedIn post: Enhance your content strategy or competitor analysis by extracting data from LinkedIn posts efficiently.

Streamline your LinkedIn data gathering process by downloading the Bardeen app at Bardeen.ai/download.

.svg)

.svg)

.svg)