Crunchbase holds a treasure trove of business data, but manually extracting it can be a time-consuming nightmare. What if you could scrape and analyze Crunchbase data at scale, saving countless hours? In this step-by-step guide, we'll show you how to automate Crunchbase scraping using Python libraries and AI tools like Bardeen. Get ready to unlock valuable insights on companies, investors, and industry trends!

Understand the Breadth of Crunchbase Data

Before diving into scraping Crunchbase, it's crucial to grasp the extensive data available on the platform:

- Company profiles: funding rounds, acquisitions, leadership, and more

- Investor profiles: investment focus, portfolio companies, contact info

- Industry trends and news

Familiarizing yourself with the data landscape helps plan your scraping strategy effectively.

Explore Company Profiles

Crunchbase company profiles provide rich details beyond basic facts:

- Financials outline funding amounts, dates, and lead investors per round

- Acquisition data shows purchase prices and acquiring companies

- People section lists current and past executives and board members

Click through several profiles to understand the information depth when scraping Crunchbase.

Mine Investor Profile Data

For startups seeking funding, investor profiles on Crunchbase offer valuable insights:

- Preferred industry sectors and investment stages

- Typical check sizes and portfolio companies

- Direct contact information for many investors

Prioritize scraping these key data points from Crunchbase investor profiles.

Examine Industry Trends and News

Crunchbase also compiles broader industry data and startup news:

- Hubs analyze sector or location-specific company patterns

- Discover section highlights funding, leadership changes, product launches

Consider how these additional datasets could complement your primary company and investor information when scraping Crunchbase.

Understand Crunchbase's Site Structure for Targeted Scraping

To effectively scrape data from Crunchbase, it's essential to analyze the site's navigation and structure. This allows you to identify key pages containing company and investor information, as well as uncover URL patterns and API calls for efficient data access.

1. Inspect Navigation to Find Profile URLs

Start by examining Crunchbase's main navigation menu and sitemaps to locate lists or directories of company and investor profile pages. These act as starting points for your scraper to systematically extract URLs for individual profiles to target.

For example, you might find a "Companies" or "Investors" section listing profiles by category or location. Collecting these URLs enables you to build a comprehensive dataset to scrape.
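As a rough sketch of collecting profile URLs from a sitemap, the snippet below parses a standard sitemap XML document with Python's standard library and keeps only URLs that contain a given path prefix. The `example.com` URLs, the `/organization/` prefix, and the `profile_urls` helper are assumptions made for illustration; check the real sitemap to see which paths apply.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def profile_urls(sitemap_xml, path_prefix):
    """Return the <loc> URLs in a sitemap that contain `path_prefix`."""
    root = ET.fromstring(sitemap_xml)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    urls = [loc.text.strip() for loc in locs if loc.text]
    return [url for url in urls if path_prefix in url]


# Hypothetical sitemap content, used only for illustration.
sample_sitemap = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/organization/acme</loc></url>
  <url><loc>https://example.com/about</loc></url>
  <url><loc>https://example.com/organization/globex</loc></url>
</urlset>
""".strip()

company_urls = profile_urls(sample_sitemap, "/organization/")
```

In a real run, the XML string would come from an HTTP response rather than an inline sample.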

2. Identify Paginated Result URL Patterns

Next, look for search result pages or list views that spread data across multiple pages. Inspect the URL structure as you navigate through these paginated results to spot patterns.

Common URL parameters like "page=1" or "offset=50" indicate paginated content. By programmatically generating sequential URLs, you can ensure your scraper captures all available data without missing pages.
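One way to generate those sequential URLs is sketched below. It assumes the listing uses a `page` query parameter; the base URL and the `paginated_urls` helper are hypothetical, so adjust the parameter name to whatever pattern you actually observe.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def paginated_urls(base_url, total_pages, page_param="page", start=1):
    """Yield one URL per page by setting `page_param` on `base_url`."""
    scheme, netloc, path, query, fragment = urlsplit(base_url)
    params = dict(parse_qsl(query))  # keep any existing query parameters
    for page in range(start, start + total_pages):
        params[page_param] = str(page)
        yield urlunsplit((scheme, netloc, path, urlencode(params), fragment))


# Hypothetical listing URL, used only for illustration.
urls = list(paginated_urls("https://example.com/companies?sort=name", total_pages=3))
```

For offset-based pagination, the same idea applies with `page_param="offset"` and a loop that steps by the page size.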

3. Locate Data-Rich AJAX and API Calls

Modern websites like Crunchbase often load data dynamically through AJAX requests or API calls, without refreshing the entire page. Inspecting the browser's Network tab can reveal these requests, which may provide more structured data than the HTML page itself.

Identifying and calling these APIs directly allows you to access the underlying JSON or XML data, reducing parsing complexity compared to scraping raw HTML.
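To illustrate why JSON is easier to work with than raw HTML, here is a minimal sketch that pulls a few fields out of a JSON payload. The payload shape (`properties`, `funding_rounds`, `round_type`) is invented for this example and is not Crunchbase's actual response format; inspect the real responses in the Network tab before relying on any field names.

```python
import json


def extract_company(payload):
    """Pick a few fields out of a JSON payload (hypothetical shape)."""
    data = json.loads(payload)
    props = data.get("properties", {})
    return {
        "name": props.get("name"),
        "total_funding_usd": props.get("total_funding_usd"),
        "rounds": [r.get("round_type") for r in data.get("funding_rounds", [])],
    }


# Made-up sample response, used only for illustration.
sample = json.dumps({
    "properties": {"name": "Acme", "total_funding_usd": 1500000},
    "funding_rounds": [{"round_type": "seed"}, {"round_type": "series_a"}],
})
company = extract_company(sample)
```

Using `.get()` with defaults keeps the function from crashing when a field is missing, which is common across profiles of varying completeness.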

Analyzing Crunchbase's site structure lays the groundwork for an efficient, targeted scraping approach. Armed with key URLs and data endpoints, you can proceed to extracting the desired company and investor details at scale.

In the upcoming section, we'll walk through techniques to scrape data efficiently using Python libraries and best practices. Get ready to supercharge your data extraction pipeline!

Techniques for Extracting Crunchbase Data at Scale

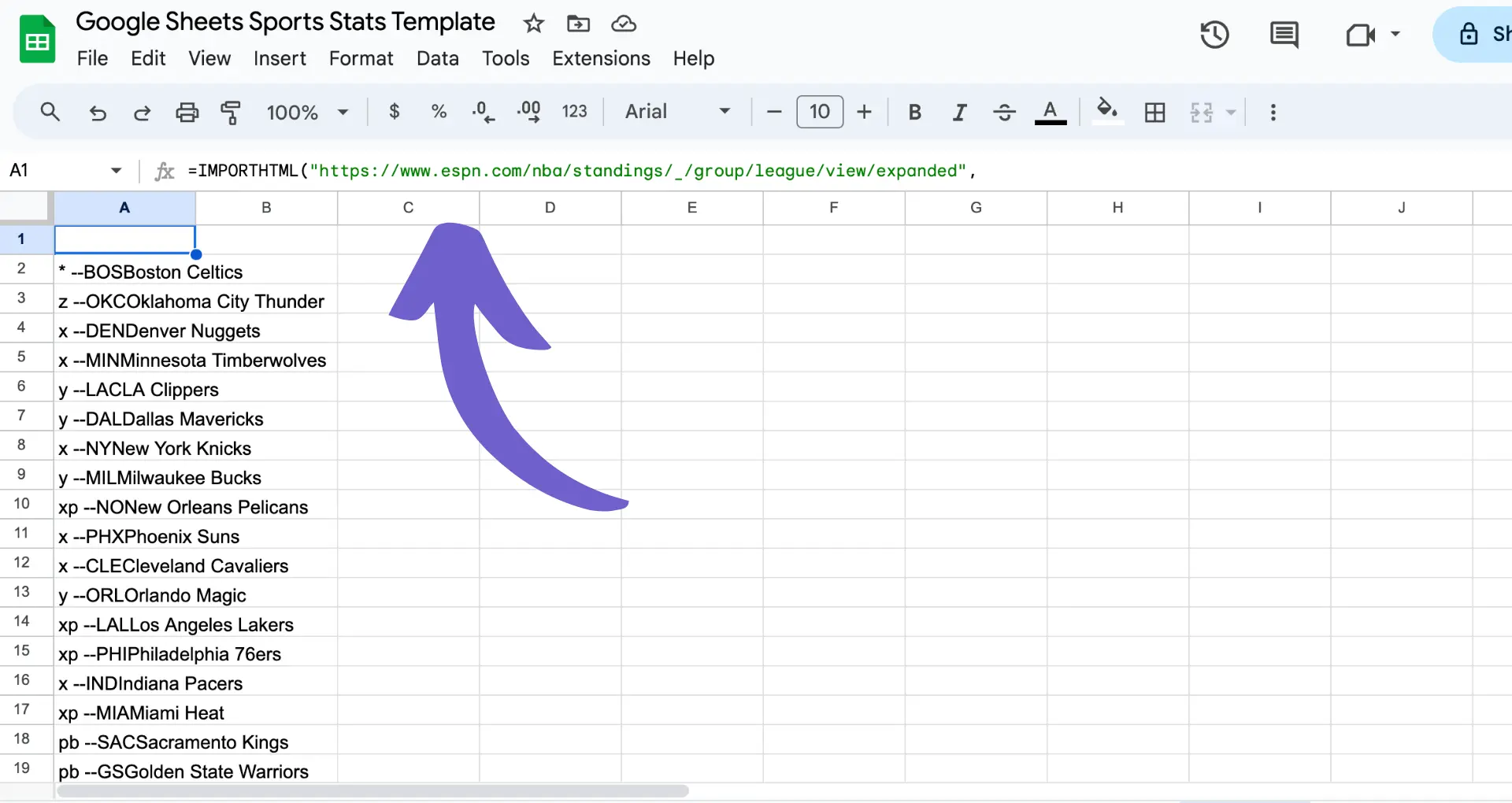

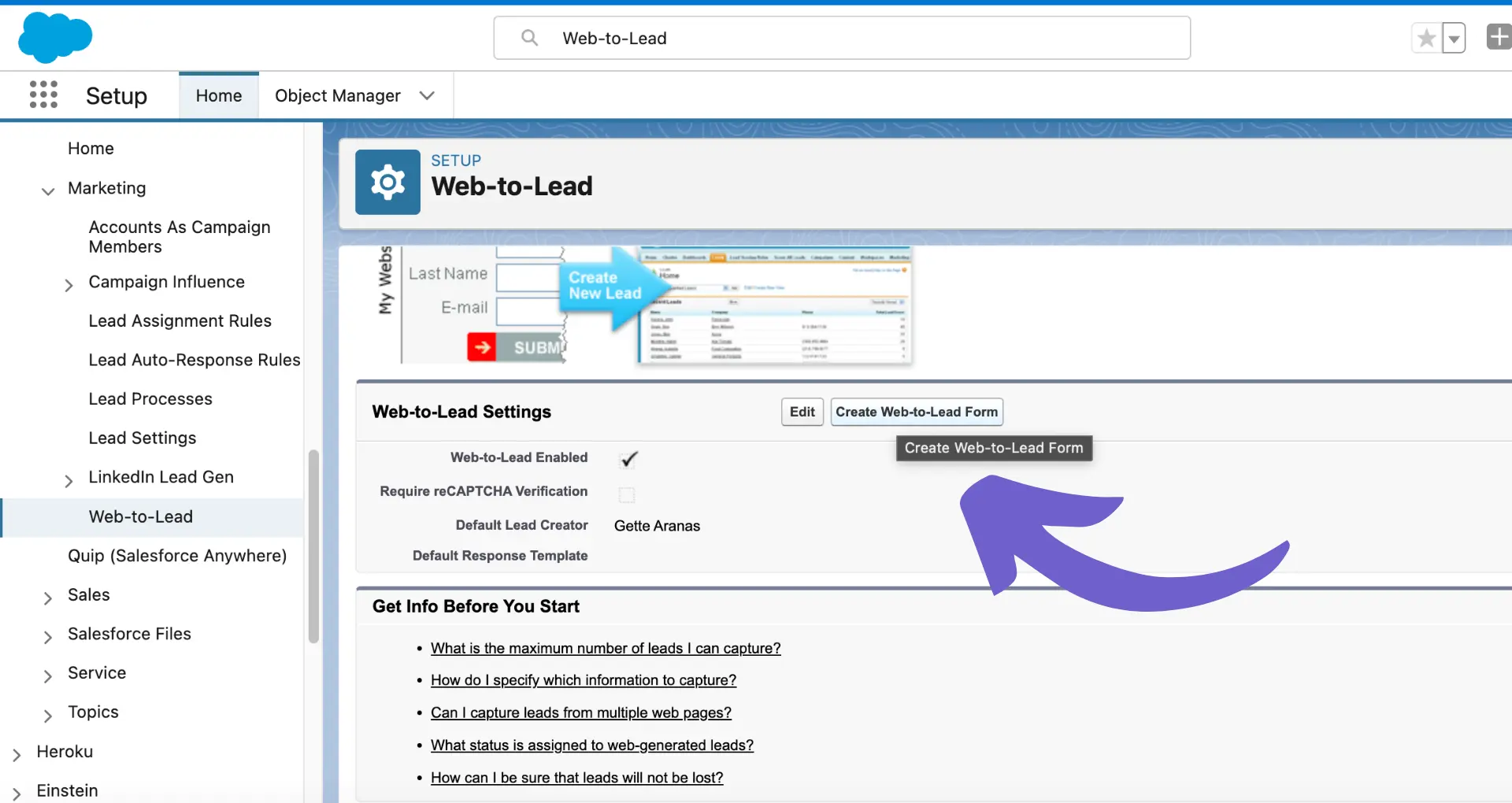

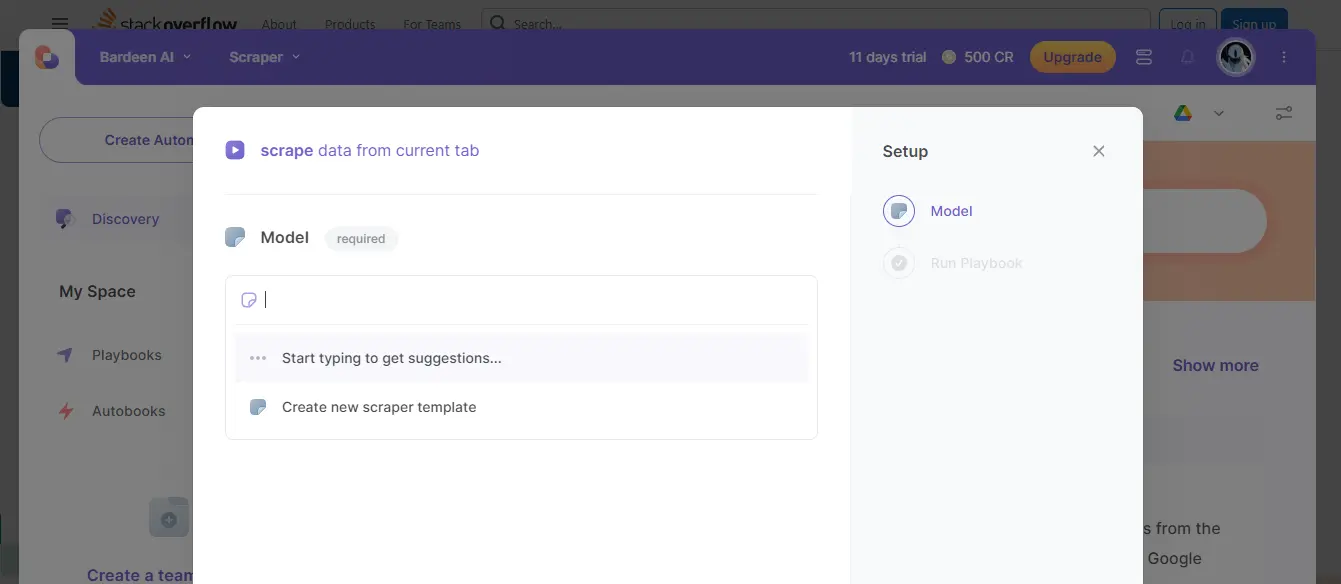

To efficiently scrape large amounts of data from Crunchbase, it's important to set up a robust scraping environment and workflow. Python libraries like Scrapy and Beautiful Soup provide powerful tools for extracting structured data. Configuring your scraper settings, parsing HTML responses, and storing the scraped data are key steps in the process. Consider using web scraper extensions to enhance your data extraction capabilities.

1. Set Up a Python Scraping Environment

Start by installing Python and setting up a virtual environment for your scraping project. Then, install the necessary libraries like Scrapy or Beautiful Soup using pip.

For example, to install Scrapy, run `pip install scrapy`. Scrapy provides a complete framework for writing web spiders, handling requests, and extracting data using CSS or XPath selectors.

2. Configure Scraper Settings and Throttling

Before running your scraper, configure settings like request headers, timeout values, and concurrent requests. This ensures your scraper appears as a legitimate user and avoids overloading Crunchbase's servers.

Scrapy allows you to set a download delay between requests using the DOWNLOAD_DELAY setting. Respect Crunchbase's robots.txt file and consider using an API key if available to stay within usage limits.
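A conservative Scrapy `settings.py` might look something like the sketch below. The setting names are standard Scrapy settings, but the specific values and the user-agent string are illustrative assumptions, not limits published by Crunchbase.

```python
# settings.py (sketch; values are illustrative assumptions)

# Honor the rules in the site's robots.txt file.
ROBOTSTXT_OBEY = True

# Wait between requests and avoid parallel hits on the same domain.
DOWNLOAD_DELAY = 2.0
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# Give up on a response after 30 seconds.
DOWNLOAD_TIMEOUT = 30

# Let Scrapy slow down automatically when the server responds slowly.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 2.0
AUTOTHROTTLE_MAX_DELAY = 30.0

# Identify your scraper honestly; the contact URL here is a placeholder.
USER_AGENT = "my-research-bot (+https://example.com/contact)"
```

Starting with a single concurrent request per domain and loosening it only if needed is usually the safer direction to tune from.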

3. Parse HTML Responses and Extract Data

Once you've retrieved the HTML content for a page, use Beautiful Soup or Scrapy's built-in parsers to navigate the DOM and extract relevant data points. Look for specific HTML tags, CSS classes, or XPath expressions that uniquely identify the desired elements.

For instance, to parse a company's funding rounds, you might target the `<div class="funding_rounds"></div>` element and extract child elements containing round details. Convert the parsed data into structured formats like dictionaries or custom item classes.
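The sketch below shows the general idea on a made-up HTML snippet. To stay dependency-free it uses the standard library's `html.parser` instead of Beautiful Soup or Scrapy selectors, and the markup (list items inside a `funding_rounds` div) is an assumption for illustration, not Crunchbase's real page structure.

```python
from html.parser import HTMLParser


class FundingRoundParser(HTMLParser):
    """Collect the text of each <li> inside <div class="funding_rounds">."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # div nesting depth inside the target div (0 = outside)
        self.in_item = False
        self.rounds = []

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag == "div":
                self.depth += 1
            elif tag == "li":
                self.in_item = True
                self.rounds.append("")
        elif tag == "div":
            classes = (dict(attrs).get("class") or "").split()
            if "funding_rounds" in classes:
                self.depth = 1

    def handle_endtag(self, tag):
        if tag == "div" and self.depth:
            self.depth -= 1
        elif tag == "li":
            self.in_item = False

    def handle_data(self, data):
        if self.in_item:
            self.rounds[-1] += data


# Made-up markup, used only for illustration.
sample_html = """
<div class="funding_rounds">
  <ul>
    <li>Seed: $1.5M (2021)</li>
    <li>Series A: $12M (2023)</li>
  </ul>
</div>
<ul><li>Unrelated item</li></ul>
"""

parser = FundingRoundParser()
parser.feed(sample_html)
parser.close()

# Convert the raw strings into dictionaries.
rounds = []
for text in parser.rounds:
    name, _, details = text.strip().partition(":")
    rounds.append({"round": name.strip(), "details": details.strip()})
```

With Beautiful Soup or Scrapy, the same extraction typically shrinks to a single CSS selector, which is why those libraries are the usual choice for larger scrapers.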

4. Store Scraped Data for Further Analysis

As you extract data points, store them in a format suitable for further analysis and aggregation. Scrapy's Item Pipeline allows you to process and store scraped items in a database or export them as JSON or CSV files.

Consider using a PostgreSQL or MongoDB database to store structured company and investor data. This allows for efficient querying and integration with other tools for analysis and visualization.
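As a small, self-contained illustration of storing structured records, the sketch below uses SQLite from the standard library in place of PostgreSQL or MongoDB. The table layout, column names, and `save_rounds` helper are assumptions for this example; the same pattern carries over to a PostgreSQL driver with minor SQL changes.

```python
import sqlite3


def save_rounds(conn, rows):
    """Insert or update funding rounds keyed by (company, round_type)."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS funding_rounds (
               company TEXT NOT NULL,
               round_type TEXT NOT NULL,
               amount_usd INTEGER,
               PRIMARY KEY (company, round_type)
           )"""
    )
    conn.executemany(
        "INSERT OR REPLACE INTO funding_rounds "
        "VALUES (:company, :round_type, :amount_usd)",
        rows,
    )
    conn.commit()


# In-memory database and made-up rows, used only for illustration.
conn = sqlite3.connect(":memory:")
sample_rows = [
    {"company": "Acme", "round_type": "seed", "amount_usd": 1_500_000},
    {"company": "Acme", "round_type": "series_a", "amount_usd": 12_000_000},
]
save_rounds(conn, sample_rows)

total = conn.execute(
    "SELECT SUM(amount_usd) FROM funding_rounds WHERE company = ?", ("Acme",)
).fetchone()[0]
```

The composite primary key means re-running the scraper overwrites existing rows instead of duplicating them.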

By leveraging Python libraries, configuring scraper settings, and extracting data into structured formats, you can efficiently scrape Crunchbase at scale. Stay tuned for the next section, where we'll cover best practices for respectful and reliable Crunchbase scraping.

Best Practices for Crunchbase Scraping

To ensure your Crunchbase scraping efforts are effective and ethical, it's crucial to follow best practices. This includes respecting Crunchbase's terms of service, implementing incremental scraping, monitoring scraper performance, and properly managing your scraped data. By adhering to these guidelines, you can maintain a reliable and sustainable scraping process.

1. Respect Crunchbase's Terms of Service

Before scraping Crunchbase, carefully review their terms of service and robots.txt file. These outline what is permissible in terms of accessing and using their data. Failure to comply can result in your IP being blocked, hindering your ability to scrape.

For example, Crunchbase may specify a maximum number of requests per second or prohibit scraping certain sections of the site. By staying within these boundaries, you demonstrate respect for the platform and reduce the risk of being flagged as a malicious bot.

2. Implement Incremental Scraping Techniques

Rather than scraping Crunchbase's entire database every time, employ incremental scraping to capture only new or updated information. This targeted approach minimizes the load on Crunchbase's servers and makes your scraping more efficient.

To achieve incremental scraping, keep track of previously scraped data and compare it against the current data. Only extract and store records that have changed since your last scraping session. This technique is particularly useful when monitoring company profiles for new funding rounds or leadership updates.
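One simple way to detect changes is to store a hash of each record and compare it on the next run, as sketched below. The record fields and the `fingerprint` and `changed_records` helpers are hypothetical; in practice the `seen` mapping would be persisted to disk or a database between sessions.

```python
import hashlib
import json


def fingerprint(record):
    """Stable hash of a record's content (key order does not matter)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_records(records, seen, key="id"):
    """Return records that are new or changed, updating `seen` in place."""
    changed = []
    for record in records:
        digest = fingerprint(record)
        if seen.get(record[key]) != digest:
            seen[record[key]] = digest
            changed.append(record)
    return changed


# Made-up records from two scraping sessions, used only for illustration.
seen = {}
first = changed_records(
    [{"id": "acme", "rounds": 1}, {"id": "globex", "rounds": 2}], seen
)
second = changed_records(
    [{"id": "acme", "rounds": 2}, {"id": "globex", "rounds": 2}], seen
)
```

Here the first session reports both records as new, while the second reports only the record whose round count changed.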

3. Monitor Scraper Performance and Adapt

Regularly monitor your scraper's performance metrics, such as success rates, response times, and error frequencies. These insights help identify potential issues before they escalate and allow you to fine-tune your scraping process.

If you notice a sudden drop in success rates or an increase in errors, investigate promptly. Crunchbase may have updated their site structure or implemented new anti-scraping measures. Be prepared to adapt your scraper's code to handle these changes and maintain smooth operation.
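A lightweight way to track these metrics is sketched below. The `ScrapeStats` class and its thresholds are assumptions for illustration; Scrapy also ships its own stats collector, which may be a better fit if you are already using that framework.

```python
from collections import Counter


class ScrapeStats:
    """Track response status codes and timing for a scraping run."""

    def __init__(self):
        self.status_counts = Counter()
        self.total_seconds = 0.0

    def record(self, status_code, elapsed_seconds):
        self.status_counts[status_code] += 1
        self.total_seconds += elapsed_seconds

    @property
    def total(self):
        return sum(self.status_counts.values())

    def success_rate(self):
        """Fraction of responses with a 2xx status code."""
        ok = sum(n for code, n in self.status_counts.items() if 200 <= code < 300)
        return ok / self.total if self.total else 0.0

    def should_alert(self, min_success_rate=0.9, min_requests=20):
        """True once enough requests were made and too many of them failed."""
        return self.total >= min_requests and self.success_rate() < min_success_rate


# Made-up request outcomes, used only for illustration.
stats = ScrapeStats()
for status, seconds in [(200, 0.4), (200, 0.5), (403, 0.2), (200, 0.6)]:
    stats.record(status, seconds)

rate = stats.success_rate()
alert = stats.should_alert(min_requests=4)
```

A burst of 403 or 429 responses is often the first visible sign of a block or rate limit, so counting status codes separately makes the cause easier to spot.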

4. Backup and Version Control Scraper Code

Treat your scraper code as you would any other valuable software asset by implementing proper backup and version control practices. Regularly back up your code to prevent data loss in case of system failures or accidental deletions. Use a version control system like Git to track changes to your scraper over time. This allows you to revert to previous working versions if needed and collaborate with others on scraper development. By maintaining a well-documented and version-controlled codebase, you ensure the longevity and reliability of your Crunchbase scraping pipeline.

By prioritizing these best practices - respecting terms of service, implementing incremental scraping, monitoring performance, and managing your code - you can scrape Crunchbase responsibly and effectively. Up next, we'll summarize the key takeaways from this guide on scraping Crunchbase.

We hope you've found this in-depth guide on scraping Crunchbase informative and actionable. From understanding Crunchbase's data offerings to navigating their site structure, setting up a robust scraping environment, and following best practices, you're now well-equipped to tackle scraping Crunchbase. Remember, with great power comes great responsibility - use your newfound knowledge wisely!

Conclusions

Mastering the art of scraping Crunchbase unlocks a wealth of valuable business data for informed decision-making. This guide walked you through:

- Understanding the diverse datasets available on Crunchbase, from company financials to investor preferences

- Navigating Crunchbase's site structure to efficiently scrape profile data at scale

- Setting up a robust scraping environment and workflow using Python libraries and best practices

- Adhering to Crunchbase's terms of service while responsibly extracting and managing scraped data

By following the techniques outlined in this step-by-step guide, you can confidently scrape Crunchbase and leverage its rich data for your business needs. Don't let the fear of missing out on valuable insights hold you back - start scraping Crunchbase today!