Install Ollama, download DeepSeek, then run it locally for full control.

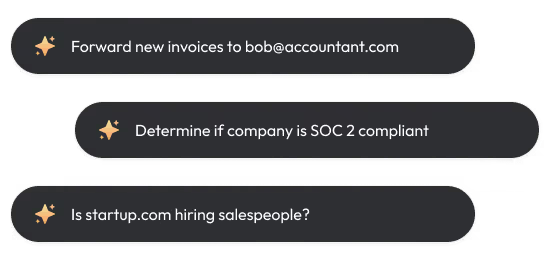

By the way, we're Bardeen, we build a free AI Agent for doing repetitive tasks.

If you're setting up DeepSeek, you might be interested in Bardeen's automated enrichment tools. They save time and streamline workflows by automating repetitive tasks.

Curious about running DeepSeek locally on your own hardware? With the right setup, you can use the model without relying on cloud servers or API limits. Imagine having a ChatGPT-like experience right on your computer, with full control over your data and response times bounded by your own hardware rather than by network latency or rate limits.

In this comprehensive guide, we'll walk you through the process of setting up DeepSeek R1 models locally, optimizing performance, and creating a great user interface. Get ready to level up your AI game and discover the benefits of running DeepSeek in your own environment. Let's dive in!

Setting Up DeepSeek-R1 Locally With Ollama

Running DeepSeek-R1 on your own hardware provides several key benefits. It ensures your data remains private by processing everything locally. You have full control over the model without relying on external APIs. And by avoiding API fees, running DeepSeek-R1 locally is highly cost-efficient.

1. Install Ollama To Simplify Local LLM Deployment

Ollama makes it easy to run large language models like DeepSeek-R1 on your own machine. It downloads pre-quantized model builds and manages loading them onto your CPU or GPU for you. To get started, simply download and install Ollama from the official website.

2. Download DeepSeek-R1 Model With a Single Command

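Once you have Ollama installed, open a terminal and run:

    ollama run deepseek-r1

This downloads the model on first use and opens an interactive prompt. Note that the untagged name pulls Ollama's default build, which is one of the smaller distilled variants rather than the full 671B parameter DeepSeek-R1 model. The full model is available under the tag deepseek-r1:671b and needs far more memory than a typical desktop has. If your hardware can't handle the large model, Ollama provides distilled versions ranging from 1.5B to 70B parameters that you can select by tag instead, for example ollama run deepseek-r1:7b.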

3. Serve DeepSeek-R1 as an API For Easy Integration

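To make DeepSeek-R1 available for use in other applications, start the Ollama server:

    ollama serve

This starts the Ollama server, which exposes your installed models through a local HTTP API (by default at http://localhost:11434). If you installed the desktop app, the server may already be running in the background. You can then call the API from your own code to generate text, answer questions, and more.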
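Before calling the API from code, it can help to confirm that the server is reachable and that a DeepSeek-R1 build is installed. The following is a minimal sketch using only the Python standard library. It assumes Ollama's default address (http://localhost:11434) and its GET /api/tags endpoint, which lists local models; the helper names are illustrative and not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def installed_models(tags_payload):
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def has_model(tags_payload, family="deepseek-r1"):
    """Return True if any installed tag (e.g. 'deepseek-r1:7b') is in the family."""
    return any(name.split(":")[0] == family for name in installed_models(tags_payload))


def fetch_tags(base_url=OLLAMA_URL, timeout=5.0):
    """Ask the running server which models it has (raises URLError if it is down)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, has_model(fetch_tags()) should tell you whether a deepseek-r1 tag has already been pulled.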

4. Interact With DeepSeek-R1 Through Multiple Interfaces

Ollama provides several convenient ways to use DeepSeek-R1 once it's running locally:

- In the terminal via interactive CLI

- Programmatically through the HTTP API

- In your Python scripts and notebooks using the Ollama library

With local access to DeepSeek-R1, you can experiment freely, integrate it into your own applications, and build powerful AI-enabled tools and services.

Running DeepSeek-R1 locally using Ollama puts the power of large language models in your hands. You can use DeepSeek's capabilities while maintaining privacy, control, and cost-efficiency.

Next up, we'll dive into the details of using DeepSeek-R1 with Ollama and walk through building an example retrieval augmented generation application. You'll learn how to implement a complete locally-hosted AI solution powered by DeepSeek.

Using DeepSeek-R1 Locally: From CLI to Python Integration

Once you have DeepSeek-R1 running locally with Ollama, there are several ways to interact with the model. You can run inference directly in the terminal, access the model via API for integration with other applications, or use the Ollama Python library to incorporate DeepSeek into your Python projects. Let's explore each method and see how you can leverage DeepSeek-R1's capabilities in your local environment.

1. Interacting With DeepSeek-R1 via CLI

The simplest way to use DeepSeek-R1 is through the command line interface (CLI). Open a terminal window and run:

    ollama run deepseek-r1 "Your prompt here"

Replace "Your prompt here" with your desired input, and DeepSeek-R1 will generate a response directly in the terminal. If you omit the prompt, the same command opens an interactive chat session instead. This is great for quick experiments and testing out the model's capabilities.

2. Accessing DeepSeek-R1 Through the API

For more advanced use cases, you can interact with DeepSeek-R1 via the Ollama API. This allows you to integrate the model into your own applications and services. To make an API request, use a tool like curl against the server's default address:

    curl http://localhost:11434/api/generate -d '{"model": "deepseek-r1", "prompt": "Your prompt here", "stream": false}'

Replace "Your prompt here" with your input, and the API will return the generated response as JSON, with the text in the "response" field. Setting "stream" to false returns a single JSON object instead of a stream of partial results. This enables seamless integration with other software components.
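When streaming is left on (the default for Ollama's /api/generate endpoint), the server sends newline-delimited JSON objects, each carrying a fragment of text in its "response" field and a "done" flag on the last one. The sketch below shows one way to build the request and reassemble the fragments with the standard library alone. The address and model name are assumptions about your local setup, and the function names are illustrative.

```python
import json
import urllib.request


def build_generate_request(prompt, model="deepseek-r1",
                           base_url="http://localhost:11434"):
    """Build a POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def collect_stream(lines):
    """Join the 'response' fragments of a newline-delimited JSON stream."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the stream
    return "".join(parts)


def generate(prompt, **kwargs):
    """Send the prompt to a running Ollama server and return the full text."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return collect_stream(resp)
```

Calling generate("Your prompt here") requires the server to be running; collect_stream can be exercised on its own with any iterable of JSON lines.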


3. Using DeepSeek-R1 With Python and Ollama

If you prefer working in Python, the Ollama library provides a convenient way to access DeepSeek-R1. First, install the library:

    pip install ollama

Then, you can use the following code to generate a response:

    import ollama

    # Assumes the Ollama server is running and deepseek-r1 has been pulled
    response = ollama.generate(model="deepseek-r1", prompt="Your prompt here")

    # The generated text is in the "response" field
    print(response["response"])

This allows you to incorporate DeepSeek-R1 into your Python scripts and notebooks, opening up a wide range of possibilities for AI-powered applications.

4. Customizing DeepSeek-R1 to Your Needs

Running DeepSeek-R1 locally gives you full control over the model's configuration. You can adjust parameters like temperature, top_k, and top_p to control the randomness and diversity of the generated outputs. In the Python library, these are passed together in an options dictionary rather than as separate keyword arguments. For example:

    response = ollama.generate(model="deepseek-r1", prompt="Your prompt here", options={"temperature": 0.7, "top_k": 50, "top_p": 0.9})

By tweaking these settings, you can adjust DeepSeek-R1's behavior to suit your specific use case, whether it's open-ended generation, focused Q&A, or creative writing. Lower temperatures make answers more deterministic, while higher values produce more varied output.
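One way to keep sampling settings consistent across calls is to build the options dictionary in a single place and validate it before sending. This is a rough sketch: the default values mirror the example figures above, the range checks are general conventions for these parameters rather than limits documented by Ollama, and the helper names are hypothetical.

```python
def sampling_options(temperature=0.7, top_k=50, top_p=0.9):
    """Return an options dict for Ollama after basic sanity checks."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the interval (0, 1]")
    return {"temperature": temperature, "top_k": top_k, "top_p": top_p}


def generate_with_options(prompt, model="deepseek-r1", **overrides):
    """Generate text with validated sampling options (needs a running server)."""
    import ollama  # third-party: pip install ollama

    response = ollama.generate(
        model=model,
        prompt=prompt,
        options=sampling_options(**overrides),
    )
    return response["response"]
```

For instance, generate_with_options("Summarize this paragraph", temperature=0.2) would request a more focused answer than the defaults.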

DeepSeek-R1's local accessibility through Ollama empowers you to utilize the model's capabilities in a variety of ways. From quick CLI interactions to API integration and Python scripting, you can leverage DeepSeek-R1 to build powerful AI applications while maintaining privacy, control, and customization.

In the upcoming section, we'll dive into a practical example by building a retrieval-augmented generation (RAG) application using DeepSeek-R1, Gradio, and PDF document processing. Get ready to create an interactive Q&A system that showcases the potential of running DeepSeek-R1 on your own machine!

Building a RAG Application With DeepSeek-R1 and Gradio

Combining DeepSeek-R1's powerful language understanding with retrieval-augmented generation (RAG) enables you to create intelligent applications that can answer questions based on specific document contexts. In this section, we'll walk through the process of building a RAG application using DeepSeek-R1, Gradio, and a few essential Python libraries. By the end, you'll have a web interface that allows users to upload PDFs and ask questions related to their content.

1. Setting Up the Prerequisites

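Before diving into the code, ensure you have Python 3.8+ and the following libraries installed:

- Langchain - for building LLM-powered applications

- Chromadb - a vector database for efficient similarity searches

- Gradio - for creating the user interface

Install these dependencies using pip (drop the leading ! if you are running the commands in a terminal rather than a notebook cell):

    !pip install langchain chromadb gradio
    !pip install -U langchain-community

The later steps also use PyMuPDFLoader and the Ollama Python library, which require the pymupdf and ollama packages.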

With the setup complete, you're ready to start building your RAG application.

2. Extracting Text and Generating Embeddings

The first step is to process the uploaded PDF, extract its text, and generate document embeddings using DeepSeek-R1. The process_pdf function handles this:

- Load the PDF using PyMuPDFLoader

- Split the text into chunks with RecursiveCharacterTextSplitter

- Generate vector embeddings using OllamaEmbeddings

- Store the embeddings in a Chroma vector database for efficient retrieval

By generating embeddings, you can quickly find relevant document sections based on the user's question.
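The four steps above could be sketched roughly as follows. This is not necessarily the exact function the guide has in mind: the chunk size and overlap are illustrative values, the import paths assume recent langchain-community and langchain-text-splitters packages (they have moved between LangChain releases), and check_chunking is a hypothetical helper added for clarity.

```python
def check_chunking(chunk_size, chunk_overlap):
    """Validate splitter settings: the overlap must be smaller than the chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    return chunk_size, chunk_overlap


def process_pdf(pdf_path, chunk_size=500, chunk_overlap=100, model="deepseek-r1"):
    """Load a PDF, split it, embed the chunks, and return a retriever."""
    # Third-party imports are kept local so the module loads without them.
    # Assumed packages: langchain-community, langchain-text-splitters,
    # chromadb, pymupdf.
    from langchain_community.document_loaders import PyMuPDFLoader
    from langchain_community.embeddings import OllamaEmbeddings
    from langchain_community.vectorstores import Chroma
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    chunk_size, chunk_overlap = check_chunking(chunk_size, chunk_overlap)

    pages = PyMuPDFLoader(pdf_path).load()  # one Document per page
    splitter = RecursiveCharacterTextSplitter(
        chunk_size=chunk_size, chunk_overlap=chunk_overlap
    )
    chunks = splitter.split_documents(pages)

    # Embeddings are computed by the local Ollama server.
    embeddings = OllamaEmbeddings(model=model)
    vectorstore = Chroma.from_documents(documents=chunks, embedding=embeddings)
    return vectorstore.as_retriever()
```

Overlapping chunks reduce the chance that a relevant sentence is cut in half at a chunk boundary, at the cost of storing some text twice.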

3. Formatting the Retrieved Context

After retrieving relevant document chunks, you need to combine them into a single string for input to DeepSeek-R1. The combine_docs function accomplishes this by joining the page_content of each retrieved document with newline separators.

This step ensures that the model receives a well-formatted context to generate accurate answers.
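A minimal version of combine_docs might look like the sketch below. It assumes each retrieved item exposes a page_content attribute, as LangChain Document objects do, and it uses a blank line as the separator, which is one reasonable choice rather than a requirement.

```python
def combine_docs(docs, separator="\n\n"):
    """Join the text of retrieved documents into one context string."""
    return separator.join(doc.page_content for doc in docs)
```

Keeping a visible gap between chunks makes it easier for the model, and for you when debugging, to see where one excerpt ends and the next begins.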

4. Querying DeepSeek-R1 With Ollama

With the formatted context ready, it's time to query DeepSeek-R1 using Ollama. The ollama_llm function handles this process:

- Format the user's question and retrieved context into a structured prompt

- Send the prompt to DeepSeek-R1 via ollama.chat()

- Extract the model's response and remove any unwanted tags (e.g., <think>)

- Return the final answer

By leveraging Ollama, you can easily integrate DeepSeek-R1 into your RAG pipeline and obtain contextually relevant answers.
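The querying step could be sketched as follows. The prompt layout is an assumption (any clear question-plus-context format should work), and the regular expression assumes the model wraps its reasoning in a matching pair of <think> and </think> tags. The helper names build_prompt and strip_think are illustrative.

```python
import re

# Matches a reasoning block, including any line breaks inside it.
THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL)


def build_prompt(question, context):
    """Format the question and retrieved context into a single prompt."""
    return f"Question: {question}\n\nContext: {context}"


def strip_think(text):
    """Remove <think>...</think> blocks and surrounding whitespace."""
    return THINK_BLOCK.sub("", text).strip()


def ollama_llm(question, context, model="deepseek-r1"):
    """Ask the local model and return only its final answer."""
    import ollama  # third-party: pip install ollama

    response = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": build_prompt(question, context)}],
    )
    return strip_think(response["message"]["content"])
```

Stripping the reasoning block keeps the displayed answer short; if you want to inspect how the model reached its answer, return the raw content instead.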

5. Assembling the RAG Pipeline

The rag_chain function brings together the document retrieval, context formatting, and question-answering components:

- Retrieve relevant documents using the retriever

- Combine the retrieved documents into a formatted context string

- Query DeepSeek-R1 with the user's question and formatted context

- Return the model's answer

This pipeline ensures that DeepSeek-R1 generates well-informed responses based on the most relevant information from the uploaded PDF.
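As a sketch, the pipeline can be written so that the retriever and the model call are passed in as arguments, which makes it easy to test with stand-ins. It assumes the retriever offers an invoke method, as LangChain retrievers do, and that llm is any callable taking a question and a context string. The parameter names are illustrative.

```python
def rag_chain(question, retriever, llm, separator="\n\n"):
    """Retrieve relevant chunks, build the context, and query the model."""
    # 1. Find the chunks most similar to the question.
    docs = retriever.invoke(question)
    # 2. Merge them into one context string.
    context = separator.join(doc.page_content for doc in docs)
    # 3. Ask the model, grounding it in the retrieved context.
    return llm(question, context)
```

Because the dependencies are injected, the same function works with a Chroma-backed retriever in production and with simple fakes in a unit test.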

6. Designing the Gradio Interface

Finally, create a user-friendly web interface with Gradio to allow users to upload PDFs and ask questions. The ask_question function handles the UI interaction:

- Check if a PDF is uploaded

- Process the PDF and generate embeddings

- Pass the user's question and embeddings to the RAG pipeline

- Return the model's answer

Use gr.Interface to define the layout, specifying the PDF upload and question input components, and the text output for displaying the answer.

Launch the interface with interface.launch() to start the interactive document Q&A application.
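One possible shape for the interface code is shown below. The PDF-processing and pipeline functions are passed in as parameters so the handler can be tried without Gradio installed. The component labels, the title, and the message shown when no file is uploaded are assumptions, and make_ask_question and build_interface are hypothetical helper names.

```python
def make_ask_question(process_pdf, rag_chain):
    """Create the UI handler from a PDF processor and a RAG pipeline.

    process_pdf: callable taking a file path and returning a retriever.
    rag_chain:   callable taking (question, retriever) and returning text.
    """
    def ask_question(pdf_file, question):
        if pdf_file is None:
            return "Please upload a PDF first."
        # Gradio may pass a path string or a temp-file object, depending
        # on its version; both cases are handled here.
        path = getattr(pdf_file, "name", pdf_file)
        retriever = process_pdf(path)
        return rag_chain(question, retriever)

    return ask_question


def build_interface(ask_question):
    """Wrap the handler in a simple Gradio UI."""
    import gradio as gr  # third-party: pip install gradio

    return gr.Interface(
        fn=ask_question,
        inputs=[gr.File(label="Upload PDF"), gr.Textbox(label="Ask a question")],
        outputs="text",
        title="Ask questions about your PDF",
    )
```

With real implementations of the two helpers, build_interface(make_ask_question(process_pdf, rag_chain)).launch() would start the app. Note that this sketch re-embeds the PDF on every question; caching the retriever per file would avoid repeated work.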

You now have a powerful RAG application that combines DeepSeek-R1's language understanding with efficient document retrieval, all accessible through a sleek Gradio interface. Imagine the possibilities - from analyzing research papers to exploring legal contracts, your application empowers users to ask questions and obtain accurate, context-aware answers. Consider exploring AI sales automation to further enhance your application.

Congratulations on making it this far! You're well on your way to becoming a DeepSeek-R1 expert. Just remember, with great power comes great responsibility - don't let your new AI sidekick replace your own critical thinking skills!

Conclusions

Running DeepSeek-R1 locally empowers you to leverage its capabilities without relying on external servers, ensuring privacy and uninterrupted access. Bardeen's tools for automated enrichment and qualification can further enhance your workflow. In this comprehensive guide, you discovered:

- Setting up DeepSeek-R1 with Ollama for local use, providing privacy, uninterrupted access, and cost efficiency

- Interacting with DeepSeek-R1 locally through CLI, API, and Python for flexible integration and customization

- Building a RAG application using DeepSeek-R1, Gradio, and essential Python libraries for intelligent document Q&A

By mastering the art of running DeepSeek-R1 locally, you unlock a world of possibilities for AI-powered applications. Don't let server downtime or privacy concerns hold you back from exploring the full potential of this remarkable language model!
