Use Python libraries like Requests, BeautifulSoup, and Selenium to scrape ASPX pages.

By the way, we're Bardeen, we build a free AI Agent for doing repetitive tasks.

If you're scraping websites, check out our AI Web Scraper. It automates data extraction without coding, saving you time and effort.

Web scraping ASPX pages can be a daunting task for beginners due to their dynamic nature and unique state management techniques. In this step-by-step guide, we'll walk you through the process of scraping data from ASPX pages using Python, covering the essential tools, libraries, and best practices. By the end of this guide, you'll have a solid understanding of how to tackle the challenges of scraping ASPX pages and extract valuable data while staying within legal and ethical boundaries.

Understanding ASPX and Its Challenges for Web Scraping

ASPX pages are dynamic web pages generated by Microsoft's ASP.NET framework. Unlike static HTML pages, ASPX pages rely on server-side processing to generate content dynamically based on user interactions and other factors. This dynamic nature presents unique challenges for web scraping.

One key difference between ASPX and standard HTML pages is how they handle state management. ASPX pages use hidden form fields like __VIEWSTATE and __EVENTVALIDATION to maintain the state of the page across postbacks. These fields store encoded data about the page's controls and their values, which the server uses to reconstruct the page state when processing postbacks.
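A quick way to see these fields from a scraper's point of view is to collect every hidden input whose name starts with a double underscore. The sketch below does this with BeautifulSoup. The helper name read_aspnet_state and the sample form are hypothetical, and real pages may carry other hidden fields (such as __VIEWSTATEGENERATOR) alongside the two named above.

```python
from bs4 import BeautifulSoup


def read_aspnet_state(html):
    """Return the ASP.NET hidden state fields (names starting with "__") as a dict."""
    soup = BeautifulSoup(html, "html.parser")
    state = {}
    for field in soup.find_all("input", attrs={"type": "hidden"}):
        name = field.get("name", "")
        if name.startswith("__"):
            # An input without a value attribute is submitted as an empty string
            state[name] = field.get("value", "")
    return state


# Hypothetical sample markup; real __VIEWSTATE values are much longer base64 strings
SAMPLE_PAGE = """
<form method="post" action="./Search.aspx" id="form1">
  <input type="hidden" name="__VIEWSTATE" id="__VIEWSTATE" value="dDwtMTA4MTY1" />
  <input type="hidden" name="__VIEWSTATEGENERATOR" id="__VIEWSTATEGENERATOR" value="CA0B0334" />
  <input type="hidden" name="__EVENTVALIDATION" id="__EVENTVALIDATION" value="wEWAgL" />
  <input type="text" name="txtQuery" />
</form>
"""

print(read_aspnet_state(SAMPLE_PAGE))
# {'__VIEWSTATE': 'dDwtMTA4MTY1', '__VIEWSTATEGENERATOR': 'CA0B0334', '__EVENTVALIDATION': 'wEWAgL'}
```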

The presence of these dynamic elements and state management techniques makes scraping ASPX pages more complex than scraping static HTML pages. Some challenges include:

- Handling dynamic content that changes based on user interactions or server-side processing

- Maintaining session state across multiple requests to ensure the scraper receives the expected content

- Dealing with event-driven changes to page content triggered by postbacks or AJAX calls

- Extracting data from complex, nested HTML structures generated by ASP.NET controls
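ASP.NET controls such as GridView usually render as ordinary HTML tables, but their id attributes are often prefixed with the IDs of their naming containers (for example, ctl00_MainContent_GridView1), and pager rows can contain nested tables. A minimal sketch, assuming a GridView-style table whose header row uses th cells: match the table by id suffix with a CSS attribute selector, keep only rows that belong to that table, and turn each data row into a dict keyed by column header. The function name, id suffix, and sample markup are hypothetical.

```python
from bs4 import BeautifulSoup


def parse_gridview(html, id_suffix):
    """Turn a GridView-style table into a list of {header: cell text} dicts."""
    soup = BeautifulSoup(html, "html.parser")
    # [id$=...] matches ids that END with the suffix, so naming-container
    # prefixes such as "ctl00_MainContent_" do not matter
    table = soup.select_one(f'table[id$="{id_suffix}"]')
    if table is None:
        return []
    # Keep only rows of this table, not rows of tables nested inside its cells
    own_rows = [tr for tr in table.find_all("tr") if tr.find_parent("table") is table]
    headers, records = [], []
    for tr in own_rows:
        header_cells = tr.find_all("th", recursive=False)
        data_cells = tr.find_all("td", recursive=False)
        if header_cells and not headers:
            headers = [th.get_text(strip=True) for th in header_cells]
        elif headers and len(data_cells) == len(headers):
            records.append({h: td.get_text(strip=True) for h, td in zip(headers, data_cells)})
        # Anything else (e.g. a pager row with one colspan cell) is skipped
    return records


# Hypothetical GridView output with a pager row holding a nested table
SAMPLE_GRID = """
<table id="ctl00_MainContent_GridView1">
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
  <tr><td colspan="2"><table><tr><td>1</td><td><a href="#">2</a></td></tr></table></td></tr>
</table>
"""

print(parse_gridview(SAMPLE_GRID, "GridView1"))
# [{'Name': 'Widget', 'Price': '9.99'}, {'Name': 'Gadget', 'Price': '24.50'}]
```

Matching on the id suffix rather than the full id keeps the scraper working if the page's master page or container layout changes the generated prefix.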

To successfully scrape data from ASPX pages, you need to understand these challenges and employ techniques to handle them effectively. This may involve using specialized web scraper tools and libraries that can simulate user interactions, manage session state, and parse dynamic content. In the following sections, we'll explore how to tackle these challenges using Python and its ecosystem of web scraping libraries.

Tools and Libraries for Scraping ASPX Pages with Python

Python offers a rich ecosystem of libraries that can be used together to tackle the challenges of scraping ASPX pages. Three key libraries are Requests, BeautifulSoup, and Selenium.

- Requests: This library simplifies sending HTTP requests and handling responses. Its Session object keeps cookies, such as the ASP.NET session cookie, across requests. That matters for ASPX pages, where each postback must also carry the __VIEWSTATE and __EVENTVALIDATION values from the previous response to maintain state.

- BeautifulSoup: A powerful library for parsing HTML and XML content. BeautifulSoup makes it easy to extract data from HTML received in responses, navigating through the document tree using various search methods.

- Selenium: A tool primarily used for web browser automation, Selenium can also be employed for web scraping. Its WebDriver component allows you to simulate user interactions with a page, which is essential for handling dynamic content and event-driven changes in ASPX pages.

When scraping ASPX pages, you can combine these libraries to create a robust solution:

- Use Requests to send the initial GET request and retrieve the page content, including the __VIEWSTATE and __EVENTVALIDATION values.

- Employ BeautifulSoup to parse the HTML and extract the necessary form data and other relevant information.

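- Use Selenium WebDriver to simulate user interactions, such as clicking buttons or filling out forms, which trigger postbacks and update the page content. Selenium drives its own browser session, separate from any Requests session, so it is most useful when a postback relies on JavaScript that is hard to replay with Requests alone.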

- Parse the updated HTML using BeautifulSoup to extract the desired data from the dynamically generated content.
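The Selenium half of this combined workflow can be sketched as follows. It assumes Selenium 4 and a local Chrome install, and every element ID and CSS selector is a hypothetical parameter you supply. After clicking, it waits for the clicked button to go stale, which assumes the postback re-renders that button (as a full-page postback does), then hands driver.page_source to BeautifulSoup. Selenium is imported inside the function so the parsing helper also works without a browser installed.

```python
from bs4 import BeautifulSoup


def table_rows(html, table_css):
    """Return each row of the matched table as a list of cell texts."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.select_one(table_css)
    if table is None:
        return []
    return [
        [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        for tr in table.find_all("tr")
    ]


def search_with_browser(url, input_id, query, button_id, table_css, timeout=10):
    """Drive a real browser through one postback and parse the resulting table."""
    # Imported here so table_rows() works without Selenium or a browser installed
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()  # Selenium 4.6+ can locate a matching driver itself
    try:
        driver.get(url)
        box = driver.find_element(By.ID, input_id)
        box.clear()
        box.send_keys(query)
        button = driver.find_element(By.ID, button_id)
        button.click()
        wait = WebDriverWait(driver, timeout)
        # Assumption: the postback re-renders the button, so the old element goes stale
        wait.until(EC.staleness_of(button))
        wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, table_css)))
        return table_rows(driver.page_source, table_css)
    finally:
        driver.quit()
```

A call might look like search_with_browser("https://example.com/Search.aspx", "txtQuery", "widgets", "btnSearch", 'table[id$="GridView1"]'), where the URL, IDs, and selector are placeholders. Because ASP.NET often prefixes client IDs (for example, ctl00_MainContent_txtQuery), check the rendered HTML for the exact values.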

By leveraging the strengths of each library and understanding how they complement each other, you can build a powerful Python-based web scraper capable of handling the intricacies of ASPX pages.

You can save more time on repetitive tasks like this by using Bardeen's web scraper playbook. Automate scraping and focus on what really matters.

Step-by-Step Guide to Scraping Data from ASPX Pages

Scraping data from ASPX pages requires a systematic approach to handle the dynamic nature of these pages and the challenges posed by ASP.NET features like __VIEWSTATE and __EVENTVALIDATION. Here's a step-by-step guide to help you start scraping with Python:

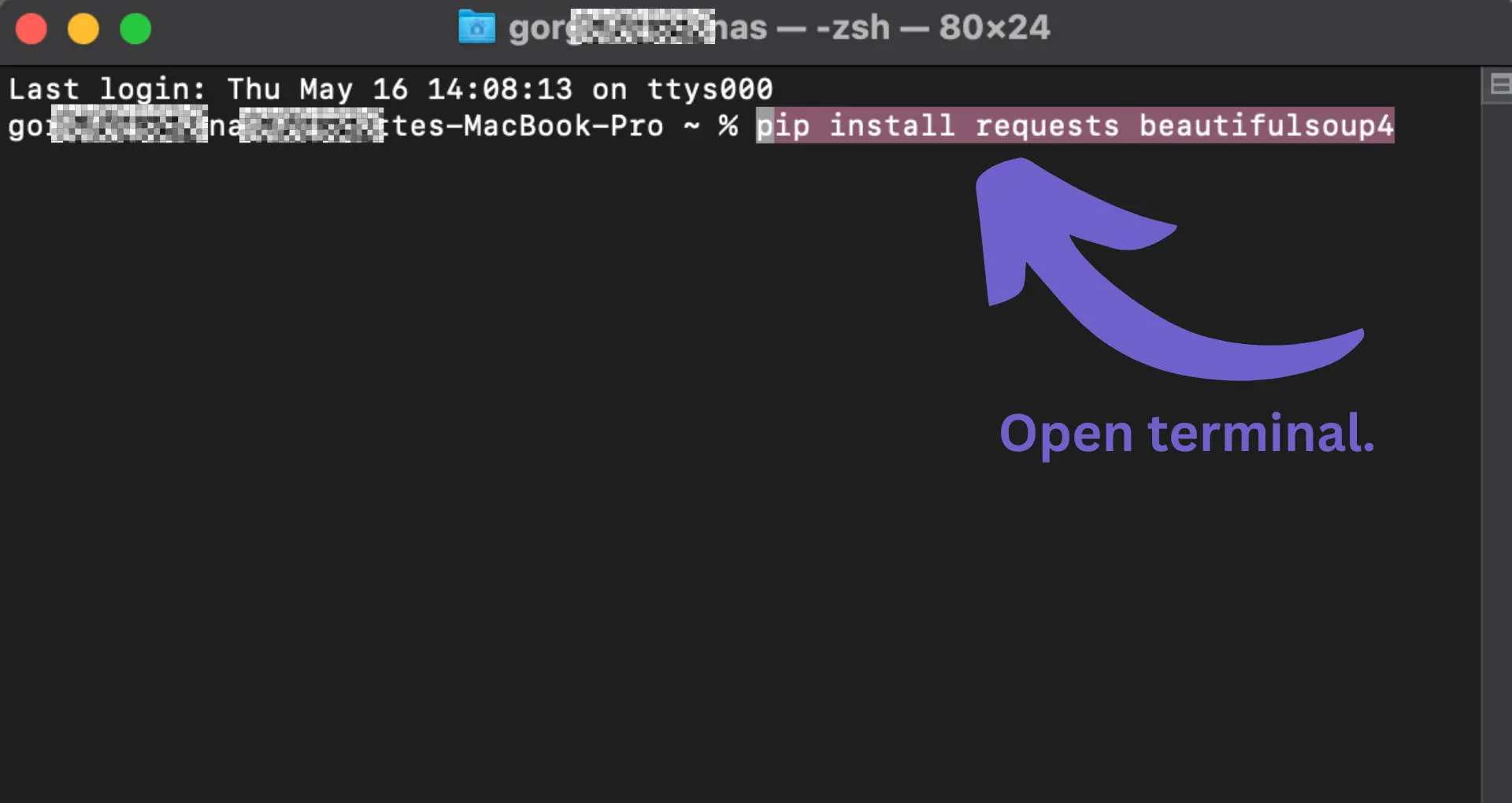

- Set up your Python environment and install the necessary libraries (for example, with pip install requests beautifulsoup4 selenium):

  - Requests for handling HTTP requests and managing session state

  - BeautifulSoup for parsing HTML content

  - Selenium for simulating user interactions with the page

- Configure Selenium WebDriver:

  - Install the driver for your browser (e.g., ChromeDriver for Google Chrome); Selenium 4.6 and later can download a matching driver automatically through Selenium Manager

  - Set up the WebDriver in your Python script

- Send an initial GET request to the ASPX page using Requests:

  - Retrieve the page content, including the __VIEWSTATE and __EVENTVALIDATION values

  - Parse the HTML using BeautifulSoup to extract the necessary form data

- Use Selenium WebDriver to simulate user interactions:

  - Navigate to the ASPX page

  - Locate and interact with the relevant form elements (e.g., filling out input fields, clicking buttons)

  - Trigger any necessary postbacks or event-driven content changes

- Extract the updated HTML content using BeautifulSoup:

  - Locate and parse the desired data from the dynamically generated content

  - Store the extracted data in a suitable format (e.g., CSV, JSON, database)

- Manage session state and handle form data:

  - Maintain cookies and session information across requests

  - Include the current __VIEWSTATE and __EVENTVALIDATION values, re-read from the most recent response, in each subsequent POST request so the server can restore the page state

- Implement error handling and retry mechanisms:

  - Handle exceptions and errors gracefully

  - Implement retry logic for failed requests or unexpected responses

- Respect website terms of service and robots.txt:

  - Review and comply with the website's terms of service and robots.txt file

  - Implement rate limiting and avoid aggressive scraping that may overload the server
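The Requests-only path through these steps (GET the page, copy its hidden fields, POST them back, retry transient errors, pause between requests) can be sketched as follows. This is a minimal illustration, not a drop-in scraper: the function names, user agent, and form field names are hypothetical, and it assumes the postback is an ordinary form POST to the same URL and that urllib3 is version 1.26 or newer. __EVENTTARGET and __EVENTARGUMENT are the fields ASP.NET's __doPostBack JavaScript fills in when a control such as a LinkButton triggers a postback.

```python
import time

import requests
from bs4 import BeautifulSoup
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(user_agent="example-aspx-scraper/0.1 (contact: you@example.com)"):
    """Session that keeps cookies and retries transient server errors."""
    session = requests.Session()
    session.headers["User-Agent"] = user_agent  # hypothetical identifier
    retry = Retry(
        total=3,
        backoff_factor=1,  # waits grow exponentially between attempts
        status_forcelist=[429, 500, 502, 503, 504],
        allowed_methods=["GET", "POST"],  # POST is not retried by default
    )
    adapter = HTTPAdapter(max_retries=retry)
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session


def build_postback_payload(html, event_target="", event_argument="", fields=None):
    """Copy the form's current hidden fields and add the simulated event.

    Visible inputs (text boxes, dropdowns, submit buttons) are not copied;
    pass them in `fields`.
    """
    soup = BeautifulSoup(html, "html.parser")
    payload = {
        tag["name"]: tag.get("value", "")
        for tag in soup.find_all("input", attrs={"type": "hidden"})
        if tag.get("name")
    }
    payload["__EVENTTARGET"] = event_target
    payload["__EVENTARGUMENT"] = event_argument
    payload.update(fields or {})
    return payload


def postback(session, url, html, delay=2.0, **kwargs):
    """POST the page back to itself and return the new HTML (with fresh __VIEWSTATE)."""
    time.sleep(delay)  # simple rate limit between requests
    response = session.post(url, data=build_postback_payload(html, **kwargs), timeout=30)
    response.raise_for_status()
    return response.text
```

For example, GridView paging links usually post back with the grid's UniqueID as the event target and an argument such as Page$2, so fetching page two might look like postback(session, url, first_html, event_target="ctl00$MainContent$GridView1", event_argument="Page$2") after first_html = session.get(url, timeout=30).text, where the target name is hypothetical. For a postback triggered by an asp:Button instead, browsers send the button's own name and value, so pass those in fields and leave event_target empty. Feed each returned page into the next call so the state fields stay current.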

By following this step-by-step approach and leveraging the power of Python libraries like Requests, BeautifulSoup, and Selenium, you can effectively scrape data from ASPX pages while handling the complexities of ASP.NET state management and dynamic content.

Best Practices and Legal Considerations in Web Scraping

When scraping data from websites, it's crucial to consider the ethical and legal implications of your actions. Here are some best practices and legal considerations to keep in mind:

- Respect the website's terms of service and robots.txt file:

  - Review and comply with the website's terms of service regarding data scraping

  - Check the website's robots.txt file for any restrictions on scraping and adhere to them

- Manage your scraping rate and avoid aggressive scraping:

  - Limit the frequency of your requests to avoid overloading the website's server

  - Implement delays between requests to mimic human browsing behavior

- Be transparent about your scraping activities:

  - Identify your scraper with a unique user agent string

  - Provide a way for website owners to contact you if they have concerns about your scraping

- Handle scraped data responsibly:

  - Use scraped data only for its intended purpose and don't share it publicly without permission

  - Ensure that any personal or sensitive information is handled securely and in compliance with data protection regulations like GDPR

- Obtain permission when scraping copyrighted or proprietary content:

  - Seek explicit permission from the website owner before scraping copyrighted material

  - Be aware that some data, such as product prices or real estate listings, may be proprietary and require a license or agreement to use legally

- Stay informed about legal developments related to web scraping:

  - Keep up with court cases and rulings that set precedents for web scraping legality

  - Consult with legal experts if you have concerns about the legality of your scraping activities
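The robots.txt and rate-limiting points can be handled with Python's standard library. A minimal sketch using urllib.robotparser: the user agent name and the sample robots.txt are hypothetical, and the rules are parsed from a string so the example runs offline. Against a live site you would create RobotFileParser("https://example.com/robots.txt") and call read() instead. The sketch honours a Crawl-delay directive when one is present and otherwise falls back to a fixed pause.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-aspx-scraper"  # hypothetical; use your own identifiable name

# Hypothetical robots.txt content for an offline example
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /Admin/
Crawl-delay: 5
"""


def load_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_urls(rules, urls, default_delay=2.0):
    """Yield only the URLs robots.txt allows for USER_AGENT, pausing between them."""
    delay = rules.crawl_delay(USER_AGENT) or default_delay
    first = True
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # skip disallowed paths entirely
        if not first:
            time.sleep(delay)  # honour Crawl-delay, or fall back to a fixed pause
        first = False
        yield url


rules = load_rules(SAMPLE_ROBOTS)
print(rules.can_fetch(USER_AGENT, "https://example.com/Admin/Users.aspx"))  # False
print(rules.crawl_delay(USER_AGENT))  # 5
```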

By following these best practices and staying mindful of the legal landscape surrounding web scraping, you can collect data ethically and minimize the risk of legal issues. Remember, just because data is publicly accessible doesn't always mean it's legal or ethical to scrape without permission. When in doubt, err on the side of caution and seek legal advice.

Automate ASPX Scraping with Bardeen

Scraping ASPX pages can be challenging due to their dynamic content and ASP.NET features like __VIEWSTATE and __EVENTVALIDATION. While manual methods provide some level of control, automating the web scraping process can significantly enhance efficiency and accuracy. Bardeen, with its advanced Scraper integration, enables users to automate this process, capturing the dynamic content of ASPX pages effortlessly.

Here are examples of how Bardeen can streamline your web scraping tasks:

- Get keywords and a summary from any website save it to Google Sheets: This playbook not only scrapes data from dynamic ASPX webpages but also synthesizes the captured content, extracting key insights and summarizing information for easy analysis and storage in Google Sheets.

- Get members from the currently opened LinkedIn group members page: Leverage the Scraper to extract valuable data from LinkedIn's dynamic content, perfect for market research and generating leads from group member information.

- Get web page content of websites: Automate the extraction of comprehensive content from ASPX pages, directly saving the output to Google Sheets. This playbook simplifies capturing the full scope of dynamic webpages for content repurposing or archival.

Embrace automation with Bardeen to bypass the complexities of scraping ASPX webpages, saving time and ensuring data accuracy. Start by downloading Bardeen today.
