Web scraping extracts data from websites using tools like Python.

By the way, we're Bardeen. We build a free AI Agent for doing repetitive tasks.

If you're scraping data, check out our AI Web Scraper. It automates data extraction without code, saving you time and effort.

Web scraping is a powerful technique for extracting data from multiple web pages, and Python is a popular language for this task. In this step-by-step guide, we'll walk you through the process of scraping data from multiple web pages using Python. We'll cover setting up your environment, using libraries like BeautifulSoup and Selenium, and exploring advanced techniques to handle complex scraping scenarios.

Introduction to Web Scraping with Python

Web scraping is the process of extracting data from websites. It's a powerful technique for gathering information from multiple web pages into a structured format like a spreadsheet or database. Python is a popular programming language for web scraping due to its extensive libraries and ease of use.

Key Python libraries for web scraping include:

- BeautifulSoup - for parsing HTML and XML documents

- Selenium - for automating web browsers

- Scrapy - a complete web scraping framework

These libraries make it simple to fetch website content and extract the desired data. With web scraping tools, you can collect large amounts of data from multiple pages to analyze trends, monitor competitors, generate leads, and more.

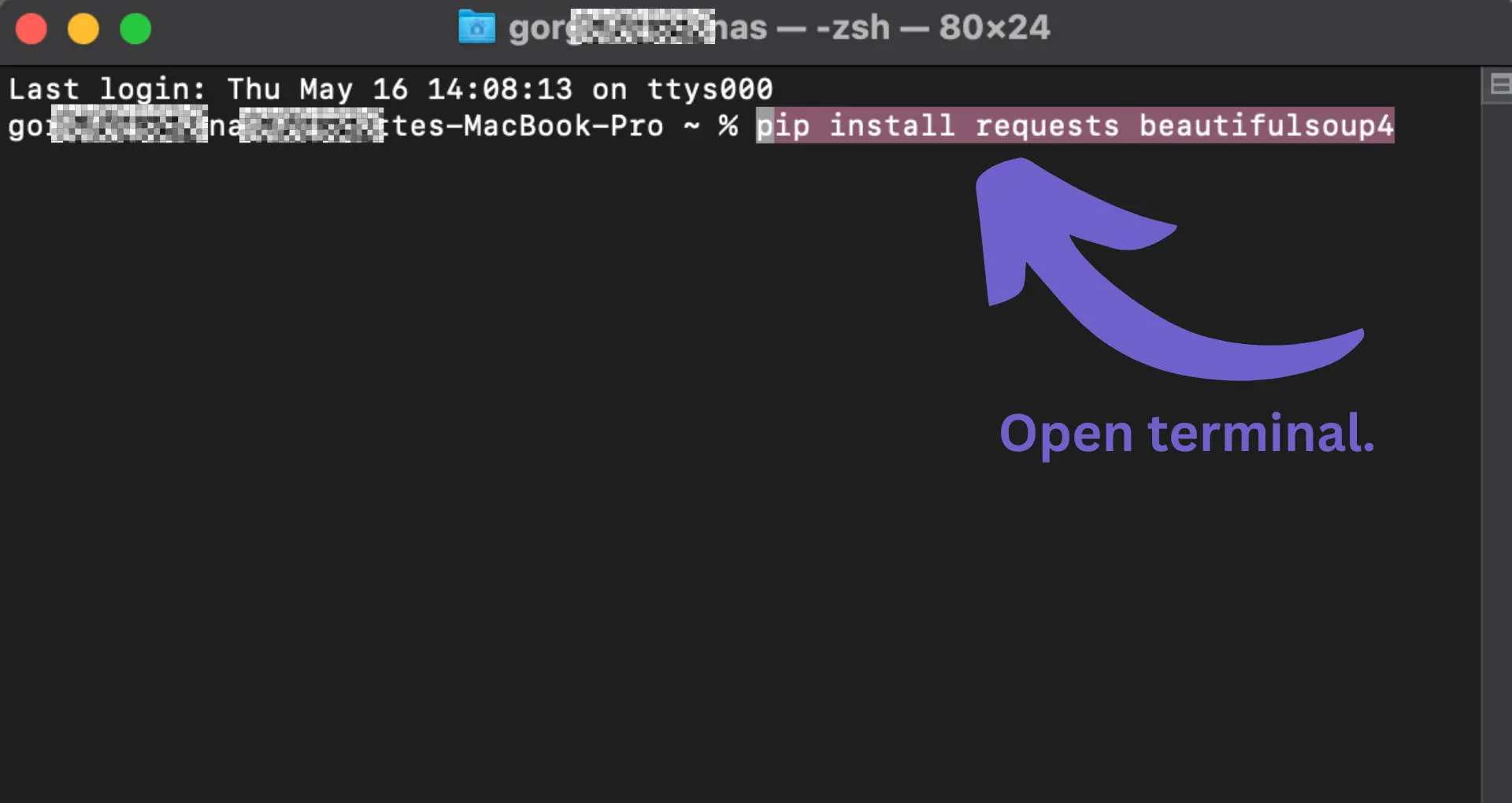

Setting Up Your Python Environment for Scraping

To start web scraping with Python, you need to set up your environment with the necessary tools and libraries. Here's a step-by-step guide:

- Install Python: Download and install the latest version of Python from the official website (python.org/downloads). Choose the appropriate installer for your operating system and follow the installation instructions.

- Set up a virtual environment (optional but recommended): Create a virtual environment to keep your project's dependencies separate from other Python projects. Open a terminal or command prompt and run:

```bash
python -m venv myenv
```

Activate the virtual environment on Linux/Mac:

```bash
source myenv/bin/activate
```

or on Windows:

```
myenv\Scripts\activate
```

- Install required libraries: Use pip, the Python package manager, to install the essential libraries for web scraping:

```bash
pip install requests beautifulsoup4 lxml
```

- requests: A simple, yet elegant, HTTP library for sending HTTP/1.1 requests.

- beautifulsoup4: A library for parsing HTML and XML documents.

- lxml: A fast and feature-rich library for processing XML and HTML.
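Before writing any scraping code, it can help to confirm that the libraries are importable from the active environment. The snippet below is a minimal sketch using only the standard library; `missing_modules` is an illustrative helper name. Note that import names can differ from pip package names (`beautifulsoup4` is imported as `bs4`). If you plan to follow the Selenium and Scrapy examples later in this guide, install them the same way with `pip install selenium scrapy`.

```python
import importlib.util

# Import names, not pip package names: beautifulsoup4 is imported as "bs4".
REQUIRED_MODULES = ["requests", "bs4", "lxml"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All scraping libraries are installed.")
```

Run it with the virtual environment activated; if anything is reported missing, rerun the `pip install` command inside that environment.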

With these steps completed, you're ready to start writing your Python web scraping code. In the next section, we'll dive into extracting data from web pages using these powerful libraries.

Save time and focus on coding by using Bardeen playbooks to automate data extraction tasks.

Extracting Data from Single and Multiple Web Pages

To scrape data from a single web page using Python, you can use the BeautifulSoup library along with the requests library. Here's how:

- Use requests to fetch the HTML content of the web page you want to scrape:

```python
import requests
from bs4 import BeautifulSoup

page = requests.get('https://example.com')
```

- Create a BeautifulSoup object and parse the HTML:

```python
soup = BeautifulSoup(page.content, 'html.parser')
```

- Use BeautifulSoup methods to locate and extract the desired data. For example, to find all `<a>` tags:

```python
links = soup.find_all('a')
```

- Process and store the extracted data as needed.
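Putting these steps together, here is a minimal sketch of a single-page scraper. It adds two things the steps leave out: a request timeout plus `raise_for_status()`, so HTTP errors are not silently parsed as if they were content, and `urljoin`, which turns relative `href` values into absolute URLs. The function names `extract_links` and `scrape_links` are illustrative, not part of any library.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def extract_links(html, base_url):
    """Return (text, absolute_url) pairs for every <a> tag that has an href."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    for a in soup.find_all("a", href=True):
        # urljoin resolves relative links like "/about" against the page URL.
        links.append((a.get_text(strip=True), urljoin(base_url, a["href"])))
    return links


def scrape_links(url):
    """Fetch one page and extract its links."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return extract_links(response.text, url)
```

Keeping parsing separate from fetching (`extract_links` takes an HTML string) makes it easy to test the parser against saved HTML without touching the network.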

To scale up and scrape data from multiple pages, you can handle pagination or dynamically generated URLs:

- For pagination, identify the URL pattern and iterate through the page numbers, making a request for each page.

- For dynamically generated URLs, analyze the structure and use techniques like regular expressions or string manipulation to generate the URLs to scrape.
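As a sketch of the URL-generation approaches described above, the helpers below build page URLs by string formatting, set a `page` query parameter with `urllib.parse`, and use a regular expression to read the total page count from a pager label. The label format `Page 1 of 12` and the `page` parameter name are assumptions for illustration; check the target site's actual markup and URLs.

```python
import re
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def page_urls(base_url, last_page):
    """Build one URL per page by appending the page number to base_url."""
    return [f"{base_url}{n}" for n in range(1, last_page + 1)]


def set_query_param(url, key, value):
    """Return url with query parameter `key` set to `value`, keeping other params."""
    parts = urlparse(url)
    query = parse_qs(parts.query)
    query[key] = [str(value)]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


def last_page_from_label(text):
    """Read the total page count from a label like 'Page 1 of 12' (assumed format)."""
    match = re.search(r"Page\s+\d+\s+of\s+(\d+)", text)
    return int(match.group(1)) if match else 1
```

For example, `[set_query_param("https://example.com/search?q=shoes", "page", n) for n in range(1, last_page_from_label(label) + 1)]` would produce one URL per page for a hypothetical search listing.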

Here's an example of scraping multiple pages using pagination:

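```python
import requests
from bs4 import BeautifulSoup

base_url = 'https://example.com/page/'

for page_num in range(1, 11):  # pages 1 through 10
    url = base_url + str(page_num)
    page = requests.get(url, timeout=10)
    if page.status_code != 200:
        break  # stop if a page is missing, e.g. past the last page
    soup = BeautifulSoup(page.content, 'html.parser')
    # Extract data from the current page
    # ...
```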

By iterating through the page numbers and making requests to each URL, you can scrape data from multiple pages efficiently.
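Iterating over a fixed range works when you know the page count in advance. When you don't, a common alternative is to follow each page's "next" link until there isn't one. The sketch below assumes the link matches the CSS selector `a.next` (a hypothetical selector; adjust it to the site) and takes the fetch function as a parameter so it can be tested without network access. `crawl_pages` is an illustrative name, and `max_pages` is a safety cap against endless loops.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def crawl_pages(start_url, fetch_html, next_selector="a.next", max_pages=50):
    """Yield (url, soup) for each page, following the "next" link until none is left.

    fetch_html is any callable that takes a URL and returns HTML text, for example
    lambda url: requests.get(url, timeout=10).text
    """
    url = start_url
    seen = set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        soup = BeautifulSoup(fetch_html(url), "html.parser")
        yield url, soup
        next_link = soup.select_one(next_selector)
        # Resolve relative hrefs such as "/list?page=2" against the current URL.
        if next_link is not None and next_link.get("href"):
            url = urljoin(url, next_link["href"])
        else:
            url = None
```

The `seen` set also guards against sites whose last page links back to an earlier one.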

Advanced Techniques in Python Web Scraping

When scraping more complex websites, you may encounter challenges like JavaScript-rendered content or the need to crawl multiple pages. Here are some advanced techniques to handle these scenarios:

Handling JavaScript-Rendered Content with Selenium

Some websites rely heavily on JavaScript to load content dynamically, so the HTML returned by requests may not contain the data you see in the browser. Selenium, a web automation tool, can help overcome this hurdle. With Selenium, you can:

- Automate a web browser to interact with the page

- Wait for the JavaScript to execute and load the desired content

- Extract the rendered HTML for further parsing

Here's a simple example of using Selenium with Python:

```python
from selenium import webdriver

driver = webdriver.Chrome()
try:
    driver.get("https://example.com")
    rendered_html = driver.page_source
finally:
    driver.quit()  # always close the browser, even if an error occurs

# Parse the rendered HTML using BeautifulSoup or other libraries
```

Crawling Multiple Pages with Scrapy

When scraping large websites or multiple pages, Scrapy, a powerful web crawling framework, can simplify the process. Scrapy provides:

- Built-in support for following links and crawling multiple pages

- Concurrent requests for faster scraping

- Middleware for handling cookies, authentication, and more

Here's a basic Scrapy spider example:

```python
import scrapy

class MySpider(scrapy.Spider):
    name = "myspider"
    allowed_domains = ["example.com"]  # keep the crawl on this site
    start_urls = ["https://example.com"]

    def parse(self, response):
        # Extract data from the current page
        # ...

        # Follow links to other pages
        for link in response.css("a::attr(href)"):
            yield response.follow(link, callback=self.parse)
```

By leveraging Selenium for JavaScript-rendered content and Scrapy for efficient crawling, you can tackle more advanced web scraping tasks in Python.


Best Practices and Legal Considerations

When scraping data from websites, it's crucial to adhere to best practices and legal guidelines to ensure ethical and respectful data extraction. Here are some key considerations:

Adhere to Robots.txt

Always check the website's robots.txt file, which specifies the rules for web crawlers. Respect the directives outlined in this file, such as disallowed paths or crawl-delay settings. Ignoring robots.txt can get your IP address blocked and may expose you to legal risk.
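Python's standard library can check robots.txt rules for you via `urllib.robotparser`. The sketch below parses an inline sample file so it runs offline; against a real site you would call `set_url("https://example.com/robots.txt")` followed by `read()` instead of `parse()`. The user-agent string `my-scraper` and the sample rules are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (hypothetical rules for illustration).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robot_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_robot_parser(SAMPLE_ROBOTS_TXT)
    agent = "my-scraper"
    for url in ["https://example.com/products/1", "https://example.com/private/data"]:
        print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")
    print("Crawl delay:", rules.crawl_delay(agent))  # None if not specified
```

Checking `can_fetch()` before each request, and using `crawl_delay()` as the minimum pause between requests, covers both directives mentioned above.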

Handle Rate Limiting

Avoid overloading websites with excessive requests. Implement rate limiting in your scraper to introduce delays between requests. This prevents your scraper from being mistaken for a denial-of-service attack and ensures a respectful crawling pace.
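A simple way to implement this is to enforce a minimum interval between consecutive requests. The `RateLimiter` class below is a hypothetical helper, not part of `requests` or any other library; it uses `time.monotonic()` so that system clock changes don't affect the spacing. The interval you choose is an assumption; where a site specifies a `Crawl-delay`, use at least that value.

```python
import time


class RateLimiter:
    """Enforce a minimum delay between consecutive calls to wait()."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between requests
        self._last = None  # monotonic timestamp of the previous request

    def wait(self):
        """Sleep just long enough that min_interval has passed since the last call."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()
```

In a scraping loop, create one limiter (for example `limiter = RateLimiter(1.0)`) and call `limiter.wait()` immediately before each `requests.get(...)`.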

Ethical Considerations

- Only scrape publicly available data and respect the website's terms of service

- Do not attempt to scrape private or sensitive information

- Consider the purpose and impact of your scraping activities

- Give credit to the original data source when appropriate

Legal Implications

Web scraping can have legal implications, depending on the jurisdiction and the nature of the scraped data. Some key legal aspects to consider:

- Copyright: Respect the intellectual property rights of website owners and content creators

- Terms of Service: Comply with the website's terms of service, which may restrict scraping activities

- Data Protection: Adhere to data protection regulations, such as GDPR, when scraping personal data

To mitigate legal risks, consult with legal professionals and establish a clear data collection policy that outlines ethical guidelines for web scraping within your organization.

By following best practices and considering the legal implications, you can ensure that your web scraping efforts are conducted in an ethical and compliant manner.

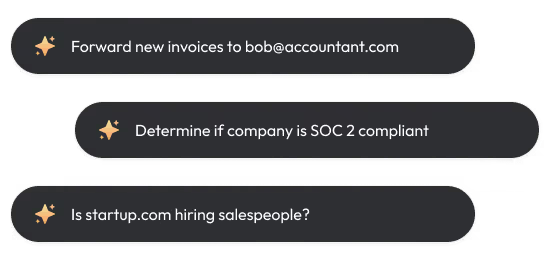

Automate Your Web Scraping with Bardeen Playbooks

Scraping data from multiple web pages is a vital process for gathering information efficiently from the web. While manual methods exist, automating this process using Bardeen can significantly enhance your productivity and accuracy. Automating web scraping tasks not only saves time but also allows you to collect data seamlessly and systematically.

Here are examples of automations you can build with Bardeen's playbooks to streamline your web scraping process:

- Get keywords and a summary from any website and save it to Google Sheets: This playbook automates the extraction of data from websites, creating brief summaries and identifying keywords, then storing the results in Google Sheets. Ideal for SEO and content research.

- Get web page content of websites: Extract website content from a list of links in your Google Sheets spreadsheet and update each row with the content. Perfect for content aggregation and competitive research.

- Get keywords and a summary from any website and save it to Coda: Extract data from websites, create summaries, identify keywords, and store the results in Coda. Streamline your research and data sourcing with this powerful automation.

Embrace the power of automation with Bardeen to make your web scraping tasks effortless and efficient. Start now by downloading the Bardeen app.
