Prepare your dataset, configure parameters, and optimize for DeepSeek fine-tuning.

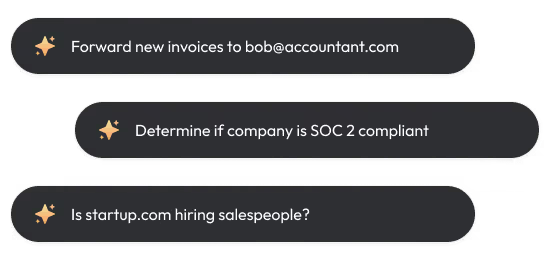

By the way, we're Bardeen, we build a free AI Agent for doing repetitive tasks.

Since you're learning about DeepSeek, you might find our AI automation tools helpful. They simplify tasks like configuring parameters and monitoring performance, saving you time and effort.

Fine-tuning a DeepSeek model adapts it to your specific tasks and data. In this guide, we'll walk you through preparing your dataset, configuring training parameters, and optimizing performance. Whether you're a beginner or an experienced practitioner, you'll learn how to get the most out of DeepSeek on your own use case. Let's dive in!

Preparing Your Dataset for Optimal DeepSeek Fine-Tuning

To achieve the best results when fine-tuning DeepSeek on your custom dataset, proper data preparation is crucial. The quality and structure of your training data directly impact the performance of the fine-tuned model.

1. Ensure High-Quality Data

Start by carefully curating and cleaning your dataset: remove irrelevant, duplicate, and poorly formatted examples. Data enrichment, such as filling in missing fields or adding context to sparse examples, can also improve consistency and accuracy.
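As a minimal sketch of this cleanup step, the Python below drops malformed, empty, and duplicate examples. It assumes each record is a dict with hypothetical `prompt` and `response` keys, and it treats two examples as duplicates when they match after collapsing whitespace and ignoring case. Real pipelines often add near-duplicate detection and content filters on top.

```python
import re
from typing import Iterable


def normalize(text: str) -> str:
    """Collapse runs of whitespace and trim, so trivially different copies match."""
    return re.sub(r"\s+", " ", text).strip()


def clean_examples(records: Iterable[dict]) -> list[dict]:
    """Drop malformed, empty, and duplicate prompt/response pairs.

    Assumes each record is a dict with hypothetical "prompt" and "response" keys.
    """
    seen = set()
    cleaned = []
    for rec in records:
        prompt = rec.get("prompt")
        response = rec.get("response")
        # Skip poorly formatted examples: missing fields or non-string values.
        if not isinstance(prompt, str) or not isinstance(response, str):
            continue
        prompt, response = normalize(prompt), normalize(response)
        # Skip examples that are empty after trimming.
        if not prompt or not response:
            continue
        # Exact-duplicate check on the normalized, case-folded pair.
        key = (prompt.casefold(), response.casefold())
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"prompt": prompt, "response": response})
    return cleaned


raw = [
    {"prompt": "What is 2+2?", "response": "4"},
    {"prompt": "what is  2+2? ", "response": "4"},   # duplicate after normalization
    {"prompt": "Summarize this.", "response": ""},   # empty response
    {"prompt": "Translate 'hi'", "response": None},  # malformed
    {"prompt": "Name a prime.", "response": "7"},
]
print(clean_examples(raw))
```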

2. Choose the Right Dataset Size

The right dataset size depends on the complexity of your task and your compute budget. Larger datasets generally yield better results but take longer to train, and for fine-tuning, a smaller set of clean, relevant examples often outperforms a larger noisy one. Aim for a balance that suits your specific needs.

3. Create Proper Dataset Splits

Divide your dataset into train, validation, and test sets. A typical split is 80% for training, 10% for validation, and 10% for testing. The validation set lets you monitor performance during training and tune hyperparameters; keep the test set untouched until the final evaluation so it gives an unbiased estimate.
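A simple way to produce the 80/10/10 split, sketched in Python: shuffle once with a fixed seed so the split is reproducible, then slice. The function name and default seed are illustrative, not part of any DeepSeek tooling.

```python
import random


def train_val_test_split(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then slice into train/validation/test."""
    if train_frac + val_frac >= 1.0:
        raise ValueError("train_frac + val_frac must leave room for a test set")
    items = list(examples)  # copy, so the caller's list is left untouched
    random.Random(seed).shuffle(items)  # reproducible shuffle
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder, roughly 10%
    return train, val, test


data = list(range(1000))
train, val, test = train_val_test_split(data)
print(len(train), len(val), len(test))  # 800 100 100
```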

4. Convert to Compatible Format

The exact format is set by your training framework rather than by DeepSeek itself; most pipelines, such as those built on Hugging Face libraries, read JSON Lines files or Hugging Face Datasets. Convert your dataset into the format your pipeline expects, and make sure each example contains the required fields (for example, a prompt and a response, or a list of chat messages).
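The sketch below writes prompt/response pairs as JSON Lines, one JSON object per line. The chat-style `messages`/`role`/`content` layout is an assumption: it matches the conversational format many Hugging Face-based trainers accept, but check which field names your own pipeline requires. It writes to an in-memory buffer so it runs anywhere; swap in a real file for training.

```python
import io
import json


def to_chat_record(example: dict) -> dict:
    """Map a prompt/response pair onto a chat-style "messages" record.

    The "messages"/"role"/"content" field names are an assumption; adjust them
    to whatever your training framework expects.
    """
    return {
        "messages": [
            {"role": "user", "content": example["prompt"]},
            {"role": "assistant", "content": example["response"]},
        ]
    }


def write_jsonl(examples, fp) -> int:
    """Write one JSON object per line; return the number of lines written."""
    count = 0
    for ex in examples:
        # ensure_ascii=False keeps non-English text readable in the file.
        fp.write(json.dumps(to_chat_record(ex), ensure_ascii=False) + "\n")
        count += 1
    return count


buffer = io.StringIO()  # swap for open("train.jsonl", "w", encoding="utf-8")
n = write_jsonl([{"prompt": "Name a prime.", "response": "7"}], buffer)
first = json.loads(buffer.getvalue().splitlines()[0])
print(n, first["messages"][1]["content"])  # 1 7
```

With the Hugging Face `datasets` library, a file written this way can typically be loaded with `load_dataset("json", data_files="train.jsonl")`.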

By following these data preparation guidelines, you'll set a strong foundation for fine-tuning DeepSeek. Well-prepared data is essential for training a high-performing model tailored to your specific task.

In the next section, we'll dive into configuring DeepSeek's training parameters to optimize performance and achieve the best results for your fine-tuned model.

Configuring DeepSeek's Training Parameters for Optimal Performance

To achieve the best results when fine-tuning DeepSeek, it's crucial to carefully configure the training parameters. The learning rate, batch size, number of epochs, and advanced techniques like learning rate scheduling can significantly impact model performance and training efficiency.

1. Selecting the Optimal Learning Rate

The learning rate determines the step size at which the model's weights are updated during training. A higher learning rate can lead to faster convergence but may cause instability, while a lower learning rate ensures stable training but may take longer to converge. Experiment with different learning rates based on your dataset size and model architecture to find the sweet spot.
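To see the trade-off in miniature, the toy sketch below runs plain gradient descent on f(w) = w², whose gradient is 2w, so each update multiplies w by (1 − 2·lr). It is not DeepSeek training, only an illustration of how the step size alone decides between slow progress, convergence, and divergence.

```python
def gradient_descent(lr: float, steps: int = 20, w0: float = 5.0) -> float:
    """Minimize f(w) = w**2 (gradient 2w) and return the final weight."""
    w = w0
    for _ in range(steps):
        w -= lr * 2 * w  # weight update: learning rate times the gradient
    return w


for lr in (0.01, 0.1, 1.1):
    print(f"lr={lr:<5} final |w| = {abs(gradient_descent(lr)):.4g}")
```

With these settings, lr=0.01 leaves w far from the minimum after 20 steps, lr=0.1 lands close to 0, and lr=1.1 overshoots by a growing amount on every step and diverges.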

2. Balancing Batch Size and Memory

Batch size is the number of training examples processed in each iteration. Larger batch sizes allow faster training by leveraging parallelism, but they also require more memory. Consider your hardware limits and find a balance that maximizes training efficiency without running out of memory. Gradient accumulation helps here: it sums gradients over several smaller micro-batches before each weight update, giving a larger effective batch size on limited memory.
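The sketch below shows why gradient accumulation works, using a one-parameter linear model with mean squared error in plain Python. Averaging the gradients of equal-sized micro-batches gives the same result as a single gradient over the full batch, so the effective batch size becomes per-device batch × accumulation steps × number of devices. The helper names are hypothetical.

```python
def grad_mse(w, batch):
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, micro_batch_size):
    """Accumulate gradients over micro-batches, then average.

    Matches the full-batch gradient when every micro-batch has the same size.
    """
    total = 0.0
    steps = 0
    for start in range(0, len(batch), micro_batch_size):
        micro = batch[start:start + micro_batch_size]
        total += grad_mse(w, micro)  # add this micro-batch's gradient to the running sum
        steps += 1
    return total / steps  # same as scaling each micro-batch loss by 1/steps


def effective_batch_size(per_device, accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device * accumulation_steps * num_devices


data = [(x, 3.0 * x + 1.0) for x in range(16)]
full = grad_mse(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch_size=4)
print(abs(full - accum) < 1e-9, effective_batch_size(4, 4))  # True 16
```

In PyTorch-style training loops, the same effect comes from calling `backward()` on each micro-batch loss divided by the number of accumulation steps and stepping the optimizer only after the last one. Hugging Face Trainer exposes this as the `gradient_accumulation_steps` argument.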

3. Determining the Ideal Number of Epochs

An epoch represents a complete pass through the training dataset. Too few epochs can lead to underfitting, where the model hasn't learned enough from the data. On the other hand, too many epochs can cause overfitting, where the model becomes overly specialized to the training data and fails to generalize well. Monitor the model's performance on a validation set and employ early stopping to find the optimal number of epochs.
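Early stopping boils down to a patience counter on the validation loss. This minimal sketch uses a hypothetical function name and made-up loss values; it reports which epoch's checkpoint to keep and the epoch at which training would halt.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a list of per-epoch validation losses.

    Training stops once the loss has failed to improve by more than min_delta
    for `patience` consecutive epochs; the checkpoint from best_epoch is kept.
    Epochs are 1-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)


# Hypothetical validation losses: improving, then rising as the model overfits.
losses = [1.20, 0.95, 0.81, 0.78, 0.80, 0.84, 0.90]
print(early_stopping_epoch(losses))  # (4, 6)
```

Hugging Face Transformers ships a ready-made version as `EarlyStoppingCallback` (see its `early_stopping_patience` argument), which relies on `load_best_model_at_end=True` so the best checkpoint is restored at the end of training.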

4. Leveraging Advanced Training Techniques

To further optimize DeepSeek's performance, consider advanced techniques like learning rate scheduling, which adjusts the learning rate over the course of training. Methods like cosine annealing or step decay can help the model converge more effectively. Additionally, mixed precision training, which performs much of the computation in 16-bit floating point (FP16 or BF16), can accelerate training and reduce memory use while typically preserving model quality.
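Both schedules are short formulas. The sketch below implements cosine annealing with a linear warmup, plus step decay, in plain Python; the base learning rate of 2e-5, the 10 warmup steps, and the decay every 40 steps are placeholder values, not DeepSeek recommendations.

```python
import math


def cosine_lr(step, total_steps, base_lr, warmup_steps=0, min_lr=0.0):
    """Linear warmup up to base_lr, then cosine annealing from base_lr to min_lr."""
    if warmup_steps > 0 and step < warmup_steps:
        # Ramps linearly from base_lr / warmup_steps up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at min_lr past the end of the schedule
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def step_decay_lr(step, base_lr, decay_every, gamma=0.5):
    """Multiply the learning rate by gamma once every decay_every steps."""
    return base_lr * gamma ** (step // decay_every)


base = 2e-5  # placeholder base learning rate
for s in (0, 10, 50, 100):
    print(s, f"{cosine_lr(s, 100, base, warmup_steps=10):.2e}", f"{step_decay_lr(s, base, 40):.2e}")
```

If you train with Hugging Face Trainer, you normally don't write this yourself: `lr_scheduler_type="cosine"` together with `warmup_steps` or `warmup_ratio` selects a similar schedule, and the `bf16` or `fp16` flags turn on mixed precision.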

By fine-tuning DeepSeek with the right set of training parameters, you can unlock much more of its potential and get strong results on your specific task. Experiment, iterate, and monitor your model's progress to find the optimal configuration.

Thanks for sticking with us this far! We know diving into the technical details of fine-tuning DeepSeek can be quite an adventure. But trust us, the rewards of a well-tuned model are worth the journey. Stay curious and keep exploring!


Conclusions

Fine-tuning DeepSeek is crucial for adapting the model to your specific task and achieving optimal performance. In this guide, you discovered:

- The importance of data quality, formatting, and selecting the right dataset size for fine-tuning DeepSeek

- How to configure training parameters like learning rate, batch size, and number of epochs for efficient training

Don't miss out on unlocking DeepSeek's full potential! Without mastering fine-tuning, you might be stuck with a subpar model. Happy fine-tuning!
