Use IMPORTHTML or IMPORTDATA functions to scrape web data in Google Sheets.

By the way, we're Bardeen. We build a free AI Agent for doing repetitive tasks.

If you're into web scraping, check out our AI Web Scraper. It scrapes data directly into your spreadsheets without any coding.

Web scraping is a powerful technique for extracting data from websites, enabling data-driven decision-making. Google Sheets, a widely accessible and user-friendly tool, makes web scraping achievable for non-programmers. In this step-by-step guide, we'll walk you through the process of setting up Google Sheets for web scraping and demonstrate how to extract data using built-in functions and custom scripts.

Introduction to Web Scraping Using Google Sheets


By leveraging the built-in functions of Google Sheets, you can easily extract data from web pages without the need for complex coding or specialized software. This allows you to quickly gather and analyze data from various online sources, streamlining your data collection process.

Google Sheets offers several advantages for web scraping:

- Familiarity and ease of use for those already comfortable with spreadsheets

- Accessibility from any device with an internet connection

- Integration with other Google Workspace tools for seamless data management

- Ability to automate web scraping tasks using macros or scripts


Setting Up Your Google Sheets for Web Scraping

To begin web scraping with Google Sheets, you'll need to set up your spreadsheet and familiarize yourself with the basic functions used for data extraction. Here's how to prepare your Google Sheets environment:

- Open a new Google Sheets document or navigate to an existing one where you want to store the scraped data.

- Decide on the structure of your spreadsheet, creating separate columns for each data point you plan to extract (e.g., title, description, price).

- Get to know the essential web scraping functions in Google Sheets:

  - IMPORTHTML: Extracts data from HTML tables and lists on a webpage.

  - IMPORTDATA: Imports data from CSV or TSV files hosted online.

  - IMPORTXML: Retrieves data from XML or HTML documents, including RSS and Atom feeds, using XPath queries.

These functions will be the foundation of your web scraping efforts in Google Sheets. To use them, you'll need to provide the URL of the webpage or file you want to scrape, as well as any additional parameters required by the specific function.

For example, to use IMPORTHTML, enter the function in a cell and pass it three arguments: the URL in quotation marks, the type of data you want to extract ("table" or "list"), and the index of that table or list on the page, starting at 1 (e.g., =IMPORTHTML("https://example.com/data", "table", 1)).
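If you plan to fill many cells with import formulas, a small helper can assemble the formula text for you. The JavaScript below is a minimal sketch, not part of any Google API: `buildImportHtmlFormula` is a hypothetical name. It checks that the type is "table" or "list" and that the index is a whole number of at least 1, then doubles any quotation marks in the URL, which is how a literal double quote is written inside a Sheets string. The commented lines show how, in Apps Script, the result could be placed in a cell with `Range.setFormula`.

```javascript
// Hypothetical helper: builds the text of an IMPORTHTML formula for a cell.
function buildImportHtmlFormula(url, query, index) {
  // IMPORTHTML only accepts "table" or "list" as the query type.
  if (query !== "table" && query !== "list") {
    throw new Error('query must be "table" or "list"');
  }
  // The index is 1-based: 1 means the first table or list in the HTML source.
  if (!Number.isInteger(index) || index < 1) {
    throw new Error("index must be an integer >= 1");
  }
  // Inside a Sheets string literal, a double quote is written as two double quotes.
  const quotedUrl = '"' + String(url).replace(/"/g, '""') + '"';
  return "=IMPORTHTML(" + quotedUrl + ', "' + query + '", ' + index + ")";
}

// In Apps Script (not plain JavaScript), the formula could be written to A1 like this:
// SpreadsheetApp.getActiveSheet().getRange("A1")
//   .setFormula(buildImportHtmlFormula("https://example.com/data", "table", 1));
```

Called with the values from the example above, it returns =IMPORTHTML("https://example.com/data", "table", 1).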

By mastering these functions and setting up your spreadsheet correctly, you'll be ready to start extracting data from the web using Google Sheets. For more advanced scraping, consider using a free AI web scraper to automate data collection.

Bardeen's free AI web scraper can save you a lot of time. It easily scrapes data directly into your spreadsheet, no coding needed.

Basic Web Scraping Techniques in Google Sheets

Google Sheets offers several built-in functions that make web scraping accessible to users without extensive programming knowledge. Two of the most commonly used functions for basic web scraping are IMPORTHTML and IMPORTDATA.

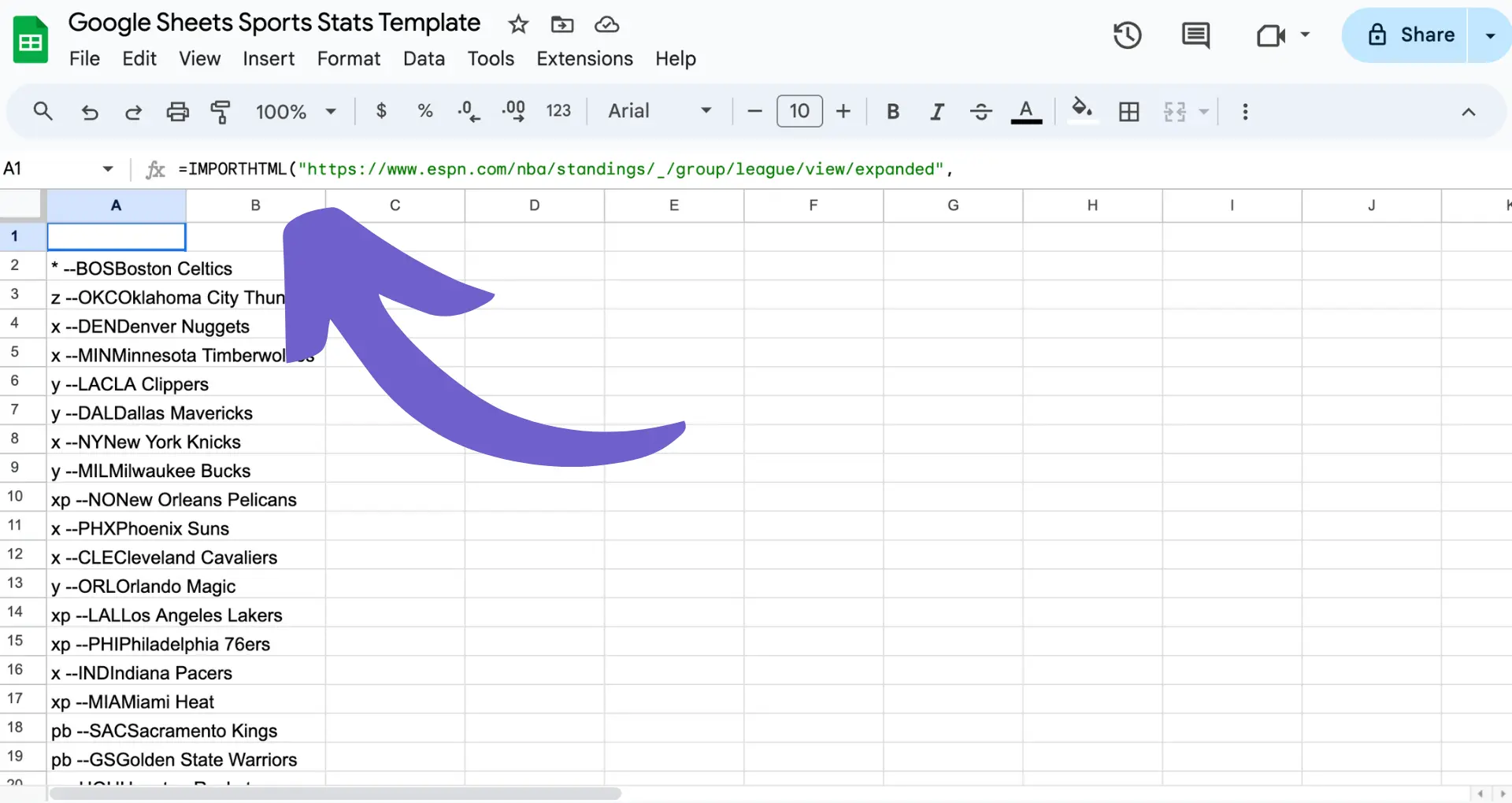

IMPORTHTML fetches data from tables and lists on web pages. To use it, you provide the URL of the webpage, specify whether you want to extract a "table" or a "list", and give the index of the target element. The index is required and starts at 1, counting tables (or lists) in the order they appear in the page's HTML source.

The syntax for IMPORTHTML is as follows:

=IMPORTHTML("url", "table/list", index)

For example, to extract the first table from a Wikipedia page, you would use:

=IMPORTHTML("https://en.wikipedia.org/wiki/Example", "table", 1)
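To make the index argument more concrete, here is a rough approximation of what a "table" query hands back: the nth table in the page source, turned into rows and columns of text. This is our own simplified sketch, not how Google implements IMPORTHTML. It assumes tables are not nested, uses regular expressions instead of a real HTML parser, ignores colspan and rowspan, and does not decode HTML entities; `extractTable` is a hypothetical name.

```javascript
// Rough approximation of IMPORTHTML(url, "table", index) applied to raw HTML.
// Assumptions: no nested tables, no colspan/rowspan, entities left undecoded.
function extractTable(html, index) {
  // Tables are counted in source order, starting at 1 (like IMPORTHTML's index).
  const tables = html.match(/<table(?:\s[^>]*)?>[\s\S]*?<\/table>/gi) || [];
  const table = tables[index - 1];
  if (!table) {
    throw new Error("No table at index " + index);
  }
  const rows = table.match(/<tr(?:\s[^>]*)?>[\s\S]*?<\/tr>/gi) || [];
  return rows.map(function (row) {
    // Header (<th>) and data (<td>) cells both become columns.
    const cells = row.match(/<t[hd](?:\s[^>]*)?>[\s\S]*?<\/t[hd]>/gi) || [];
    return cells.map(function (cell) {
      // Strip inner tags and collapse whitespace to get the visible cell text.
      return cell.replace(/<[^>]+>/g, "").replace(/\s+/g, " ").trim();
    });
  });
}
```

For a page whose first table has a Name/Price header row and one data row, extractTable(html, 1) yields a two-row array, which is the same grid shape IMPORTHTML spills into the sheet.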

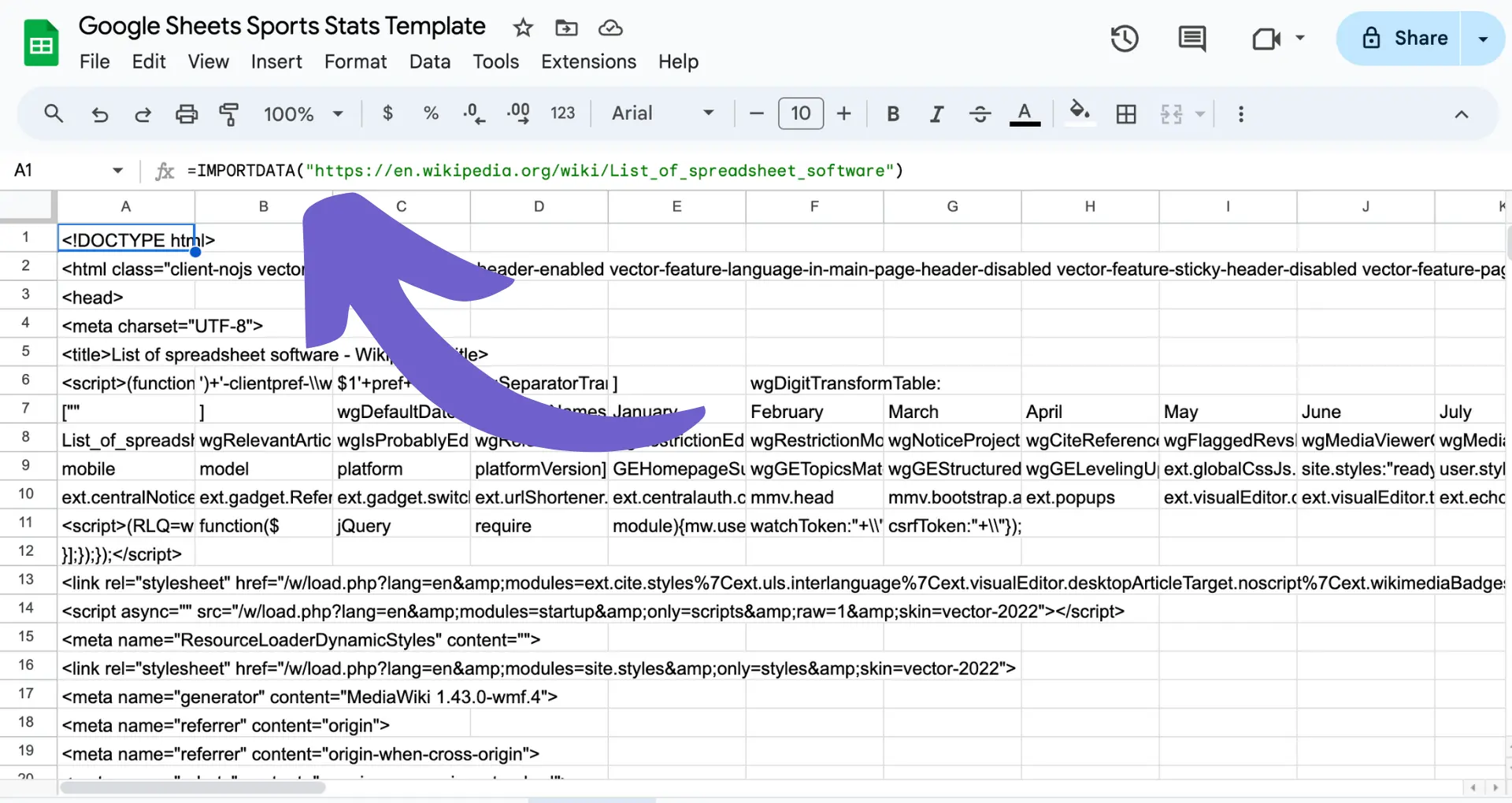

IMPORTDATA, on the other hand, is used for importing data from CSV or TSV files hosted online. This function is particularly useful when you need to extract data from structured files that are regularly updated, such as financial data or product listings.

To use IMPORTDATA, simply provide the URL of the CSV or TSV file:

=IMPORTDATA("https://example.com/data.csv")
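IMPORTDATA does the fetching and parsing for you. If you need the same data from a script instead, for example to write a static snapshot rather than a live formula, one possible approach in Apps Script is to fetch the file with `UrlFetchApp.fetch`, parse it with `Utilities.parseCsv`, and write it with `Range.setValues`. Because `setValues` requires every row to match the width of the target range, the sketch pads ragged rows first. `importCsvSnapshot` and `toRectangular` are hypothetical names; only `toRectangular` runs outside Apps Script.

```javascript
// Pads every row to the same width, because Range.setValues rejects ragged arrays.
function toRectangular(rows) {
  const width = rows.reduce(function (max, row) {
    return Math.max(max, row.length);
  }, 0);
  return rows.map(function (row) {
    const padded = row.slice();
    while (padded.length < width) {
      padded.push("");
    }
    return padded;
  });
}

// Apps Script only: copies a CSV file into the active sheet as plain values.
function importCsvSnapshot(url) {
  const csv = UrlFetchApp.fetch(url).getContentText();
  const rows = toRectangular(Utilities.parseCsv(csv));
  if (rows.length === 0 || rows[0].length === 0) {
    return; // nothing to write
  }
  SpreadsheetApp.getActiveSheet()
    .getRange(1, 1, rows.length, rows[0].length)
    .setValues(rows);
}
```

Unlike an IMPORTDATA formula, a snapshot written this way does not refresh on its own; you would re-run the function (manually or from a trigger) to update it.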

By mastering these two functions, you can import data from any page that publishes it as an HTML table or list, or as a CSV or TSV file, straight into Google Sheets for further analysis and manipulation. For advanced scraping tasks, consider using web scraper extensions to automate and enhance your workflows.

Advanced Data Extraction with Google Sheets

While basic web scraping in Google Sheets is straightforward using functions like IMPORTHTML and IMPORTDATA, more complex data structures require advanced techniques. This is where IMPORTXML and Google Apps Script come into play.

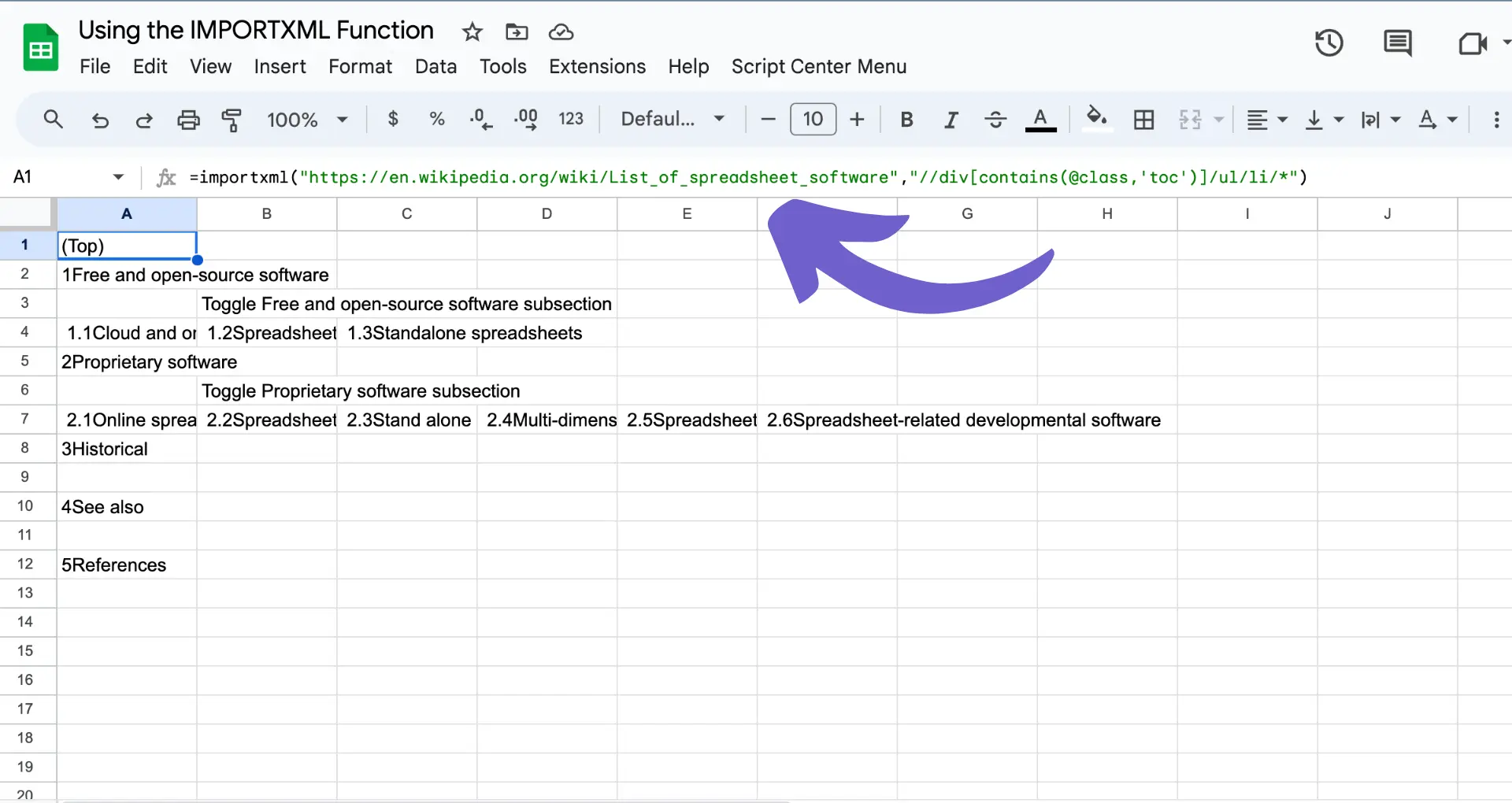

IMPORTXML allows you to extract data using XPath queries, giving you more control over the specific data you want to target. XPath is a query language used to navigate and select nodes in an XML or HTML document. By crafting precise XPath queries, you can pinpoint the exact elements you need to extract.

To use IMPORTXML, you provide the URL of the webpage and the XPath query as parameters:

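=IMPORTXML("https://example.com", "//div[@class='example']")

This formula returns the contents of every div element whose class attribute is exactly "example". Because the comparison is an exact string match, a div with class="example highlight" would not be returned.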
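Because `@class='example'` is an exact string comparison, pages that give an element several classes need a different predicate. The standard XPath 1.0 idiom built below matches `example` as a whole class token. The JavaScript helpers (`classXPath` and `buildImportXmlFormula` are hypothetical names) are only a convenience for generating formula text; they assume the class name contains no quote characters.

```javascript
// Builds an XPath 1.0 expression matching elements that have `className`
// as one of the space-separated tokens in their class attribute.
// Assumes className contains no quote characters.
function classXPath(tag, className) {
  return "//" + tag +
    "[contains(concat(' ', normalize-space(@class), ' '), ' " + className + " ')]";
}

// Wraps a URL and an XPath query in an IMPORTXML formula.
// Double quotes are doubled so they survive inside Sheets string literals.
function buildImportXmlFormula(url, xpath) {
  const quote = function (s) {
    return '"' + String(s).replace(/"/g, '""') + '"';
  };
  return "=IMPORTXML(" + quote(url) + ", " + quote(xpath) + ")";
}
```

For the class used above, the resulting formula to type into a cell is =IMPORTXML("https://example.com", "//div[contains(concat(' ', normalize-space(@class), ' '), ' example ')]"). The padding with spaces keeps `example` from also matching classes such as `example-wide`.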

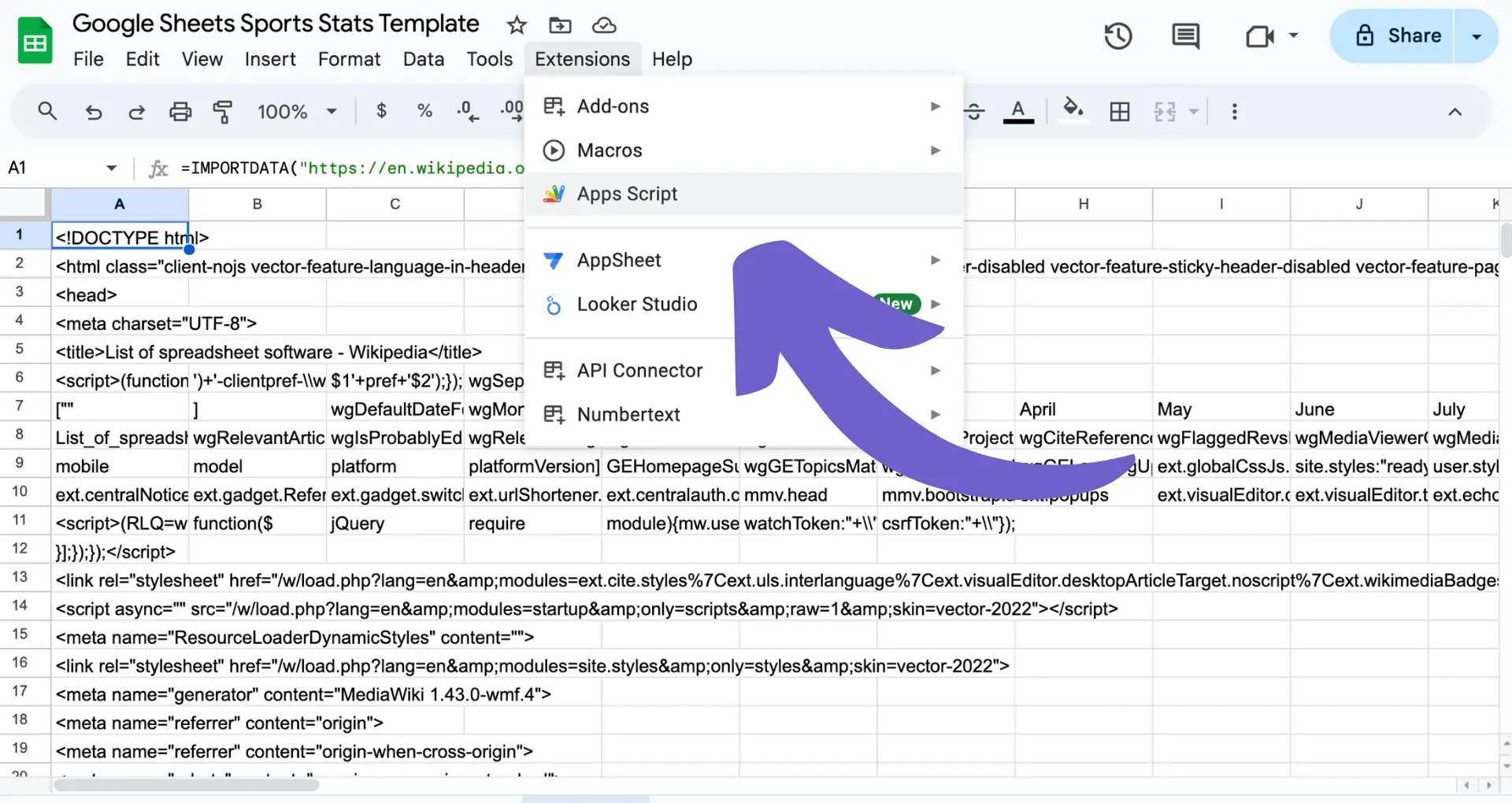

For more complex scraping tasks that go beyond the capabilities of built-in functions, you can use Google Apps Script, a JavaScript-based platform for extending Google Sheets and automating tasks.

With Apps Script, you can write custom functions to scrape data, manipulate it, and even interact with external APIs. For example, you can use the UrlFetchApp service to send HTTP requests and retrieve web page content, then parse the HTML with third-party Apps Script libraries such as Cheerio or Parser.

Here's a basic example of a custom scraping function in Apps Script:

function scrapeData(url) {
  var html = UrlFetchApp.fetch(url).getContentText();
  // Parse the HTML and extract data, e.g. the page <title>.
  var match = html.match(/<title[^>]*>([^<]*)<\/title>/i);
  return match ? match[1].trim() : "";
}
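If a scraping function runs often, for example from a time-driven trigger or from many cells pointing at the same page, each run fetches the page again and counts against Apps Script's daily URL Fetch quota. One way to reduce that, assuming results a few minutes old are acceptable, is to cache the extracted value. In this sketch the fetcher, extractor, and cache are passed in so the logic can be tested outside Apps Script; `scrapeWithCache` and `cachedScrape` are hypothetical names, while `UrlFetchApp.fetch` and `CacheService.getScriptCache()` are real Apps Script calls. Caching the small extracted string rather than the full HTML also keeps each entry within CacheService's per-value size limit.

```javascript
// Returns the cached result for `url` if present; otherwise fetches the page,
// extracts data from it, and stores the result for `ttlSeconds`.
// `fetchHtml(url)` returns HTML text; `cache` has get(key) and put(key, value, ttl),
// and get returns null on a miss (as Apps Script's Cache does).
function scrapeWithCache(url, fetchHtml, extract, cache, ttlSeconds) {
  const key = "scrape:" + url; // assumes URLs are short enough to use as cache keys
  const hit = cache.get(key);
  if (hit !== null) {
    return hit;
  }
  const result = extract(fetchHtml(url));
  cache.put(key, result, ttlSeconds);
  return result;
}

// Hypothetical Apps Script wrapper; CacheService keeps values for at most 6 hours.
function cachedScrape(url) {
  return scrapeWithCache(
    url,
    function (u) { return UrlFetchApp.fetch(u).getContentText(); },
    function (html) {
      const m = html.match(/<title[^>]*>([^<]*)<\/title>/i);
      return m ? m[1].trim() : "";
    },
    CacheService.getScriptCache(),
    600 // ten minutes
  );
}
```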

By combining the power of IMPORTXML and Apps Script, you can tackle more advanced web scraping tasks directly within Google Sheets, giving you the flexibility to extract and manipulate data according to your specific needs. For more powerful automation, consider using a GPT for Google Sheets to supercharge your workflow.

Bardeen's GPT in Spreadsheets can add ChatGPT to Google Sheets, helping you with summarizing, generating, formatting, and analyzing data effortlessly. Update your spreadsheets with AI in a snap!

Legal and Ethical Considerations in Web Scraping

While web scraping offers immense value for data-driven decision-making, it's crucial to understand the legal and ethical implications involved. Failing to comply with website terms of service, copyright laws, and data privacy regulations can lead to serious consequences.

When scraping data, always review the website's terms of service and robots.txt file to ensure you're not violating any rules. Some websites explicitly prohibit scraping, while others may have specific guidelines on how to scrape responsibly. It's essential to respect the website owner's wishes and adhere to their policies.
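Checking robots.txt can be partly automated. The function below is a deliberately simplified sketch: it reads only the `User-agent: *` group, treats each `Disallow` value as a path prefix, and ignores `Allow` lines, wildcards, and crawl-delay, so treat its answer as a first pass rather than a compliance check. `isPathDisallowed` is a hypothetical name; in Apps Script you could obtain the file text with `UrlFetchApp.fetch(origin + "/robots.txt").getContentText()`, where `origin` is the site's scheme and host.

```javascript
// Simplified robots.txt check: does any Disallow rule in the "User-agent: *"
// group prefix-match the given path? Allow rules and wildcards are ignored.
function isPathDisallowed(robotsTxt, path) {
  let inStarGroup = false;
  let lastWasAgent = false;
  const disallowed = [];
  robotsTxt.split(/\r?\n/).forEach(function (rawLine) {
    const line = rawLine.replace(/#.*$/, "").trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) {
      return; // blank or malformed line
    }
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share one group of rules.
      inStarGroup = (lastWasAgent && inStarGroup) || value === "*";
      lastWasAgent = true;
      return;
    }
    lastWasAgent = false;
    // An empty Disallow value means "nothing is disallowed", so skip it.
    if (field === "disallow" && inStarGroup && value !== "") {
      disallowed.push(value);
    }
  });
  return disallowed.some(function (prefix) {
    return path.startsWith(prefix);
  });
}
```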

Copyright laws also play a significant role in web scraping. Just because data is publicly available on a website doesn't mean you have the right to scrape and use it without permission. Make sure you're not infringing on any copyrights or intellectual property rights when extracting data.

Data privacy is another critical ethical consideration. When scraping personal information, such as user profiles or contact details, you must handle the data responsibly and comply with data protection regulations like GDPR or CCPA. Obtain consent when necessary and ensure that you're not collecting or storing sensitive information without proper authorization.

To scrape ethically, consider the following best practices:

- Use a public API when available instead of scraping

- Provide a clear user agent string identifying yourself and your purpose

- Scrape at a reasonable rate to avoid overloading the website's servers

- Only collect the data you need and respect the website's content

- Develop a formal data collection policy to guide your scraping efforts
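The "reasonable rate" advice can be enforced in a script by fetching pages one at a time and pausing between requests. This sketch assumes a fixed pause is acceptable to the target site; a fixed delay is the simplest choice, though honoring a site's published crawl-delay or backing off after errors would be more considerate. The fetch and sleep functions are passed in; in Apps Script they could wrap `UrlFetchApp.fetch` and `Utilities.sleep`. `fetchPolitely` is a hypothetical name.

```javascript
// Fetches each URL in order, waiting `delayMs` between consecutive requests.
// `fetchFn(url)` performs the request; `sleepFn(ms)` blocks for `ms` milliseconds.
function fetchPolitely(urls, fetchFn, sleepFn, delayMs) {
  const results = [];
  urls.forEach(function (url, i) {
    if (i > 0) {
      sleepFn(delayMs); // pause before every request except the first
    }
    results.push(fetchFn(url));
  });
  return results;
}

// Apps Script usage (hypothetical): two seconds between page requests.
// const pages = fetchPolitely(
//   ["https://example.com/a", "https://example.com/b"],
//   function (u) { return UrlFetchApp.fetch(u).getContentText(); },
//   function (ms) { Utilities.sleep(ms); },
//   2000
// );
```

Keep in mind that Apps Script executions have a maximum run time, so long delays across many URLs may need to be split over several runs.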

By prioritizing legal compliance and ethical practices, you can harness the power of web scraping while maintaining integrity and respect for website owners and individuals whose data you collect. For example, learning how to scrape LinkedIn responsibly can provide valuable insights.

Automate Google Sheets Scraping with Bardeen Playbooks

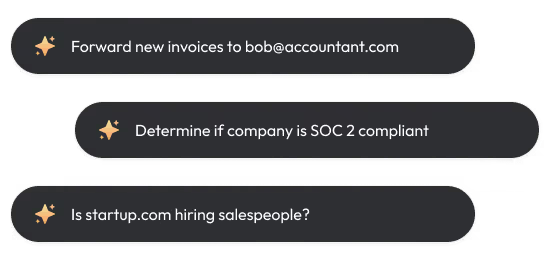

Web scraping with Google Sheets can be a manual process that requires a bit of setup and understanding of formulas. However, for those looking to automate and streamline data extraction directly into Google Sheets, Bardeen offers a powerful solution. By leveraging Bardeen's Scraper playbooks, users can save time and effort, ensuring that data collection is both efficient and accurate. Here are examples of how Bardeen can transform your web scraping tasks into automated workflows:

- Save data from the Google News page to Google Sheets: This playbook automates the process of extracting data from Google News and saving it directly into Google Sheets, perfect for those needing to keep up with current events or industry trends without manual data entry.

- Get data from Crunchbase links and save the results to Google Sheets: Ideal for market research, this playbook extracts crucial information from Crunchbase directly into Google Sheets, streamlining your competitive analysis and business intelligence efforts.

- Extract information from websites in Google Sheets using BardeenAI: This playbook uses BardeenAI's web agent to scan and extract any desired information from websites into a Google Sheet, making it a versatile tool for various data collection projects.

Automate your web scraping tasks with Bardeen and shift your focus to analyzing the data, not just collecting it. Download the Bardeen app at Bardeen.ai/download and start streamlining your data collection process today.
