Extract Amazon product data in 5 simple steps.

By the way, we're Bardeen, we build a free AI Agent for doing repetitive tasks.

If you're scraping Amazon, you might love Bardeen's AI Web Scraper. It automates data extraction and saves you time on market research and competitor analysis.

Extracting product data from Amazon can be a valuable tool for market research, competitor analysis, and pricing strategies. However, navigating the complex web structure of Amazon and understanding the legal and ethical considerations of web scraping can be challenging. In this step-by-step guide, we'll walk you through the process of setting up your environment, scraping Amazon product data effectively, and storing it for further analysis.

Understanding Amazon's Web Structure for Effective Scraping

Amazon's web architecture is complex, with dynamic content loading and AJAX calls that can make web scraping challenging. To effectively extract data, it's crucial to understand how Amazon structures its web pages.

Key considerations for scraping Amazon include:

- Identifying the correct HTML elements that contain the desired data, such as product names, prices, and descriptions

- Handling dynamic content that loads after the initial page load, often through AJAX calls

- Navigating through pagination and dealing with inconsistencies across different product categories

By familiarizing yourself with Amazon's web structure and the specific elements that hold the data you need, you can develop targeted scraping techniques. This knowledge will help you build robust scrapers that can handle the complexities of Amazon's website and extract accurate information for your market research or competitor analysis.

Setting Up Your Environment for Scraping Amazon

To effectively scrape Amazon without code, you need to set up your environment with the right tools and programming languages. Python is one of the most popular choices for web scraping due to its simplicity and powerful libraries like Beautiful Soup and Scrapy.

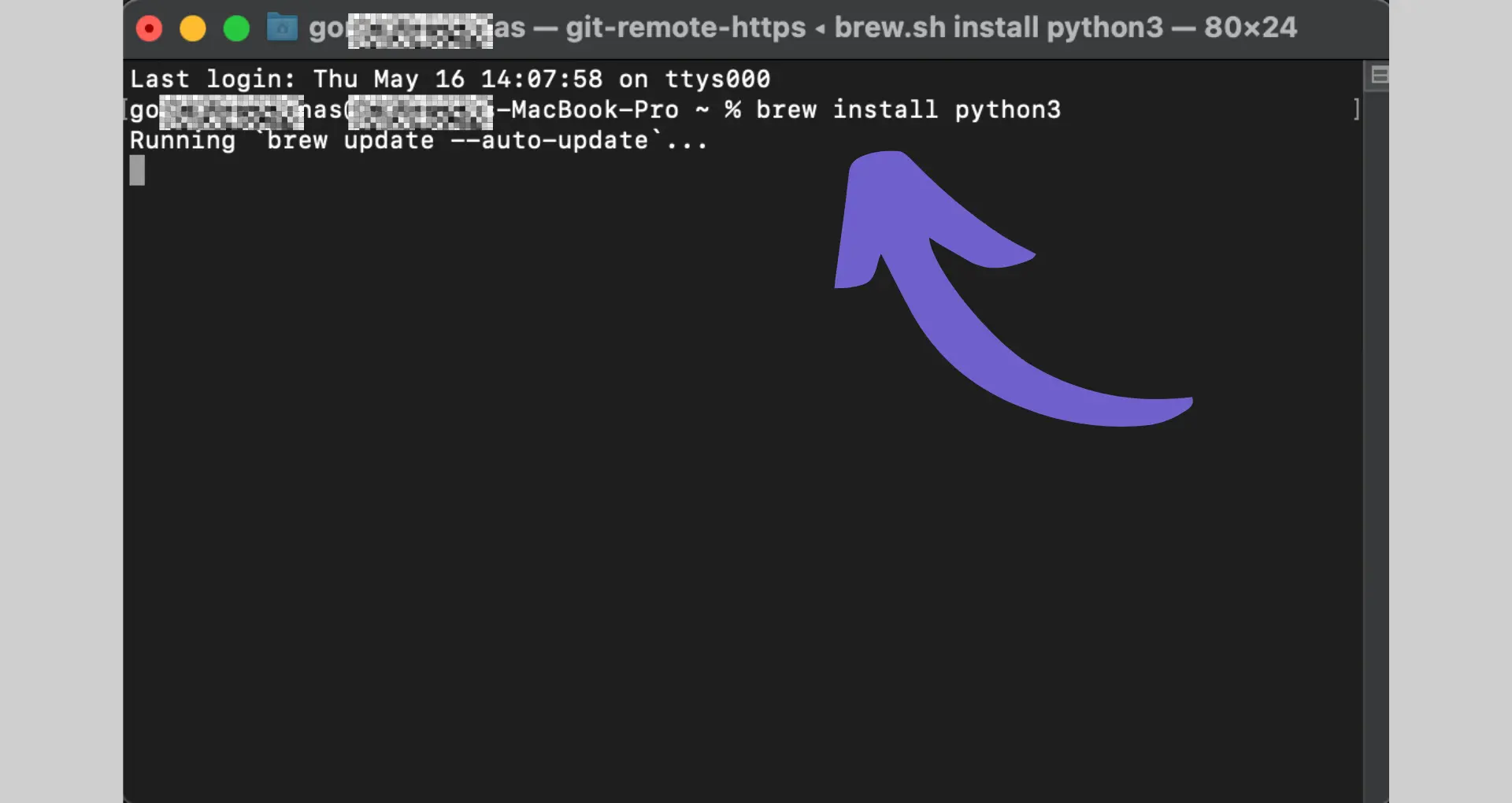

Here's a step-by-step guide to setting up your development environment for Amazon scraping:

- Install Python: Download and install the latest version of Python from the official website (python.org). Make sure to choose the appropriate version for your operating system.

- Set up a virtual environment: Create a virtual environment to keep your project dependencies isolated. Open a terminal and navigate to your project directory, then run the following commands:

- python -m venv myenv

- source myenv/bin/activate (for Unix/Linux)

- myenv\Scripts\activate (for Windows)

- Install required libraries: With your virtual environment activated, install the necessary web scraping tools. Run the following command to install Beautiful Soup and Scrapy:

- pip install beautifulsoup4 scrapy

- Choose an IDE or text editor: Select an integrated development environment (IDE) or text editor to write your Python code. Some popular choices include PyCharm, Visual Studio Code, and Sublime Text.

- Test your setup: Create a new Python file and write a simple script to test your environment. For example, you can try scraping an Amazon book series list page using Beautiful Soup or Scrapy to ensure everything is working correctly.

By following these steps, you'll have a robust development environment ready for scraping Amazon. Remember to keep your libraries and tools up to date and experiment with different configurations to find the setup that works best for your specific scraping needs.

Using Bardeen's Amazon book series list Playbook can save time. Automate scraping and focus on important tasks.

Advanced Techniques for Avoiding Detection and Captchas

When scraping data from Amazon, it's crucial to employ techniques that minimize the risk of detection and prevent your scraper from being blocked or faced with captcha challenges. Here are some advanced methods to help you avoid detection:

- Using Proxies: Rotating your IP address using a pool of proxies can help distribute your requests across different IP addresses, making it harder for Amazon to detect and block your scraper. Consider using a mix of data center, residential, and mobile proxies to mimic organic user traffic.

- Rotating User Agents: Regularly change the user agent string in your request headers to mimic different browsers and devices. This makes your requests appear to originate from various sources, reducing the chances of detection.

- Implementing Delays: Introduce random delays between your requests to avoid sending them at a predictable interval. This helps simulate human browsing behavior and prevents overwhelming Amazon's servers, which could trigger anti-scraping measures.

- Handling Captchas: If you encounter captcha challenges, you can use captcha-solving services like 2Captcha or AntiCaptcha to automatically solve them. These services provide APIs that seamlessly integrate into your scraping workflow, allowing you to bypass captchas programmatically.

- Avoiding Honeypot Traps: Be cautious of honeypot traps set by Amazon to identify and block scrapers. These traps often include invisible links or elements designed to lure scrapers. Analyze the page structure and avoid interacting with suspicious elements that are hidden from normal users.

By implementing these advanced techniques, you can significantly reduce the likelihood of your Amazon scraper being detected and blocked. However, keep in mind that scraping should be done responsibly and in compliance with Amazon's terms of service to avoid any legal issues.

Using Bardeen's Amazon product data automation can save time. Focus on important tasks while automating data collection.

Extracting and Storing Amazon Data Efficiently

When scraping Amazon product data, it's crucial to extract relevant information efficiently and store it in a structured format for further analysis. Here are some key considerations:

- Handling Pagination: Amazon search results are often divided into multiple pages. To ensure you capture all relevant products, you need to navigate through these pages programmatically. Look for pagination elements like "Next" or "Page X" links and use their URLs to move to the next page of results.

- Extracting Relevant Data: Focus on extracting essential product information such as title, price, rating, reviews, images, and description. Use appropriate CSS selectors or XPath expressions to locate and extract these data points accurately. Be selective and avoid unnecessary data to optimize storage and processing.

- Structuring Extracted Data: Organize the scraped data into a structured format like JSON or CSV. Create a consistent schema that includes fields for each data point you extracted. This structured data will be easier to store, query, and analyze later.

- Storing Scraped Data: Choose a storage solution that can handle the volume of data you expect to scrape. Options include databases like MySQL, PostgreSQL, or MongoDB, or cloud storage services like Amazon S3 or Google Cloud Storage. Consider factors like scalability, query performance, and cost when selecting a storage option.

- Handling Data Updates: If you need to keep your scraped data up to date, consider implementing incremental updates. Store timestamps or unique identifiers for each product and only scrape and update records that have changed since the last scrape. This approach saves time and resources compared to re-scraping the entire dataset.

By efficiently extracting relevant data, structuring it properly, and storing it in a scalable solution, you can build a valuable dataset of Amazon product information for analysis and insights.

Automating and Scheduling Scraping Tasks

Automating and scheduling your Amazon scraping tasks is essential for keeping your data up-to-date and gaining fresh insights for market analysis. Here are some key points to consider:

- Cron Jobs (Linux): On Linux systems, you can use cron jobs to schedule and automate your scraping scripts. Cron allows you to specify the frequency and timing of your scraping tasks, such as running the script daily at a specific time.

- Task Scheduler (Windows): For Windows users, the Task Scheduler is a built-in tool that enables you to automate and schedule your scraping tasks. You can create a new task, specify the script to run, and set the desired frequency and timing.

- Cloud Platforms: If you prefer a cloud-based solution, platforms like AWS Lambda or Google Cloud Functions allow you to run your scraping scripts on a serverless architecture. You can set up triggers or schedules to automatically execute your scraping tasks.

- Monitoring and Logging: Implement proper monitoring and logging mechanisms to track the progress and status of your scheduled scraping tasks. This helps you identify any issues or failures and ensures the reliability of your data collection process.

- Data Freshness: Consider the freshness requirements of your scraped data. Determine how frequently you need to update your dataset based on your business needs and the dynamic nature of the Amazon marketplace. Adjust your scraping schedule accordingly to ensure you have the most up-to-date information.

By automating and scheduling your Amazon scraping tasks, you can streamline your data collection process, reduce manual effort, and ensure a consistent flow of fresh data for analysis. This enables you to make timely decisions based on the latest market trends and competitor insights.

Using Bardeen's Amazon product data automation can save time. Focus on important tasks while automating data collection.

Automate Amazon Data Extraction with Bardeen

While web scraping Amazon can be accomplished manually as detailed in the guide, automating this process can significantly enhance efficiency and accuracy. Bardeen offers powerful automation capabilities that can streamline the scraping of product data from Amazon, saving you time and providing structured data ready for analysis. Automating data extraction from Amazon is particularly valuable for competitive analysis, price monitoring, and gathering product details without the need for manual intervention.

Here are some examples of automations that can be built with Bardeen using the provided playbooks:

- Get data from currently opened Amazon books series list: This playbook extracts data from an Amazon book series list page, providing valuable information such as price, product links, and names. It's particularly useful for publishers and authors for market research and competitive analysis.

- Get data from Amazon product page: Access detailed product data directly from an Amazon product page. This automation is crucial for e-commerce businesses looking to compare product specifications and pricing.

- Save Amazon best seller products to Coda every week: Keep track of trending products by automatically saving Amazon best seller data to Coda on a weekly basis. This playbook supports market trend analysis and inventory planning.

Automating these tasks with Bardeen not only saves time but also ensures you have the latest data at your fingertips for informed decision-making. Start automating your Amazon web scraping today by downloading the Bardeen app at Bardeen.ai/download.

.svg)

.svg)

.svg)